Educational Methodologies

Using OSCE-Based Evaluation: Curricular Impact over Time

Rosemarie R. Zartman, D.D.S., M.S.; Alton G. McWhorter, D.D.S., M.S.; N. Sue Seale, D.D.S., M.S.D.; William John Boone, Ph.D.

Abstract: The Objective Structured Clinical Examination (OSCE) is becoming more widely used for performance assessment in dentistry. The Department of Pediatric Dentistry at Baylor College of Dentistry (BCD) began incorporating the OSCE into its curriculum in 1995. This article describes the evolution of the department's use of the OSCE and its impact on teaching and the curriculum. The discussion focuses on logistics and station design, curricular content and order, student anxiety, writing and scoring exams, and curriculum assessment. BCD has found that using an OSCE-based testing format is time-consuming and labor-intensive, but provides unprecedented feedback about students' understanding and pinpoints areas of confusion. The demands of an OSCE-based testing format reveal that students can master, to the level of competency, only a finite amount of information in a given time period. The timed, interactive aspects of the OSCE create high levels of student anxiety that must be addressed. Writing and scoring OSCE items are different from writing and scoring traditional test items. The OSCE is a valuable mechanism to assess the students' progress toward competency. This review of the process of incorporating OSCEs into a curriculum is the foundation for future assessment of the OSCE and its use for curricular improvement.

Dr. Zartman is Assistant Professor, Dr. McWhorter is Associate Professor, and Dr. Seale is Regents Professor and Chairman, Department of Pediatric Dentistry, Baylor College of Dentistry, Texas A&M University System Health Science Center; Dr. Boone is Associate Professor of Science and Environmental Education and Coordinator of the Program in Science and Environmental Education, Indiana University. Direct correspondence to Dr. Rosemarie Zartman, P.O. Box 660677, Department of Pediatric Dentistry, Baylor College of Dentistry, Texas A&M University System Health Science Center, Dallas, TX 75266-0677; 214-828-8456 phone; 214-828-8132 fax; rzartman@tambcd.edu.
Key words: OSCE, assessment of competency, Rasch analysis, curriculum

Submitted for publication 8/22/02; accepted 10/14/02

The Objective Structured Clinical Examination (OSCE) requires that examinees rotate through a series of stations in which they perform a variety of clinical tasks.1 It has been used successfully in medicine since Harden and Gleeson introduced it in 1975 as a procedure to assess clinical competence at the bedside using timed stations.2 Harden and Gleeson described two types of stations: procedure stations and question stations. Procedure stations involving history-taking often used simulated patients as an alternative to actual patients. Review of the medical literature reveals that a variety of formats have been used in administering the OSCE, and much of the literature focuses on its use in specialty programs.

Performance assessments such as the OSCE are only now becoming more widely used in dentistry. In 1994, the National Dental Examining Board of Canada (NDEB) began to include an OSCE as part of the certification process.3 OSCEs have also been used for summative in-course assessments at the Royal London School of Medicine and Dentistry and to provide feedback in restorative dentistry midway through the fourth year at Leeds Dental Institute in the United Kingdom.4,5

Several publications have focused on the use of standardized patients (SPs) in dental education. Johnson and Kopp described the use of an SP-based format for comprehensive treatment planning and emergency cases.1 Stilwell and Reisine used a patient-instructor (PI) program to teach and give individual feedback to a class of third-year dental students.6 Using carefully trained lay people to consistently portray a particular patient (standardized patient) and to assess students' performance is a viable method for teaching and evaluating clinical interviewing skills.7 It can also be used to provide formative feedback throughout a predoctoral curriculum; however, there is nothing in the dental literature supporting this use.

The Department of Pediatric Dentistry at Baylor College of Dentistry (BCD) incorporated OSCEs into the curriculum in 1995. Results of what we have learned by using the OSCE have been the subject of four Faculty Development Workshops presented at Annual Sessions of the American Dental Education Association since 1997. The purpose of this paper is to describe the evolution of the use of an OSCE-based testing format and the impact it has had on our program.

December 2002

Journal of Dental Education

Logistics and Station Design

When we first began to use the OSCE format, the logistics of developing and administering the examination were somewhat daunting and consumed all our energy and efforts. We soon discovered that three crucial factors must be considered at the beginning of the process: 1) the size of the class being examined, 2) the amount of space available, and 3) the time allowed for administering the exam. All other logistical considerations can be manipulated to accommodate these fixed variables.

Timed interactive stations are the hallmark of the OSCE format. Station types reported in the literature vary from short, simple, static exhibits5 to lengthy sophisticated stations with a standardized patient.8 At BCD, we have identified three categories of stations: 1) interactive, nonproctored stations where the student examines materials and provides a written answer to the question; 2) observed stations where the student performs a task in front of a passive observer who judges his or her performance; and 3) proctored, interactive stations where the student interacts with a proctor trained to act as a standardized patient or parent and also to serve as a judge of the student's performance.

The two station types using proctors require trained personnel who must be present for the entire exam. Some proctors must be dentists, while others can be nondentists. We found that recruitment from outside our discipline is possible because the proctors are trained and standardized prior to the exam. Ultimately, the size and makeup of the workforce available to participate in the examination determine the numbers and types of proctored stations that can be used. Once the space available, time allotted to the exam, class size, and available workforce have been determined, the numbers, lengths, and types of stations can be finalized.
For example, at BCD, for a midterm examination of third-year students, we have a space allotment of two 100-station laboratories and one lecture room, an exam time of two hours, a class

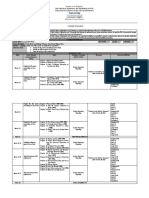

size of eighty-seven, and an available workforce of twenty composed of full- and part-time faculty, graduate students, department staff, and individuals recruited from outside the department. For exam security, we examine all students at one time. The class is randomly divided in half, and during the first hour, one-half (forty-three) of the students take a written exam (not described further in this paper) while the other half (forty-four) take the OSCE. The groups are reversed during the second hour. Therefore, the total time allocated for the administration of the OSCE is sixty minutes. This constricted time frame is dictated by curricular scheduling outside of our control; though challenging, it is adequate for a meaningful examination experience, as this is one of three OSCEs administered during the term. To rotate forty-four students through the OSCE in sixty minutes, the total must be divided into smaller, more manageable groups. Allowing five minutes for students to find their places and receive instructions and ten minutes to transfer them at the end of the exam, we have approximately forty-five minutes to actually administer the examination. Since we use four-minute stations, there can be eleven stations in forty-five minutes. The result is four groups of eleven students each. All four groups take the OSCE simultaneously; therefore, all stations must be duplicated four times, and four different proctors must be standardized for each proctored station. In this scenario, given a workforce of twenty, there could be as many as five proctored stations. All proctored stations have a performance checklist of designated tasks that assist the proctor in judging the students performance. Nonproctored stations require written answers that must be graded after the exam is completed. Keys for these stations are prepared in advance to facilitate standardization in grading. 
The space allocated for the four OSCEs is in the two laboratories, and two groups of eleven students each are assigned to each laboratory. Due to the close proximity of OSCE stations, each has a three-sided, foam-board barricade for privacy. Figure 1 depicts a rotation chart for stations for two identical OSCEs being administered simultaneously in one laboratory. With time and experience, the logistics of developing and administering the OSCEs consumed less energy, and we began to focus on the valuable feedback provided through observing the students and grading their responses.
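The station arithmetic described in this section can be sketched in a few lines of Python. This is only an illustration of the reasoning, not a tool used at BCD; the function and field names (`plan_osce`, `OsceLogistics`) are hypothetical, and the sketch assumes that every student visits each station exactly once and that each proctored station needs one proctor per parallel group.

```python
from dataclasses import dataclass


@dataclass
class OsceLogistics:
    stations_per_group: int      # stations each student rotates through
    parallel_groups: int         # identical OSCEs run at the same time
    max_proctored_stations: int  # upper bound set by the workforce


def plan_osce(students, session_minutes, setup_minutes, transfer_minutes,
              station_minutes, workforce):
    """Derive station and group counts from the fixed constraints."""
    usable_minutes = session_minutes - setup_minutes - transfer_minutes
    # Each student occupies one station per time slot, so the number of
    # stations that fit in the usable time is also the size of one group.
    stations = usable_minutes // station_minutes
    # Ceiling division: enough identical groups to seat every student.
    groups = -(-students // stations)
    # Assumption: one trained proctor per proctored station per group.
    proctored = min(workforce // groups, stations)
    return OsceLogistics(stations, groups, proctored)


# Figures taken from the midterm example in the text.
plan = plan_osce(students=44, session_minutes=60, setup_minutes=5,
                 transfer_minutes=10, station_minutes=4, workforce=20)
print(plan)
```

With the figures from the text, the sketch reproduces eleven stations, four simultaneous groups, and at most five proctored stations.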


Journal of Dental Education Volume 66, No. 12

Figure 1. Rotation chart for stations for two identical OSCEs given simultaneously in one laboratory
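A rotation chart of this kind can be generated programmatically. The sketch below assumes a simple cyclic scheme in which each student starts at a different station and moves to the next station at every time signal; the actual layout in Figure 1 may differ, and the function name `rotation_chart` is hypothetical.

```python
def rotation_chart(num_stations):
    """Return chart[period][student] = station number (1-based).

    Assumes a cyclic rotation: student i starts at station i + 1 and
    advances by one station each period, wrapping around at the end.
    """
    return [[(student + period) % num_stations + 1
             for student in range(num_stations)]
            for period in range(num_stations)]


chart = rotation_chart(11)

# Stations visited, in order, by the first student in a group of eleven.
first_student_path = [row[0] for row in chart]
print(first_student_path)
```

Under this scheme no two students share a station in any period, and every student visits every station exactly once.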

Curriculum Content and Order

The need for curricular modification in pediatric dentistry at BCD became evident within our first year of experience with the OSCE-based testing format. The interactive nature of the OSCE, which requires students to use written and verbal communication as well as problem-solving skills, provided us with unprecedented feedback about their understanding and mastery of concepts. Published reports on the use of standardized patients in dental OSCE stations have also identified this improved level of feedback concerning areas of student misunderstanding as a finding associated with the format.1,8 The fact that OSCE questions are open-ended eliminates the advantage of recognition or guessing possible when taking multiple-choice tests; thus, the students' answers reflect their core comprehension.

Our most overwhelming and alarming discovery was how confused our students were over what we believed to be the most basic concepts. As we searched for the source of their confusion, we quickly realized our curriculum was overloaded with nonessential information. Concomitant with our initial OSCE discoveries was the movement in dental education to competency-based teaching. Our experiences with the OSCE forced us to evaluate and streamline our curriculum using the competency-based concept of "necessary to know" versus "nice to know." Achievement of competency requires mastery of basic information necessary for a beginning practitioner. Testing for mastery of information involves assessment of higher-order critical thinking and problem-solving skills that require in-depth command of concepts.

Our experiences with OSCEs revealed multiple areas of confusion that required reteaching and retesting. In order to produce a student who gave evidence of mastery, concepts from the elaboration theory were employed.9 All important fundamental concepts were introduced early in the second-year course, so they could be built upon as the student progressed. A traditional preclinical technique course consisting of twelve hours of lecture and thirty-six hours of laboratory was converted to forty-eight one-hour modules taught to small groups of five to six students. Each module was hands-on and interactive in format, and all of the basic pediatric dentistry concepts were introduced during this course. The goal was to produce a safe beginner who could enter the clinic in the third year. Traditional lectures were replaced in the third and fourth years with small-group, interactive seminars that used multiple approaches to reinforce and build on basic concepts.

The OSCE format is now used throughout the pediatric dentistry curriculum to identify areas of misunderstanding and confusion so that reteaching and retesting can take place. A total of seven OSCEs are given throughout the pediatric dentistry didactic curriculum, and focus groups are routinely held to assess students' attitudes and concerns about the exam. Time is made available to review exams and provide feedback to students about their performance.

Student Anxiety Concerning the OSCE

Changes in curriculum format can result in anxiety for students as well as for those involved in implementing the changes. Faculty who do not attend to feedback and subtle cues from students can overlook the fact that students are experiencing their own stressors. During the first administrations of the OSCE, there were multiple anecdotal reports from station proctors that some students had shaking hands and quivering voices at the proctored, interactive stations. There was even one incident where a student experienced such a heightened level of anxiety that she could not complete the exam.

These observed behaviors led the faculty to include focus group sessions concerning the OSCE with each group of students in the second-year pediatric dentistry course. Each group of six students met for fifty minutes with a facilitator who was an educational specialist employed by the college and who did not participate in the course or exam. The facilitator followed a list of open-ended questions about the course and the OSCE to elicit student comments. By using small groups of students with a facilitator not involved in the course or exam, our intent was to encourage frank, uninhibited feedback.

The facilitator asked the students: Was the OSCE straightforward? Did you have enough time at each station? How can the OSCE be better described so you will know how to study? A majority of the student groups (nine out of fourteen) said that they needed more time at some of the stations. Students expressed concern that the format was still unfamiliar. Some reported feeling panic when the classmate in front of them accidentally walked away with materials from that station or if a classmate did not leave the station quickly enough. A moderate number of student groups (six out of fourteen) felt that some of the questions were vague. Other comments described frustration with the quality of some of the materials used at stations; this frustration caused them to lose focus at subsequent stations, thereby increasing their anxiety. It was apparent that what we might interpret to be small details could be sources of stress that could have a snowball effect on students. In addition to focus group feedback, the most common anecdotal feedback to faculty was that students reported feeling very threatened by the observed nature of the proctored OSCE stations and the required role-play at interactive stations.

Using the focus group feedback, the faculty created practice OSCE modules to be included within the course for the purpose of lowering stress among students. Practice OSCEs were used within a lesson module during the course and in a stand-alone module that closely simulated the exams given during the course. For the practice OSCE module, a classroom was set up to simulate (on a smaller scale) the administration of an actual test. Five stations were set up with the same types of materials used in a real OSCE, including props such as dental models and radiographs, answer sheets for students to record their answers, foam-board barricades, and arrows attached to the tables to help direct students from station to station. The faculty member coordinating the practice OSCE gave directions to the students and operated a timer to cue them to move through all five stations.

This practice OSCE did not count for a grade, but after the exercise, students reviewed correct answers with the faculty leader. The faculty member also used this time to answer students' questions and give advice on how to study for these exams. The focus was on the OSCE format and logistics rather than on reviewing course information. The outcome was a student who had more practice with this new format and was more familiar with the types of questions that would appear. Because students were better prepared and more relaxed, the graded exams gave us a better sense of students' understanding: the reduction in anxiety levels had improved their performance.


The grading of the OSCE was also a source of anxiety for students. Students expressed concerns that some proctors graded harder than others. Students indicated that some of the questions were too difficult to interpret and/or answer within the four-minute time limit of the stations. These issues required the faculty to look at ways to improve the standardization of the proctors and the grading process. Our standardization of proctors involves writing and using scripts for proctors who talk to students so that each student is asked the same question. When there were multiple proctors for the same station, these individuals met to practice scenarios of students and proctors interacting at that station. This helped refine the proctors' responses during the interactions. We realize that we have more work to do, but what we have learned has affected our test construction as well as what we do with the test answers we collect from students. The test answer data reveal valuable information when analyzed.

Scoring Exams/Writing Exams

The performance data obtained from students at OSCE stations include written answers, proctor or observer evaluations of performance of a task, and student materials left at stations for grading after the exam is over. The resulting data are very different from traditional test data that can often be immediately scored electronically. The OSCEs are scored either by the proctor or by a faculty member who scores all of the students' answers for a given nonproctored station. Having different scorers for an examination creates the need for checks on standardization of the scoring process.

For proctored and observed stations scored during the exam, it is essential to create forms called proctor sheets (checklists) that include all the tasks the student should demonstrate to receive credit for performing the prescribed procedure. This causes the faculty to break down a given clinical skill or treatment into all the pieces or subskills that make up the total action. For a dental student treating a pediatric patient, for example, giving local anesthesia includes the subskills of knowing the correct tooth to anesthetize, using behavioral management techniques, knowing where to place the needle, etc. (Figure 2). A variety of skills, including communication, motor, and cognitive skills, are all involved in the same station.

For all three types of stations, faculty must determine what the expected answer will be and then break the answer down into its smallest subskills or items before the exam is given. This process allows the creation of reliable proctor sheets, and for the answers scored after the exam, it facilitates creation of a grading key that helps maintain consistency among faculty when grading the entire group. When the exam is scored as a distribution of items, a judge (proctor or faculty) needs to decide only whether the student performed the item correctly, partially correctly, or incorrectly.

Sometimes students provide answers that are partially correct, but are not the expected responses on our exam key. These instances have created a need to scrutinize each question before it becomes part of the exam. Several faculty members thus read a proposed question and make revisions to it. This process of review and revision continues until all are satisfied that the question should not be misinterpreted. The creation of exam questions, key, and proctor sheets takes place over several weeks.

Since our items are scored as correct, partially correct, or incorrect, we assign each a specific point value. For example, an item may be worth a total of four points. If a student scores the item totally correctly, he or she receives four points toward the total score. If the faculty judge determines the answer is partially correct, the student earns two points. If the student answered the item incorrectly, he or she receives no points for that item. Students' total raw scores are tabulated and then converted to a traditional grade on a letter scale. One advantage of this method is that difficult items that test higher-order skills or items inherent to the skill can be assigned higher point values than the easier items. This is known as weighting of the exam questions. The student receives feedback in the form of a grade.
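The weighted scoring described above can be illustrated with a short Python sketch. The item names and point values below are hypothetical, and the sketch assumes that a partially correct answer always earns half of an item's points, as in the four-point example in the text. The conversion to a letter grade is omitted because the cutoffs are not given.

```python
# Fraction of an item's points earned for each judgment.
# Assumption: "partial" is always worth half, as in the 4-point example.
CREDIT = {"correct": 1.0, "partial": 0.5, "incorrect": 0.0}


def raw_score(item_points, judgments):
    """Sum the weighted points a student earned across all items."""
    return sum(item_points[item] * CREDIT[judgment]
               for item, judgment in judgments.items())


# Hypothetical items for a local anesthesia station; harder or more
# essential items carry more points (weighting).
item_points = {
    "correct_tooth": 4,
    "needle_placement": 4,
    "behavior_management": 2,
}

# One student's judgments as recorded on the proctor sheet.
judgments = {
    "correct_tooth": "correct",
    "needle_placement": "partial",
    "behavior_management": "incorrect",
}

score = raw_score(item_points, judgments)
maximum = sum(item_points.values())
print(f"{score} of {maximum} points")
```

Keeping the judgment (correct, partial, incorrect) separate from the point value means the same judgments can later be recoded for analysis without regrading.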
Figure 2. Proctor sheet for OSCE station at which the student demonstrates local anesthesia administration

Faculty receive feedback in the form of student responses to items on a clinically based, performance-based examination. Student responses are converted into data spreadsheets relatively easily because they are in the form of items that fall into one of three categories: correct, partially correct, or incorrect. Each category is designated by a number (2, 1, or 0) in the spreadsheet for each item. With the help of a measurement expert, the data can be analyzed. Rasch analysis10 allows us to produce maps that demonstrate how the tested group of students has performed. We have coded items that appear on this type of map to better describe them. For instance, an item can be described by the content area (domain) that we are testing, such as the restorative domain. The item can be described by the skill it tests, such as a communication skill. The item can also be described by the faculty's assessment of the difficulty of the item. The maps show item difficulty more clearly than the simple calculation of percentages does. This information, paired with the item descriptors (domain, skill, faculty assessment of difficulty), has become the backbone of our process toward exam and course improvement.
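The spreadsheet coding described above can be sketched as follows. This is a minimal illustration with hypothetical item names, descriptors, and student responses; it shows only the 2/1/0 coding and the simple per-item percentage, not the Rasch analysis itself, which would be carried out separately with a measurement expert.

```python
from dataclasses import dataclass

# Spreadsheet codes for the three scoring categories.
CODES = {"correct": 2, "partial": 1, "incorrect": 0}


@dataclass(frozen=True)
class Item:
    name: str
    domain: str              # content area, e.g. "restorative"
    skill: str               # e.g. "communication"
    faculty_difficulty: str  # faculty's own estimate of difficulty


def code_responses(responses, items):
    """Build one row of 2/1/0 codes per student, one column per item."""
    return {student: [CODES[answers[item.name]] for item in items]
            for student, answers in responses.items()}


def percent_of_max(matrix, items):
    """Simple per-item percentage of the maximum possible code total."""
    n_students = len(matrix)
    return {item.name: 100.0 * sum(row[col] for row in matrix.values())
            / (2 * n_students)
            for col, item in enumerate(items)}


# Hypothetical items and responses, for illustration only.
items = [
    Item("explain_treatment", "restorative", "communication", "hard"),
    Item("identify_tooth", "restorative", "cognitive", "easy"),
]
responses = {
    "s1": {"explain_treatment": "correct", "identify_tooth": "correct"},
    "s2": {"explain_treatment": "partial", "identify_tooth": "correct"},
    "s3": {"explain_treatment": "incorrect", "identify_tooth": "partial"},
}

matrix = code_responses(responses, items)
percentages = percent_of_max(matrix, items)
print(matrix)
print(percentages)
```

Storing the descriptors alongside each item makes it straightforward to group results by domain, skill, or expected difficulty once the analysis has been run.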

Curriculum Assessment

Earlier we discussed how we used OSCE data concerning student performance to manage our curriculum by reducing its scope and revising its sequencing. The most recent development from our use of OSCE-based testing has been the realization that the data obtained have broader application than merely grading student performance. As we have become more skilled at designing examinations to facilitate data analysis, we have come to realize that these analyses can be used to assess the overall effectiveness of the curriculum. By definition, curricular assessment should be used to determine whether the curriculum is doing what it is supposed to do: produce a competent beginning practitioner. Most information in the dental literature concerning curricular assessment is directed either at whole-school curriculum in preparation for accreditation site visits or at individual courses based on student evaluations.11,12 There is a dearth of information about how to assess, over time, the effectiveness of a discipline-based curriculum to produce the desired result.

At BCD, a number of approaches to curriculum assessment have been developed using a Rasch analysis of OSCE data from our students' performance throughout their three years in the pediatric dentistry curriculum. These have led to the development of purposeful assessment techniques (PAT), which were discussed in detail in an earlier paper.13 Briefly, PAT are those techniques that help provide valid and reliable measures that are informative to students, faculty, and administrators and allow one to work toward development of linear measurement scales that are person-free and item-free. These are being used to assess the effectiveness of our pediatric dentistry curriculum.

Summary

Published reports concerning use of OSCE-based testing formats, both in the medical and dental literature, indicate that OSCEs have many formats and uses. This paper described the effects an OSCE-based testing format has had on our predoctoral curriculum in pediatric dentistry over a seven-year period. The following statements summarize the lessons learned:

1. The use of an OSCE-based testing format is time-consuming and labor-intensive, requires extensive resources, and provides unprecedented feedback about students' understanding and areas of confusion concerning basic concepts.
2. The demands of an OSCE-based testing format reveal that students can master, to the level of competency, only a finite amount of information in a given time period. Therefore, competency-based education requires a streamlined curriculum.
3. The timed, interactive aspects of the OSCE create high levels of student anxiety that can impact performance among students unfamiliar with the format. For students' performance to be reflective of their actual knowledge, strategies must be implemented to desensitize students to the OSCE testing format.
4. Writing and scoring OSCE items are different from writing and scoring traditional test items and require standardization of judges and graders, weighting of items, and mixing items by skill set, difficulty, and domain being tested.
5. The OSCE is a valuable mechanism to assess curriculum, not only for the content but also its effectiveness. A Rasch analysis of OSCE-based testing data provides a means to develop and use tools for this purpose.

REFERENCES

1. Johnson JA, Kopp KC, Williams RG. Standardized patients for the assessment of dental students' clinical skills. J Dent Educ 1990;54:331-3.
2. Harden RM, Gleeson FA. Assessment of clinical competence using an objective structured clinical examination (OSCE). Med Educ 1979;13:41-54.
3. Gerrow JD, Boyd MA, Duquette P, Bentley KC. Results of the National Dental Examining Board of Canada written examination and implications for certification. J Dent Educ 1997;61:921-7.
4. Davenport ES, Davis JEC, Cushing AM, Holsgrove GJ. An innovation in the assessment of future dentists. Br Dent J 1998;184:192-5.
5. Manogue M, Brown G. Developing and implementing an OSCE in dentistry. Eur J Dent Educ 1998;2:51-7.
6. Stillwell NA, Reisine S. Using patient-instructors to teach and evaluate interviewing skills. J Dent Educ 1992;56:118-22.
7. Proceedings of the AAMC's consensus conference on the use of standardized patients in the teaching and evaluation of clinical skills. Acad Med 1993;68 [entire issue].
8. Logan HL, Muller PJ, Edwards Y, Jakobsen JR. Using standardized patients to assess presentation of a dental treatment plan. J Dent Educ 1999;63:729-37.
9. Reigeluth CM, Darwazeh A. The elaboration theory's procedure for designing instruction. J Instr Dev 1982;5:22-32.
10. Rasch G. Probabilistic models for some intelligence and attainment tests. Copenhagen: Danmarks Paedagogiske Institut, 1960; Chicago: University of Chicago Press, 1980.
11. Collett WK, Gale MA. A method to review and revise the dental curriculum. J Dent Educ 1985;49:804-8.
12. Cohen PA. Effectiveness of student ratings feedback and consultation for improving instruction in dental school. J Dent Educ 1991;55:145-50.
13. Boone WJ, McWhorter AG, Seale NS. Purposeful assessment techniques (PAT) applied to an OSCE-based measurement of competencies in a pediatric dentistry curriculum. J Dent Educ 2001;65:1232-7.
