Online Analytical Processing

Uploaded by Faheema T Moosan

CHAPTER 1 INTRODUCTION

1.1 Online Analytical Processing

On-Line Analytical Processing (OLAP) is a category of software technology that enables analysts, managers and executives to gain insight into data through fast, consistent, interactive access to a wide variety of possible views of information that has been transformed from raw data to reflect the real dimensionality of the enterprise as understood by the user. OLAP functionality is characterized by dynamic multi-dimensional analysis of consolidated enterprise data, supporting end-user analytical and navigational activities including: trend analysis over sequential time periods; slicing subsets for on-screen viewing; drill-down to deeper levels of consolidation; reach-through to underlying detail data; and rotation to new dimensional comparisons in the viewing area.

OLAP is implemented in a multi-user client/server mode and offers consistently rapid response to queries, regardless of database size and complexity. OLAP helps the user synthesize enterprise information through comparative, personalized viewing, as well as through analysis of historical and projected data in various "what-if" data model scenarios. This is achieved through use of an OLAP server. An OLAP server is a high-capacity, multi-user data manipulation engine specifically designed to support and operate on multi-dimensional data structures. A multi-dimensional structure is arranged so that every data item is located and accessed based on the intersection of the dimension members which define that item. The term OLAP dates back to 1993, but the ideas, technology and even some of the products have origins long before then.

Computer Science and Engineering Department

KMEA Engineering College|Edathala

How does it work?

OLAP technology consists of two major components, the server and the client. Typically the server is a multi-user, LAN-based database that is loaded either from your legacy systems or from your data warehouse. You don't need a data warehouse in order to implement OLAP, but if you have historical data, OLAP's visualization capabilities will reveal patterns of your business process that are hidden in the data.

The Server

Think of OLAP databases as multi-dimensional arrays or cubes of data, actually cubes of cubes, capable of holding hundreds of thousands of rows and columns of both text and numbers. The current terminology for these database servers is Multi-Dimensional Databases (MDDs). The MDDs are loaded from your data source (legacy or warehouse) according to an aggregation model that you define. Fortunately, defining the model and loading the database can be very easy. For some OLAP products absolutely no programming is required to build the model or to load the data.

The Client

The client component for several OLAP products presents a spreadsheet-type interface with very special features. Features available in some products include the ability to instantly change the data component of the x, y, or z dimension of your spreadsheet using drag-and-drop. You can change your display from tabular to any one of various charts including pie, bar, stacked bar, clustered bar, line, or multi-line, all with one-second response using drag-and-drop. Exception highlighting is another very nice feature. This allows your display to dynamically change the font, point size, and color of rows or columns based upon the value of a component of the display. You can also hide rows or columns based on dynamic values.

Instant drill-down/drill-up is a particularly valuable feature. For example, placing your mouse over a school name and double clicking can invoke a drill-down to department-level numbers. Double clicking on a department can take you down to account-level data. At any point you can then jump from one graphic or tabular display to the next, including multi-year displays, each with a one second response time.


The performance of OLAP depends on the following:

Aggregations: Materializing aggregations usually leads to a faster query response, since less work is needed to answer a request for cell values.

Partitions: Partitions give you the ability to choose different storage strategies to optimize the tradeoff between processing and querying performance.

Data slices on partitions: Setting a data slice is an efficient way to avoid querying irrelevant partitions.
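The effect of materialized aggregations and data slices can be sketched in a few lines of Python. This is only an illustration under assumed names: `partitions`, `materialize`, `query_total` and the sample rows are hypothetical and do not correspond to any OLAP product's API. Each partition declares the year it holds as its data slice, totals per (year, region) are precomputed once, and a query skips every partition whose slice cannot match.

```python
from collections import defaultdict

# Hypothetical fact rows (year, region, sales), split into one partition per year.
# Each partition's "slice" records which year it holds.
partitions = [
    {"slice": 2004, "rows": [(2004, "East", 100), (2004, "West", 150)]},
    {"slice": 2005, "rows": [(2005, "East", 120), (2005, "West", 180)]},
]

def materialize(partition):
    """Precompute (materialize) total sales per (year, region) for one partition."""
    totals = defaultdict(int)
    for year, region, sales in partition["rows"]:
        totals[(year, region)] += sales
    return dict(totals)

# Aggregations are built once, at processing time.
for p in partitions:
    p["agg"] = materialize(p)

def query_total(year, region):
    """Answer a cell request from the aggregations, visiting only relevant partitions."""
    visited = 0
    total = 0
    for p in partitions:
        if p["slice"] != year:   # the data slice rules this partition out
            continue
        visited += 1
        total += p["agg"].get((year, region), 0)
    return total, visited

print(query_total(2005, "West"))  # (180, 1)
```

The second value returned shows that only one of the two partitions was consulted, which is the saving a data slice is meant to provide.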

Among the key features of OLAP services are:

Ease of use provided by user interface wizards.

A flexible, robust data model for cube definition and storage.

Write-enabled cubes for "what-if" scenario analysis.

Scalable architecture that provides a variety of storage scenarios and an automated solution to the "data-explosion syndrome" that plagues traditional OLAP technologies.

Integration of administration tools, security, data sources and client/server caching.

Widely supported APIs and open architecture to support custom applications.

1.2 Benefits of Using OLAP

OLAP holds several benefits for businesses:

1. OLAP helps managers in decision-making through the multidimensional data views that it is capable of providing, thus increasing their productivity.
2. OLAP applications are self-sufficient owing to the inherent flexibility provided to the organized databases.
3. It enables simulation of business models and problems, through extensive usage of analysis capabilities.
4. In conjunction with data warehousing, OLAP can be used to provide reduction in the application backlog, faster information retrieval and reduction in query drag.


1.3 OLAP Cube

An OLAP cube, as shown in fig (1.3.1), is a data structure that allows fast analysis of data. The arrangement of data into cubes overcomes a limitation of relational databases. Relational databases are not well suited for near-instantaneous analysis and display of large amounts of data. Instead, they are better suited for creating records from a series of transactions, known as OLTP or On-Line Transaction Processing. Although many report-writing tools exist for relational databases, these are slow when the whole database must be summarized.

The OLAP cube consists of numeric facts called measures which are categorized by dimensions. The cube metadata is typically created from a star schema or snowflake schema of tables in a relational database. Measures are derived from the records in the fact table and dimensions are derived from the dimension tables.

Fig (1.3.1) OLAP Cube
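As a rough illustration of measures categorized by dimensions, the sketch below models a tiny cube as a Python dictionary whose keys are one member from each dimension. The dimension names, members and values are invented for the example, and a real OLAP server would use far more compact storage; the point is only that a cell is found at the intersection of its dimension members.

```python
# A tiny cube: each cell is addressed by one member from every dimension.
# Dimension names, members and measure values are made up for illustration.
dimensions = ("product", "city", "month")

cube = {
    ("Tea",    "Kochi",  "2005-05"): 40,
    ("Tea",    "Mumbai", "2005-05"): 65,
    ("Coffee", "Kochi",  "2005-05"): 30,
    ("Coffee", "Kochi",  "2005-06"): 35,
}

def cell(**members):
    """Return the measure at the intersection of the given dimension members."""
    key = tuple(members[d] for d in dimensions)
    return cube.get(key)  # None when the cell is empty

def total(**fixed):
    """Sum the measure over every cell matching the fixed members."""
    return sum(
        value
        for key, value in cube.items()
        if all(key[dimensions.index(d)] == m for d, m in fixed.items())
    )

print(cell(product="Tea", city="Kochi", month="2005-05"))  # 40
print(total(product="Coffee"))                             # 65
print(total(city="Kochi", month="2005-05"))                # 70
```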

Each of the elements of a dimension could be summarized using a hierarchy. The hierarchy is a series of parent-child relationships, typically where a parent member represents the consolidation of the members which are its children. Parent members can be further aggregated as the children of another parent. For example, May 2005 could be summarized into Second Quarter 2005, which in turn would be summarized in the Year 2005. Similarly, the cities could be summarized into regions, countries and then global regions; products could be summarized into larger categories; and cost headings could be grouped into types of expenditure. Conversely, the analyst could start at a highly summarized level, such as the total difference between the actual results and the budget, and drill down into the cube to discover which locations, products and periods had produced this difference.
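The month, quarter and year hierarchy described above can be sketched as follows. The figures and the helper names (`quarter_of`, `year_of`, `roll_up`, `drill_down`) are assumptions made for this example only; summation is used as the consolidation rule, although other rules are possible.

```python
from collections import defaultdict

# Hypothetical monthly sales figures (the leaf level of the time dimension).
monthly = {"2005-04": 10, "2005-05": 20, "2005-06": 30, "2005-07": 5}

def quarter_of(month):
    """Parent of a month: '2005-05' -> '2005-Q2'."""
    year, mm = month.split("-")
    return f"{year}-Q{(int(mm) - 1) // 3 + 1}"

def year_of(quarter):
    """Parent of a quarter: '2005-Q2' -> '2005'."""
    return quarter.split("-")[0]

def roll_up(values, parent_of):
    """Consolidate child members into their parents by summing."""
    totals = defaultdict(int)
    for member, value in values.items():
        totals[parent_of(member)] += value
    return dict(totals)

def drill_down(values, parent_of, parent):
    """Return the children that were consolidated into one parent member."""
    return {m: v for m, v in values.items() if parent_of(m) == parent}

quarterly = roll_up(monthly, quarter_of)
yearly = roll_up(quarterly, year_of)
print(quarterly)  # {'2005-Q2': 60, '2005-Q3': 5}
print(yearly)     # {'2005': 65}
print(drill_down(monthly, quarter_of, "2005-Q2"))
```

Drilling down is simply the reverse lookup: starting from a parent such as the second quarter, the analyst retrieves the child members that were summed into it.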

1.4 OLAP Operations

The analyst can understand the meaning contained in the databases using multidimensional analysis. By aligning the data content with the analyst's mental model, the chances of confusion and erroneous interpretations are reduced. The analyst can navigate through the database and screen for a particular subset of the data, changing the data's orientations and defining analytical calculations. The user-initiated process of navigating by calling for page displays interactively, through the specification of slices via rotations and drill down/up, is sometimes called "slice and dice". Common operations include slice and dice, consolidation, drill down and pivot.

Slicing and dicing: A slice is a subset of a multi-dimensional array corresponding to a single value for one or more members of the dimensions not in the subset. The dice operation is a slice on more than two dimensions of a data cube (or more than two consecutive slices).


Fig (1.4.1) Slicing and dicing
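A minimal sketch of the two operations, assuming a cube stored as a dictionary keyed by (product, city, month). All names and values are illustrative. Slicing fixes one dimension to a single member and drops that dimension from the result; dicing is modeled here as restricting several dimensions to sets of allowed members, which is one common reading of the definition above.

```python
# Illustrative cube keyed by (product, city, month); all values are made up.
cube = {
    ("Tea",    "Kochi",  "May"): 40,
    ("Tea",    "Mumbai", "May"): 65,
    ("Coffee", "Kochi",  "May"): 30,
    ("Coffee", "Kochi",  "Jun"): 35,
    ("Tea",    "Mumbai", "Jun"): 50,
}
AXES = {"product": 0, "city": 1, "month": 2}

def slice_cube(cube, dim, member):
    """Fix one dimension to a single member and drop it from the key."""
    i = AXES[dim]
    return {k[:i] + k[i + 1:]: v for k, v in cube.items() if k[i] == member}

def dice_cube(cube, **allowed):
    """Keep cells whose members fall in the allowed set for each named dimension."""
    return {
        k: v
        for k, v in cube.items()
        if all(k[AXES[d]] in members for d, members in allowed.items())
    }

may = slice_cube(cube, "month", "May")
sub = dice_cube(cube, product={"Tea"}, city={"Mumbai"}, month={"May", "Jun"})
print(may)  # {('Tea', 'Kochi'): 40, ('Tea', 'Mumbai'): 65, ('Coffee', 'Kochi'): 30}
print(sub)  # {('Tea', 'Mumbai', 'May'): 65, ('Tea', 'Mumbai', 'Jun'): 50}
```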

Consolidation: Consolidation involves the aggregation of data. These can be simple roll-ups or complex expressions involving inter-related data. For example, sales offices can be rolled up to districts and districts rolled up to regions.

Drill Down/Up: Drilling down or up is a specific analytical technique whereby the user navigates among levels of data ranging from the most summarized (up) to the most detailed (down).

Fig (1.4.2) Consolidation and Drill Down


Pivot: Pivoting changes the dimensional orientation of a report or page display. It lets the user view the data from a new perspective by rotating its axes.

Fig (1.4.3) Pivot
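Pivoting can be pictured as laying the same cells out with the row and column axes exchanged. The sketch below assumes a two-dimensional set of cells keyed by (product, month); `to_grid` is a hypothetical helper written for this example, not part of any OLAP client.

```python
# Made-up measures keyed by (product, month).
data = {
    ("Tea", "May"): 105, ("Tea", "Jun"): 50,
    ("Coffee", "May"): 30, ("Coffee", "Jun"): 35,
}

def to_grid(cells, row_axis, col_axis):
    """Lay cells out as a grid, taking rows and columns from the chosen key positions."""
    rows = sorted({k[row_axis] for k in cells})
    cols = sorted({k[col_axis] for k in cells})
    lookup = {(k[row_axis], k[col_axis]): v for k, v in cells.items()}
    grid = [[lookup.get((r, c)) for c in cols] for r in rows]
    return rows, cols, grid

# Products down the side, months across the top.
by_product = to_grid(data, 0, 1)
# Pivot: swap the axes so months run down the side.
by_month = to_grid(data, 1, 0)

print(by_product)  # (['Coffee', 'Tea'], ['Jun', 'May'], [[35, 30], [50, 105]])
print(by_month)    # (['Jun', 'May'], ['Coffee', 'Tea'], [[35, 50], [30, 105]])
```

The underlying cells never change; only the assignment of dimensions to the display axes does.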

1.5 History

Online Analytical Processing, or OLAP, is an approach to quickly providing answers to analytical queries that are multi-dimensional in nature. OLAP is part of the broader category of business intelligence, which also encompasses relational reporting and data mining. The typical applications of OLAP are in business reporting for sales, marketing, management reporting, business process management (BPM), budgeting and forecasting, financial reporting and similar areas. The term OLAP was created as a slight modification of the traditional database term OLTP (Online Transaction Processing).

Databases configured for OLAP employ a multidimensional data model, allowing for complex analytical and ad-hoc queries with a rapid execution time. They borrow aspects of navigational databases and hierarchical databases that are speedier than their relational kin. Nigel Pendse has suggested that an alternative and perhaps more descriptive term for the concept of OLAP is Fast Analysis of Shared Multidimensional Information (FASMI). The output of an OLAP query is typically displayed in a matrix (or pivot) format. The dimensions form the rows and columns of the matrix; the measures form the values.

The first product that performed OLAP queries was Express, which was released in 1970 (and acquired by Oracle in 1995 from Information Resources). However, the term did not appear until 1993, when it was coined by Ted Codd, who has been described as "the father of the relational database". Codd's paper resulted from a short consulting assignment which Codd undertook for the former Arbor Software (later Hyperion Solutions, and in 2007 acquired by Oracle), as a sort of marketing coup. The company had released its own OLAP product, Essbase, a year earlier. As a result, Codd's "twelve laws of online analytical processing" were explicit in their reference to Essbase. There was some ensuing controversy, and when Computerworld learned that Codd was paid by Arbor, it retracted the article.

The OLAP market experienced strong growth in the late 1990s, with dozens of commercial products coming to market. In 1998, Microsoft released its first OLAP server, Microsoft Analysis Services, which drove wide adoption of OLAP technology and moved it into the mainstream.

1.5.1 APL

Multidimensional analysis, the basis for OLAP, is not new. In fact, it goes back to 1962, with the publication of Ken Iverson's book, A Programming Language. The first computer implementation of the APL language was in the late 1960s, by IBM. APL is a mathematically defined language with multidimensional variables and elegant, if rather abstract, processing operators. It was originally intended more as a way of defining multidimensional transformations than as a practical programming language, so it did not pay attention to mundane concepts like files and printers. In the interests of a succinct notation, the operators were Greek symbols. In fact, the resulting programs were so succinct that few could predict what an APL program would do. It became known as a Write Only Language (WOL), because it was easier to rewrite a program that needed maintenance than to fix it.

In spite of inauspicious beginnings, APL did not go away. It was used in many 1970s and 1980s business applications that had similar functions to today's OLAP systems. Indeed, IBM developed an entire mainframe operating system for APL, called VSPC, and some people regarded it as the personal productivity environment of choice long before the spreadsheet made an appearance.


One of these APL-based mainframe products from the 1980s was originally called Frango, and later Fregi. It was developed by IBM in the UK, and was used for interactive, top-down planning. A PC-based descendant of Frango surfaced in the early 1990s as KPS, and the product remains on sale today as the Analyst module in Cognos Planning. This is one of several APL-based products that Cognos has built or acquired since 1999. Ironically, this APL-based module has returned home, now that IBM owns Cognos.

Even today, more than 40 years later, APL continues to be enhanced and used in new applications. It is used behind the scenes in many business applications, and has even entered the worlds of Unicode, object-oriented programming and Vista. Few, if any, other 1960s computer languages have shown such longevity.

1.5.2 Express

By 1970, a more application-oriented multidimensional product, with academic origins, had made its first appearance: Express. This, in a completely rewritten form and with a modern code base, became a widely used contemporary OLAP offering, but the original 1970s concepts still lie just below the surface. Even after 30 years, Express remains one of the major OLAP technologies, although Oracle struggled and failed to keep it up to date with the many newer client/server products.

Oracle announced in late 2000 that it would build OLAP server capabilities into Oracle9i starting in mid-2001. The second release of the Oracle9i OLAP Option included both a version of the Express engine, called the Analytic Workspace, and a new ROLAP engine. In fact, delays with the new OLAP technology and applications mean that Oracle is still selling Express and applications based on it in 2005, some 35 years after Express was first released. This means that the Express engine has lived on well into its fourth decade, and if the OLAP Option eventually becomes successful (particularly in its new guise as a super-charged materialized views engine), the renamed Express engine could be on sale into its fifth decade, making it possibly one of the longest-lived software products ever.


1.5.3 EIS

By the mid-1980s, the term EIS (Executive Information System) had been born. The idea was to provide relevant and timely management information using a new, much simpler user interface than had previously been available. This used what was then a revolutionary concept: a graphical user interface running on DOS PCs, and using touch screens or mice. For executive use, the PCs were often hidden away in custom cabinets and desks, as few senior executives of the day wanted to be seen as nerdy PC users. The first explicit EIS product was Pilot's Command Center, though there had been EIS applications implemented by IRI and Comshare earlier in the decade. This was a cooperative processing product, an architecture that would now be called client/server. Because of the limited power of mid-1980s PCs, it was very server-centric, but that approach came back into fashion again with products like EUREKA: Strategy and Holos and the Web.

1.5.4 Spreadsheets and OLAP

By the late 1980s, the spreadsheet was already becoming dominant in end-user analysis, so the first multidimensional spreadsheet appeared in the form of Compete. This was originally marketed as a very expensive specialist tool, but the vendor could not generate the volumes to stay in business, and Computer Associates acquired it, along with a number of other spreadsheet products including SuperCalc and 20/20. The main effect of CA's acquisition of Compete was that the price was slashed, the copy protection removed and the product heavily promoted. However, it was still not a success, a trend that was to be repeated with CA's other OLAP acquisitions. For a few years, the old Compete was still occasionally found, bundled into a heavily discounted bargain pack. Later, Compete formed the basis for CA's version 5 of SuperCalc, but the multidimensionality aspect of it was not promoted.

By the late 1980s, Sinper had entered the multidimensional spreadsheet world, originally with a proprietary DOS spreadsheet, and then by linking to DOS 1-2-3. It entered the Windows era by turning its (then named) TM/1 product into a multidimensional back-end server for standard Excel and 1-2-3. Slightly later, Arbor did the same thing, although its new Essbase product could then only work in client/server mode, whereas Sinper's could also work on a stand-alone PC. This approach to bringing multidimensionality to spreadsheet users has been far more popular with users.

In fact, traditional vendors of proprietary front-ends were forced to follow suit, and products like Express, Holos, Gentian, MineShare, PowerPlay, MetaCube and White Light all proudly offered highly integrated spreadsheet access to their OLAP servers. Ironically, for its first six months, Microsoft OLAP Services was one of the few OLAP servers not to have a vendor-developed spreadsheet client, as Microsoft's (very basic) offering only appeared in June 1999 in Excel 2000. However, the (then) OLAP@Work Excel add-in filled the gap, and still (under its new snappy name, Business Query MD for Excel) provided much better exploitation of the server than did Microsoft's own Excel interface. Since then there have been at least ten other third-party Excel add-ins developed for Microsoft Analysis Services, all offering capabilities not available even in Excel 2003. However, Business Objects' acquisition of Crystal Decisions has led to the phasing out of Business Query MD for Excel, to be replaced by technology from Crystal.

There was a rush of new OLAP Excel add-ins in 2004 from Business Objects, Cognos, Microsoft, MicroStrategy and Oracle. Perhaps with users disillusioned by disappointing Web capabilities, the vendors rediscovered that many numerate users would rather have their BI data displayed via a flexible Excel-based interface than in a dumb Web page or PDF. Microsoft is taking this further with PerformancePoint, whose main user interface for data entry and reporting is via Excel.


CHAPTER 2 DIFFERENCES BETWEEN OLTP AND OLAP

The main differences between OLTP and OLAP are:

1. User and System Orientation
OLTP: customer-oriented, used for transaction and query processing by clerks, clients and IT professionals.
OLAP: market-oriented, used for data analysis by knowledge workers (managers, executives, analysts).

2. Data Contents
OLTP: manages current data, very detail-oriented.
OLAP: manages large amounts of historical data, provides facilities for summarization and aggregation, and stores information at different levels of granularity to support the decision-making process.

3. Database Design
OLTP: adopts an entity-relationship (ER) model and an application-oriented database design.
OLAP: adopts a star, snowflake or fact constellation model and a subject-oriented database design.

4. View
OLTP: focuses on the current data within an enterprise or department.
OLAP: spans multiple versions of a database schema due to the evolutionary process of an organization; integrates information from many organizational locations and data stores.


CHAPTER 3 DATA WAREHOUSE

3.1 Data Warehouse

A data warehouse is a repository of an organization's electronically stored data. Data warehouses are designed to facilitate reporting and analysis. This classic definition of the data warehouse focuses on data storage. However, the means to retrieve and analyze data, to extract, transform and load data, and to manage the data dictionary are also considered essential components of a data warehousing system. Many references to data warehousing use this broader context. Thus, an expanded definition for data warehousing includes business intelligence tools, tools to extract, transform, and load data into the repository, and tools to manage and retrieve metadata.

A data warehouse provides a common data model for all data of interest regardless of the data's source. This makes it easier to report and analyze information than it would be if multiple data models were used to retrieve information such as sales invoices, order receipts, general ledger charges, etc. When users request access to large amounts of historical information for reporting purposes, a warehouse or mart should be strongly considered. Users benefit when the information is organized in an efficient manner for this type of access.

A data warehouse is often used as the basis for a decision support system. It is designed to overcome problems encountered when an organization attempts to perform strategic analysis using the same database that is used for online transaction processing (OLTP). Data warehouses are read-only, integrated databases designed to answer comparative and "what-if" questions.

OLAP is a key component of data warehousing, and OLAP Services provides essential functionality for a wide array of applications ranging from reporting to advanced decision support.


Unlike OLTP systems that store data in a highly normalized fashion, the data in the data warehouse is stored in a very denormalized manner to improve query performance.

Data warehouses often use star and snowflake schemas to provide the fastest possible response times to complex queries, and the basis for aggregations managed by OLAP tools.

Difficulties often encountered when OLTP databases are used for online analysis include the following:

Analysts do not have the technical expertise required to create ad hoc queries against the complex data structure.

Analytical queries that summarize large volumes of data adversely affect the ability of the system to respond to online transactions.

System performance when responding to complex analysis queries can be slow or unpredictable, providing inadequate support to online analytical users.

Constantly changing data interferes with the consistency of analytical information.

Security becomes more complicated when online analysis is combined with online transaction processing.

Data warehousing provides one of the keys to solving these problems, organizing data for the purpose of analysis. Data warehouses:

Can combine data from heterogeneous data sources into a single homogeneous structure.

Organize data in simplified structures for efficiency of analytical queries rather than for transaction processing.

Contain transformed data that is valid, consistent, consolidated, and formatted for analysis.

Provide stable data that represents business history.

Are updated periodically with additional data rather than frequent transactions.

Simplify security requirements.

Provide a database organized for OLAP rather than OLTP.


3.2 Data Warehousing Infrastructure

Data Warehousing is open to an almost limitless range of definitions. Simply put, Data Warehouses store an aggregation of a company's data. Data Warehouses are an important asset for organizations to maintain efficiency, profitability and competitive advantages. Organizations collect data through many sources - Online, Call Center, Sales Leads, Inventory Management. The data collected have degrees of value and business relevance. As data is collected, it is passed through a 'conveyor belt', called Data Life Cycle Management. An organization's data life cycle management policy will dictate the data warehousing design and methodology.

Fig (3.2.1) Data warehouse infrastructure


3.2.1 Data Repositories

The Data Warehouse repository is the database that stores active data of business value for an organization. The Data Warehouse modeling design is optimized for data analysis. There are variants of Data Warehouses - Data Marts and ODS. Data Marts are not physically any different from Data Warehouses. Data Marts can be thought of as smaller Data Warehouses built on a departmental rather than on a company-wide level. A Data Warehouse collects data and is the repository for historical data. Hence it is not always efficient for providing up-to-date analysis. This is where ODS, Operational Data Stores, come in. ODS are used to hold recent data before migration to the Data Warehouse. ODS are used to hold data that have a deeper history than OLTPs. Keeping large amounts of data in OLTPs can tie down computer resources and slow down processing - imagine waiting at the ATM for 10 minutes between the prompts for inputs.

The goal of Data Warehousing is to generate front-end analytics that will support business executives and operational managers. Data becomes active as soon as it is of interest to an organization. The data life cycle begins with a business need for acquiring data. Active data are referenced on a regular basis during day-to-day business operations. Over time, this data loses its importance and is accessed less often, gradually losing its business value, and ending with its archival or disposal.


Fig (3.2.2). Data Life Cycle in Enterprises

Active Data

Active data is of business use to an organization. The ease of access for business users to active data is an absolute necessity in order to run an efficient business. The simple but critical principle that all data moves through life-cycle stages is key to improving data management. By understanding how data is used and how long it must be retained, companies can develop a strategy to map usage patterns to the optimal storage media, thereby minimizing the total cost of storing data over its life cycle.

The same principles apply when data is stored in a relational database, although the challenge of managing and storing relational data is compounded by complexities inherent in data relationships. Relational databases are a major consumer of storage and are also among the most difficult to manage because they are accessed on a regular basis. Without the ability to manage relational data effectively, relative to its use and storage requirements, runaway database growth will result in increased operational costs, poor performance, and limited availability for the applications that rely on these databases. The ideal solution is to manage data stored in relational databases as part of an overall enterprise data management solution.


Inactive Data

Data are put out to pasture once they are no longer active, i.e. they are no longer needed for critical business tasks or analysis. Prior to the mid-nineties, most enterprises archived data on microfilm and tape backups.

3.2.2 Pre-Data Warehouse

The pre-Data Warehouse zone provides the data for data warehousing. Data Warehouse designers determine which data contains business value for insertion. OLTP databases are where operational data are stored. OLTP databases can reside in transactional software applications such as Enterprise Resource Planning (ERP), Supply Chain, Point of Sale, and Customer Service software. OLTPs are designed for transaction speed and accuracy.

Metadata ensures the sanctity and accuracy of data entering the data life cycle process. Metadata ensures that data has the right format and relevancy. Organizations can take preventive action to reduce the cost of the ETL stage by having a sound metadata policy. The terminology commonly used to describe metadata is "data about data".

3.2.3 Data Cleansing

Cleansing data involves consolidating data within a database by removing inconsistent data, removing duplicates and reindexing existing data in order to achieve the most accurate and concise database. Data cleansing may also include streamlining the formatting of information that has been merged from different parent databases. Data cleansing may be performed manually or through specific software programs. Data cleansing ranges from simple cleansing techniques, such as defaulting values and resetting dates, to complex cleansing, such as matching customer records and parsing address fields. Most ETL projects require a level of data cleansing that guarantees complete data and referential integrity. In short, data cleansing validates and purifies the data on its way from the source to the target.
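The simpler techniques mentioned above (streamlining formatting, defaulting values and removing duplicates) can be sketched as below. The records, the default values and the helper names `clean` and `cleanse` are assumptions for illustration; real cleansing rules depend on the source systems and are usually far more involved.

```python
# Hypothetical customer records merged from two source systems.
raw = [
    {"id": 1, "name": " Asha  Nair ", "city": "kochi", "joined": "2005-05-01"},
    {"id": 1, "name": "Asha Nair",    "city": "Kochi", "joined": "2005-05-01"},
    {"id": 2, "name": "R. Menon",     "city": None,    "joined": ""},
]

DEFAULT_CITY = "UNKNOWN"      # assumed default for missing values
DEFAULT_DATE = "1900-01-01"   # assumed placeholder for missing dates

def clean(record):
    """Normalize formatting and fill in defaults for one record."""
    city = record["city"]
    return {
        "id": record["id"],
        "name": " ".join(record["name"].split()),          # collapse stray spaces
        "city": city.strip().title() if city else DEFAULT_CITY,
        "joined": record["joined"] or DEFAULT_DATE,
    }

def cleanse(records):
    """Clean every record, then drop duplicates by id (the first one wins)."""
    seen = {}
    for rec in map(clean, records):
        seen.setdefault(rec["id"], rec)
    return list(seen.values())

cleaned = cleanse(raw)
for row in cleaned:
    print(row)
```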


Before data enters the data warehouse, the extraction, transformation and loading (ETL) process, which includes cleansing, ensures that the data passes the data quality threshold. ETL tools are also responsible for running scheduled tasks that extract data from OLTPs.

3.2.4 Front-End Analysis

The last and most critical portion of the Data Warehouse overview is the set of front-end applications that business users will use to interact with data stored in the repositories.

Data Mining is the discovery of useful patterns in data. Data mining is used for predictive analysis and classification - e.g. what is the likelihood that a customer will migrate to a competitor?

OLAP, Online Analytical Processing, is used to analyze historical data and slice the business information required. OLAP tools are often used by marketing managers. A slice of data that is useful to a marketing manager might be: how many customers between the ages of 24 and 45 who live in New York State buy over $2000 worth of groceries a month?

Reporting tools are used to provide reports on the data. Data are displayed to show relevancy to the business and keep track of key performance indicators (KPI).

Data Visualization tools are used to display data from the data repository. Often data visualization is combined with Data Mining and OLAP tools. Data visualization can allow the user to manipulate data to show relevancy and patterns.

The star schema (sometimes referred to as a star join schema), as shown in fig (3.2.3), is the simplest style of data warehouse schema. The star schema consists of a few "fact tables" (possibly only one, justifying the name) referencing any number of "dimension tables". The star schema is considered an important special case of the snowflake schema.


Fig (3.2.3) Star Schema
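As a rough illustration of the star schema, the following Python sketch builds one fact table and two dimension tables in an in-memory SQLite database and answers a marketing-style slice question like the one quoted earlier. The table names, column names and sample rows (dim_customer, dim_date, fact_sales) are invented for this example and are not taken from any real warehouse.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_customer (customer_key INTEGER PRIMARY KEY, name TEXT, age INTEGER, state TEXT);
CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, year INTEGER, month INTEGER);
-- The fact table holds a numeric measure plus one foreign key per dimension.
CREATE TABLE fact_sales (
    customer_key INTEGER REFERENCES dim_customer(customer_key),
    date_key INTEGER REFERENCES dim_date(date_key),
    amount REAL
);
""")
conn.executemany("INSERT INTO dim_customer VALUES (?, ?, ?, ?)", [
    (1, "Ann", 30, "NY"), (2, "Bob", 52, "NY"), (3, "Cy", 41, "NJ"), (4, "Di", 27, "NY")])
conn.executemany("INSERT INTO dim_date VALUES (?, ?, ?)", [(1, 2024, 1), (2, 2024, 2)])
conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?)", [
    (1, 1, 1500.0), (1, 1, 800.0),   # Ann: 2300 in January
    (2, 1, 2500.0),                  # Bob: outside the age band
    (3, 1, 3000.0),                  # Cy: outside the state
    (4, 1, 900.0), (4, 2, 2100.0),   # Di: 900 in January, 2100 in February
])

def big_spenders(conn, year, month):
    """NY customers aged 24 to 45 who spent over 2000 in the given month."""
    return conn.execute("""
        SELECT c.name, SUM(f.amount) AS total
        FROM fact_sales f
        JOIN dim_customer c ON c.customer_key = f.customer_key
        JOIN dim_date d ON d.date_key = f.date_key
        WHERE c.state = 'NY' AND c.age BETWEEN 24 AND 45
          AND d.year = ? AND d.month = ?
        GROUP BY c.customer_key, c.name
        HAVING SUM(f.amount) > 2000
        ORDER BY c.name
    """, (year, month)).fetchall()
```

Every dimension is reached from the fact table with a single join, which is what gives the schema its star shape.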

A snowflake schema, as shown in fig (3.2.4), is a logical arrangement of tables in a relational database such that the entity relationship diagram resembles a snowflake in shape. Closely related to the star schema, the snowflake schema is represented by centralized fact tables which are connected to multiple dimensions. In the snowflake schema, however, dimensions are normalized into multiple related tables, whereas the star schema's dimensions are denormalized, with each dimension represented by a single table. When the dimensions of a snowflake schema are elaborate, having multiple levels of relationships, and where child tables have multiple parent tables ("forks in the road"), a complex snowflake shape starts to emerge. The snowflaking effect only affects the dimension tables and not the fact tables.


Fig (3.2.4) Snowflake Schema
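To make the difference from the star schema concrete, here is a small Python sketch in which a product dimension is normalized into two tables. It assumes hypothetical tables dim_product, dim_category and fact_sales with made-up rows; only the dimension side changes, the fact table does not.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Snowflaked product dimension: the category attributes move to their own table.
CREATE TABLE dim_category (category_key INTEGER PRIMARY KEY, category_name TEXT);
CREATE TABLE dim_product (
    product_key INTEGER PRIMARY KEY,
    product_name TEXT,
    category_key INTEGER REFERENCES dim_category(category_key)
);
-- The fact table is not affected by snowflaking; it still points at dim_product.
CREATE TABLE fact_sales (product_key INTEGER REFERENCES dim_product(product_key), amount REAL);

INSERT INTO dim_category VALUES (1, 'Dairy'), (2, 'Bakery');
INSERT INTO dim_product VALUES (10, 'Milk', 1), (11, 'Cheese', 1), (12, 'Bread', 2);
INSERT INTO fact_sales VALUES (10, 4.0), (11, 6.0), (12, 3.0), (10, 2.0);
""")

def sales_by_category(conn):
    # Reaching the category name takes one more join than it would in a star schema.
    return conn.execute("""
        SELECT cat.category_name, SUM(f.amount)
        FROM fact_sales f
        JOIN dim_product p ON p.product_key = f.product_key
        JOIN dim_category cat ON cat.category_key = p.category_key
        GROUP BY cat.category_name
        ORDER BY cat.category_name
    """).fetchall()
```

The extra join is the usual price of the normalized layout; in return each category name is stored only once.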

The primary difference between an application database and a data warehouse is that the former is designed (and optimized) to record transactions, while the latter has to be designed (and optimized) to respond to analysis questions that are critical for your business. Application databases are OLTP (On-Line Transaction Processing) systems where every transaction has to be recorded, and super-fast at that. Consider the scenario where a bank ATM has disbursed cash to a customer but was unable to record this event in the bank records. If this started happening frequently, the bank wouldn't stay in business for long. So the banking system is designed to make sure that every transaction gets recorded within the time you stand before the ATM. This system is write-optimized, and you shouldn't complain if your analysis query (a read operation) takes a lot of time on such a system.

A Data Warehouse (DW), on the other hand, is a database (yes, you are right, it's a database) that is designed to facilitate querying and analysis. Often designed as OLAP (On-Line Analytical Processing) systems, these databases contain read-only data that can be queried and analyzed far more efficiently than your regular OLTP application databases. In this sense an OLAP system is designed to be read-optimized.

A data warehouse typically stores data in different levels of granularity or summarization, depending on the data requirements of the business. If an enterprise needs data to assist strategic planning, then only highly summarized data is required. The lower the level of granularity of data required by the enterprise, the higher the number of resources (specifically data storage) required to build the data warehouse. The different levels of summarization in order of increasing granularity are:

- Current operational data
- Historical operational data
- Aggregated data
- Metadata

The components of schema design are dimensions, keys, and fact and dimension tables.

Fact tables

Contain data that describes a specific event within a business, such as a bank transaction or product sale. Alternatively, fact tables can contain data aggregations, such as sales per month per region. Except in cases such as product or territory realignments, existing data within a fact table is not updated; new data is simply added.

Because fact tables contain the vast majority of the data stored in a data warehouse, it is important that the table structure be correct before data is loaded. Expensive table restructuring can be necessary if data required by decision support queries is missing or incorrect.

The characteristics of fact tables are:

- Many rows; possibly billions.
- Primarily numeric data; rarely character data.
- Multiple foreign keys (into dimension tables).
- Static data.


Dimension tables

Contain data used to reference the data stored in the fact table, such as product descriptions, customer names and addresses, and suppliers. Separating this verbose (typically character) information from specific events, such as the value of a sale at one point in time, makes it possible to optimize queries against the database by reducing the amount of data to be scanned in the fact table.

Dimension tables do not contain as many rows as fact tables, and dimensional data is subject to change, as when a customer's address or telephone number changes. Dimension tables are structured to permit change.

The characteristics of dimension tables are:

- Fewer rows than fact tables; possibly hundreds to thousands.
- Primarily character data.
- Multiple columns that are used to manage dimension hierarchies.
- One primary key (dimensional key).
- Updatable data.

Dimensions

Are categories of information that organize the warehouse data, such as time, geography, organization, and so on. Dimensions are usually hierarchical, in that one member may be a child of another member.

Dimensional keys

Are unique identifiers used to query data stored in the central fact table.

3.3 Data Mart

A data mart is a simple form of a data warehouse that is focused on a single subject (or functional area), such as Sales, Finance, or Marketing. Data marts are often built and controlled by a single department within an organization. Given their single-subject focus, data marts

Computer Science and Engineering Department

KMEA Engineering College|Edathala

Online Analytical Processing

24

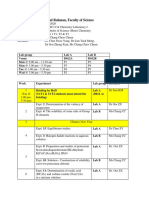

usually draw data from only a few sources. The sources could be internal operational systems, a central data warehouse, or external data. How Is It Different from a Data Warehouse? A data warehouse, unlike a data mart, deals with multiple subject areas and is typically implemented and controlled by a central organizational unit such as the corporate Information Technology (IT) group. Often, it is called a central or enterprise data warehouse. Typically, a data warehouse assembles data from multiple source systems. Nothing in these basic definitions limits the size of a data mart or the complexity of the decisionsupport data that it contains. Nevertheless, data marts are typically smaller and less complex than data warehouses; hence, they are typically easier to build and maintain. Table A-1 summarizes the basic differences between a data warehouse and a data mart. Table A-1 Differences between a Data Warehouse and a Data Mart Category Scope Subject Data Sources Size (typical) Implementation Time Data Warehouse Corporate Multiple Many 100 GB-TB+ Months to years Data Mart Line of Business (LOB) Single subject Few < 100 GB Months

Fig (3.3.1) Difference between data warehouse and data mart

3.3.1 Dependent and Independent Data Marts

There are two basic types of data marts: dependent and independent. The categorization is based primarily on the data source that feeds the data mart. Dependent data marts draw data from a central data warehouse that has already been created. Independent data marts, in contrast, are standalone systems built by drawing data directly from operational or external sources of data, or both.


The main difference between independent and dependent data marts is how you populate the data mart; that is, how you get data out of the sources and into the data mart. This step, called the Extraction, Transformation, and Loading (ETL) process, involves moving data from operational systems, filtering it, and loading it into the data mart.

With dependent data marts, this process is somewhat simplified because formatted and summarized (clean) data has already been loaded into the central data warehouse. The ETL process for dependent data marts is mostly a process of identifying the right subset of data relevant to the chosen data mart subject and moving a copy of it, perhaps in a summarized form.

With independent data marts, however, you must deal with all aspects of the ETL process, much as you do with a central data warehouse. The number of sources is likely to be smaller, and the amount of data associated with the data mart is less than for the warehouse, given the focus on a single subject.

The motivations behind the creation of these two types of data marts are also typically different. Dependent data marts are usually built to achieve improved performance and availability, better control, and lower telecommunication costs resulting from local access to data relevant to a specific department. The creation of independent data marts is often driven by the need to have a solution within a shorter time.
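The dependent case can be sketched in a few lines of Python. The example assumes the central warehouse is available as a list of already-cleaned rows; the field names (subject, region, month, amount) and the function build_dependent_mart are hypothetical and chosen only for illustration.

```python
from collections import defaultdict

# Hypothetical warehouse rows that the central ETL process has already cleaned.
warehouse_sales = [
    {"subject": "sales", "region": "East", "month": "2024-01", "amount": 120.0},
    {"subject": "sales", "region": "East", "month": "2024-01", "amount": 80.0},
    {"subject": "sales", "region": "West", "month": "2024-01", "amount": 50.0},
    {"subject": "finance", "region": "East", "month": "2024-01", "amount": 999.0},
]

def build_dependent_mart(rows, subject):
    """Copy the rows for one subject and summarize them by region and month."""
    totals = defaultdict(float)
    for row in rows:
        if row["subject"] == subject:   # identify the relevant subset
            totals[(row["region"], row["month"])] += row["amount"]
    return dict(totals)

sales_mart = build_dependent_mart(warehouse_sales, "sales")
```

No cleansing logic appears here because the warehouse is assumed to have done it already; an independent data mart would have to add those steps itself.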

3.3.2 Steps in Implementing a Data Mart

Simply stated, the major steps in implementing a data mart are to design the schema, construct the physical storage, populate the data mart with data from source systems, access it to make informed decisions, and manage it over time.

1. Designing

The design step is first in the data mart process. This step covers all of the tasks from initiating the request for a data mart through gathering information about the requirements, and developing the logical and physical design of the data mart. The design step involves the following tasks:


- Gathering the business and technical requirements
- Identifying data sources
- Selecting the appropriate subset of data
- Designing the logical and physical structure of the data mart

2. Constructing

This step includes creating the physical database and the logical structures associated with the data mart to provide fast and efficient access to the data. This step involves the following tasks:

- Creating the physical database and storage structures, such as table spaces, associated with the data mart
- Creating the schema objects, such as tables and indexes, defined in the design step
- Determining how best to set up the tables and the access structures

3. Populating

The populating step covers all of the tasks related to getting the data from the source, cleaning it up, modifying it to the right format and level of detail, and moving it into the data mart. More formally stated, the populating step involves the following tasks:

- Mapping data sources to target data structures
- Extracting data
- Cleansing and transforming the data
- Loading data into the data mart
- Creating and storing metadata

4. Accessing

The accessing step involves putting the data to use: querying the data, analyzing it, creating reports, charts, and graphs, and publishing these. Typically, the end user uses a graphical front-end tool to submit queries to the database and display the results of the queries. The accessing step requires that you perform the following tasks:


- Set up an intermediate layer for the front-end tool to use. This layer, the metalayer, translates database structures and object names into business terms, so that the end user can interact with the data mart using terms that relate to the business function.
- Maintain and manage these business interfaces.
- Set up and manage database structures, like summarized tables, that help queries submitted through the front-end tool execute quickly and efficiently.

5. Managing

This step involves managing the data mart over its lifetime. In this step, you perform management tasks such as the following:

- Providing secure access to the data
- Managing the growth of the data
- Optimizing the system for better performance
- Ensuring the availability of data even with system failures


CHAPTER 4 ETL TOOLS

ETL tools are meant to extract, transform and load data into the data warehouse for decision making. Before the evolution of ETL tools, the ETL process described above was done manually, using SQL code written by programmers. This task was tedious and cumbersome in many cases, since it involved many resources, complex coding and more work hours. On top of that, maintaining the code posed a great challenge for the programmers. These difficulties are eliminated by ETL tools: compared to the old method, they are very powerful and offer many advantages at all stages of the ETL process, from extraction, data cleansing and data profiling through transformation, debugging and loading into the data warehouse. There are a number of ETL tools available in the market to process the data according to business and technical requirements.

Fig (4.1) ETL


4.1 ETL Concepts

ETL (extraction, transformation, and loading) refers to the methods involved in accessing and manipulating source data and loading it into a target database. The first step in the ETL process is mapping the data between the source systems and the target database (data warehouse or data mart). The second step is cleansing the source data in the staging area. The third step is transforming the cleansed source data and then loading it into the target system.

Commonly used ETL tools:

- Informatica
- Datastage
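The three steps can be sketched in plain Python without any ETL tool. Everything named below is an assumption made for the example: the source field names (cust_nm, amt, cntry), the target column names, and the use of simple lists to stand in for the staging area and the target database.

```python
# Step 1: map source field names to target column names (hypothetical names).
FIELD_MAP = {"cust_nm": "customer_name", "amt": "amount", "cntry": "country"}

def map_fields(source_row):
    return {target: source_row.get(source) for source, target in FIELD_MAP.items()}

# Step 2: cleanse in the staging area; here, trim names and drop rows with no amount.
def cleanse(staged_rows):
    cleaned = []
    for row in staged_rows:
        if row["amount"] is None:
            continue
        row = dict(row)
        row["customer_name"] = (row["customer_name"] or "").strip()
        cleaned.append(row)
    return cleaned

# Step 3: transform the cleansed rows and load them into the target (a list here).
def transform_and_load(cleaned_rows, target):
    for row in cleaned_rows:
        target.append({
            **row,
            "amount": round(float(row["amount"]), 2),
            "country": (row["country"] or "UNKNOWN").upper(),
        })
    return target

source = [
    {"cust_nm": " Ann ", "amt": "10.5", "cntry": "in"},
    {"cust_nm": "Bob", "amt": None, "cntry": "us"},
]
staging = [map_fields(r) for r in source]
warehouse = transform_and_load(cleanse(staging), [])
```

Silently dropping the row with the missing amount keeps the sketch short; a production process would normally record such rejects rather than discard them.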

4.2 ETL Data Quality

Data Quality in an ETL project involves the handling of data in movement. A normal data quality effort involves identifying problems in a data store and fixing them. ETL data quality involves identifying problems in data moving through the ETL process and finding some way to handle those problems. The ideal solution is to fix the problem in the source system. This is usually not realistic:

- Not all data quality problems are discovered during the ETL build. The ETL jobs need to be robust enough to detect new data quality problems in production.
- Not all ETL projects have the funding, scope or authority to fix problems in source systems.
- Not all source systems have the same data quality rules as an ETL process. A data quality issue in ETL may not be one in the source system.

4.2.1 Detecting Data Quality Problems

An ETL project needs to detect data quality problems during the analysis phase of the project and anticipate new data quality problems after the implementation of the ETL processes.


The best way to handle data quality problems in the ETL process is to discover them during the analysis phase. This is why many ETL vendors offer data profiling solutions. Knowing the problems up front lets the team build quality controls into the ETL processes. ETL processes can detect data quality problems by checking each field for common problems such as null values, invalid dates and out-of-range values. The business analysts can apply additional data quality checks for combinations of data that fall outside the valid business rules. The ETL process will then run these data quality checks on all data moving through it.
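A minimal sketch of such field-level checks in Python follows. The field names (customer_id, order_date, quantity), the date format and the allowed quantity range are assumptions made for the example, not rules from any particular ETL tool.

```python
from datetime import datetime

def check_row(row):
    """Return a list of data quality problems found in one row."""
    problems = []
    # Null check on the fields assumed to be mandatory.
    for field in ("customer_id", "order_date", "quantity"):
        if row.get(field) in (None, ""):
            problems.append(f"{field}: null")
    # Invalid date check; the ISO format is an assumption of this sketch.
    date_text = row.get("order_date")
    if date_text:
        try:
            datetime.strptime(date_text, "%Y-%m-%d")
        except ValueError:
            problems.append("order_date: invalid date")
    # Assumed business rule: quantity must be between 1 and 1000.
    quantity = row.get("quantity")
    if isinstance(quantity, int) and not 1 <= quantity <= 1000:
        problems.append("quantity: out of range")
    return problems
```

Returning a list of problems, rather than a simple pass or fail, leaves the keep-or-drop decision to a later step.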

4.2.2 Handling Data Quality Problems

The core decision when handling a data quality problem is whether the data in movement is to be kept or dropped. This depends on whether it is fit for use at the destination. On the one hand, the higher the keep rate, the more complete the information at the target. On the other hand, garbage in, garbage out: a high keep rate can result in inaccurate data reaching the target. An ETL process should seek to attain a high keep rate and to protect the value of that data via data cleansing techniques.

Data Cleansing in ETL

Data cleansing ranges from simple techniques, such as defaulting values and resetting dates, to complex ones, such as matching customer records and parsing address fields. Most ETL projects require a level of data cleansing that ensures complete data and referential integrity. In short, data cleansing validates and corrects the data as it moves from the source to the target.
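The two simple techniques named above, defaulting values and resetting dates, might look like this in Python. The default country, the date bounds and the choice to reset implausible dates to None are all assumptions made for this sketch.

```python
from datetime import date

DEFAULT_COUNTRY = "UNKNOWN"          # assumed default value for the example
EARLIEST_VALID = date(1900, 1, 1)    # assumed lower bound for plausible dates

def cleanse_record(record, today):
    """Return a cleansed copy of one record; the rules are illustrative only."""
    cleaned = dict(record)
    # Defaulting values: fill a missing country instead of rejecting the row.
    if not cleaned.get("country"):
        cleaned["country"] = DEFAULT_COUNTRY
    # Resetting dates: a date outside the plausible window is reset to None.
    signup = cleaned.get("signup_date")
    if signup is not None and not EARLIEST_VALID <= signup <= today:
        cleaned["signup_date"] = None
    return cleaned
```

Both rules keep the row, which supports a high keep rate while preventing obviously wrong values from reaching the target.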

ETL Data Auditing

Data auditing in ETL is a form of data quality control that works at a high level to ensure the number of rows going into an ETL process matches the number of rows being delivered, taking into account any valid transformation that changes row counts. Many ETL processes can have row leakage caused by rejections, transformation errors or data integrity mismatches. Good ETL design and development standards will ensure that rows leaking out of the ETL process are trapped and reported on. Common causes of row leakage include a null value in a field that was not supposed to contain nulls, a lookup against a table that does not have a matching row, and an insert into a database table that is rejected by the database. The ETL data audit controls should ensure that all rows leaked from an ETL process are trapped. They should also provide volume history reporting that compares input row counts to delivered row counts.
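A row-count audit of this kind can be sketched as follows. The function names, the LOOKUP reference table and the sample rows are hypothetical; the point is only that each input row ends up either delivered or trapped with a reason.

```python
def run_with_audit(rows, transform):
    """Apply transform to each row, trapping rejects instead of losing them."""
    delivered, rejected = [], []
    for row in rows:
        try:
            delivered.append(transform(row))
        except (KeyError, ValueError) as error:
            rejected.append({"row": row, "reason": repr(error)})
    audit = {
        "input": len(rows),
        "delivered": len(delivered),
        "rejected": len(rejected),
    }
    # Every input row must be accounted for as either delivered or rejected.
    audit["balanced"] = audit["input"] == audit["delivered"] + audit["rejected"]
    return delivered, rejected, audit

LOOKUP = {"IN": "India", "US": "United States"}   # hypothetical reference table

def to_target(row):
    if row["amount"] is None:
        raise ValueError("amount must not be null")      # null in a non-null field
    # A code missing from LOOKUP raises KeyError: a failed lookup.
    return {"country": LOOKUP[row["code"]], "amount": row["amount"]}

rows = [{"code": "IN", "amount": 5}, {"code": "XX", "amount": 1}, {"code": "US", "amount": None}]
delivered, rejected, audit = run_with_audit(rows, to_target)
```

Storing the audit dictionary for each run would give the volume history that the text describes.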


CHAPTER 5 CLASSIFICATIONS OF OLAP

In the OLAP world, there are mainly two different types: Multidimensional OLAP (MOLAP) and Relational OLAP (ROLAP).

5.1 MOLAP

This is the more traditional way of OLAP analysis. In MOLAP, data is stored in a multidimensional cube. The storage is not in the relational database, but in proprietary formats. Multidimensional OLAP is one of the oldest segments of the OLAP market. The business problem MOLAP addresses is the need to compare, track, analyze and forecast high level budgets based on allocation scenarios derived from actual numbers. The first forays into data warehousing were led by the MOLAP vendors who created special purpose databases that provided a cube-like structure for performing data analysis.

MOLAP tools restructure the source data so that it can be accessed, summarized, filtered and retrieved almost instantaneously. As a general rule, MOLAP tools provide a robust solution to data warehousing problems. Administration, distribution, metadata creation and deployment are all controlled from a central point. Deployment and distribution can be achieved over the Web and with client/server models.

When MOLAP Tools Bring Value

- Need to process information with consistent response time regardless of the level of summarization or calculations selected.
- Need to avoid many of the complexities of creating a relational database to store data for analysis.
- Need the fastest possible performance.


5.1.1 Advantages

- Excellent performance: MOLAP cubes are built for fast data retrieval, and are optimal for slicing and dicing operations.
- Can perform complex calculations: All calculations have been pre-generated when the cube is created. Hence, complex calculations are not only doable, but they return quickly.

5.1.2 Disadvantages

- Limited in the amount of data it can handle: Because all calculations are performed when the cube is built, it is not possible to include a large amount of data in the cube itself. This is not to say that the data in the cube cannot be derived from a large amount of data. Indeed, this is possible. But in this case, only summary-level information will be included in the cube itself.
- Requires additional investment: Cube technology is often proprietary and does not already exist in the organization. Therefore, to adopt MOLAP technology, chances are additional investments in human and capital resources are needed.
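The trade-off between fast retrieval and cube size can be seen in a toy pre-aggregation written in Python. Real MOLAP servers use proprietary storage formats; the dictionary-based cube, the ALL marker and the sample facts below are simplifications assumed for this sketch.

```python
from itertools import product

ALL = "*"  # marker meaning "aggregated over this dimension"

def build_cube(facts, dimensions, measure):
    """Pre-compute the sum of `measure` for every combination of dimension
    values, including the roll-ups where a dimension is replaced by ALL."""
    cube = {}
    for fact in facts:
        # Each fact contributes to 2 ** len(dimensions) cells.
        choices = [(fact[d], ALL) for d in dimensions]
        for cell in product(*choices):
            cube[cell] = cube.get(cell, 0) + fact[measure]
    return cube

facts = [
    {"region": "East", "year": 2023, "sales": 10},
    {"region": "East", "year": 2024, "sales": 20},
    {"region": "West", "year": 2024, "sales": 5},
]
cube = build_cube(facts, ["region", "year"], "sales")

# Any slice or roll-up is now a single dictionary lookup.
east_total = cube[("East", ALL)]
total_2024 = cube[(ALL, 2024)]
```

Three facts already produce eight cells here, and the cell count grows quickly with each added dimension, which is one way to see why only summary-level data tends to fit in the cube.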

5.2 ROLAP

This methodology relies on manipulating the data stored in the relational database to give the appearance of traditional OLAP's slicing and dicing functionality. In essence, each action of slicing and dicing is equivalent to adding a "WHERE" clause to the SQL statement. As data warehouse sizes have grown, users have come to realize that they cannot store all of the information that they need in MOLAP databases. The business problem that ROLAP addresses is the need to analyze massive volumes of data without having to be a systems or software expert. Relational OLAP databases seek to resolve this dilemma by providing a multidimensional front end that creates queries to process information in a relational format. These tools provide the ability to transform two-dimensional relational data into multidimensional information. Due to the complexity and size of ROLAP implementations, the tools provide a robust set of functions for metadata creation, administration and deployment. The focus of these tools is to provide administrators with the ability to optimize system performance and generate maximum analytical throughput and performance for users. All of the ROLAP vendors provide the ability to deploy their solutions via the Web or within a multitier client/server environment.
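The remark that each slice or dice adds a "WHERE" condition can be shown with a small query builder in Python. The sales table, its columns and the slice_query helper are invented for the example; an actual ROLAP tool generates far more elaborate SQL.

```python
import sqlite3

def slice_query(measure, group_by, filters):
    """Build a parameterized SQL statement in which every slice/dice filter
    becomes one WHERE condition. Column names come from trusted code here,
    not from user input."""
    sql = f"SELECT {group_by}, SUM({measure}) FROM sales"
    if filters:
        sql += " WHERE " + " AND ".join(f"{col} = ?" for col in filters)
    sql += f" GROUP BY {group_by} ORDER BY {group_by}"
    return sql, list(filters.values())

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, year INTEGER, product TEXT, amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?, ?, ?)", [
    ("East", 2023, "Milk", 10.0), ("East", 2024, "Milk", 20.0),
    ("West", 2024, "Milk", 5.0), ("West", 2024, "Bread", 7.0),
])

# Slice on year = 2024, then dice further on product = 'Milk'.
sql, params = slice_query("amount", "region", {"year": 2024, "product": "Milk"})
result = conn.execute(sql, params).fetchall()
```

Unlike a pre-built cube, nothing is pre-computed: the database does the aggregation at query time, which is why response time depends on the size of the underlying tables.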

5.2.1 Advantages

- Can handle large amounts of data: The data size limitation of ROLAP technology is the limitation on data size of the underlying relational database. In other words, ROLAP itself places no limitation on the amount of data.
- Can leverage functionalities inherent in the relational database: Relational databases often already come with a host of functionalities. ROLAP technologies, since they sit on top of the relational database, can therefore leverage these functionalities.

5.2.2 Disadvantages

- Performance can be slow: Because each ROLAP report is essentially a SQL query (or multiple SQL queries) in the relational database, the query time can be long if the underlying data size is large.
- Because ROLAP technology mainly relies on generating SQL statements to query the relational database, and SQL statements do not fit all needs (for example, it is difficult to perform complex calculations using SQL), ROLAP technologies are traditionally limited by what SQL can do. ROLAP vendors have mitigated this risk by building into the tool out-of-the-box complex functions as well as the ability to allow users to define their own functions.


CHAPTER 6 APPLICATIONS OF OLAP

OLAP is Fast Analysis of Shared Multidimensional Information (FASMI). There are many applications where this approach is relevant, and this section describes the characteristics of some of them. In an increasing number of cases, specialist OLAP applications have been pre-built and you can buy a solution that only needs limited customizing; in others, a general-purpose OLAP tool can be used. A general-purpose tool will usually be versatile enough to be used for many applications, but there may be much more application development required for each. The overall software costs should be lower, and skills are transferable, but implementation costs may rise and end-users may get less ad hoc flexibility if a more technical product is used. In general, it is probably better to have a general-purpose product which can be used for multiple applications, but some applications, such as financial reporting, are sufficiently complex that it may be better to use a pre-built application, and there are several available.

OLAP applications have been most commonly used in the financial and marketing areas, but as we show here, their uses do extend to other functions. Data-rich industries have been the most typical users (consumer goods, retail, financial services and transport), for the obvious reason that they had large quantities of good quality internal and external data available, to which they needed to add value. However, there is also scope to use OLAP technology in other industries. The applications will often be smaller, because of the lower volumes of data available, which can open up a wider choice of products (because some products cannot cope with very large data volumes).

6.1 Marketing And Sales Analysis

Most commercial companies require this application, and most products are capable of handling it to some degree. However, large-scale versions of this application occur in three industries, each with its own peculiarities:

- Consumer goods industries often have large numbers of products and outlets, and a high rate of change of both. They usually analyze data monthly, but sometimes it may go down to weekly or, very occasionally, daily. There are usually a number of dimensions, none especially large (rarely over 100,000). Data is often very sparse because of the number of dimensions. Because of the competitiveness of these industries, data is often analyzed using more sophisticated calculations than in other industries. Often, the most suitable technology for these applications is one of the hybrid OLAPs, which combine high analytical functionality with reasonably large data capacity.
- Retailers, thanks to EPOS data and loyalty cards, now have the potential to analyze huge amounts of data. Large retailers could have over 100,000 products (SKUs) and hundreds of branches. They often go down to weekly or daily level, and may sometimes track spending by individual customers. They may even track sales by time of day. The data is not usually very sparse, unless customer level detail is tracked. Relatively low analytical functionality is usually needed. Sometimes, the volumes are so large that a ROLAP solution is required, and this is certainly true of applications where individual private consumers are tracked.
- The financial services industry (insurance, banks etc.) is a relatively new user of OLAP technology for sales analysis. With an increasing need for product and customer profitability, these companies are now sometimes analyzing data down to individual customer level, which means that the largest dimension may have millions of members. Because of the need to monitor a wide variety of risk factors, there may be large numbers of attributes and dimensions, often with very flat hierarchies.

6.2 Click Stream Analysis

This is one of the latest OLAP applications. Commercial Web sites generate gigabytes of data a day that describe every action made by every visitor to the site. No bricks-and-mortar retailer has the same level of detail available about how visitors browse the offerings, the route they take and even where they abandon transactions. A large site has an almost impossible volume of data to analyze, and a multidimensional framework is possibly the best way of making sense of it. There are many dimensions to this analysis, including where the visitors came from, the time of day, the route they take through the site, whether or not they started or completed a transaction, and any demographic data about customer visitors.

The Web site should not be viewed in isolation. It is only one facet of an organization's business, and ideally, the Web statistics should be combined with other business data, including product profitability, customer history and financial information. OLAP is an ideal way of bringing these conventional and new forms of data together. This would allow, for instance, Web sites to be targeted not simply to maximize transactions, but to generate profitable business and to appeal to customers likely to create such business. OLAP can also be used to assist in personalizing Web sites.

Many of the issues with click stream analysis come long before the OLAP tool. The biggest issue is to correctly identify real user sessions, as opposed to hits. This means eliminating the many crawler bots that are constantly searching and indexing the Web, and then grouping sets of hits that constitute a session. This cannot be done by IP address alone, as Web proxies and NAT (network address translation) mask the true client IP address, so techniques such as session cookies must be used in the many cases where surfers do not identify themselves by other means. Indeed, vendors such as Visual Insights charge much more for upgrades to the data capture and conversion features of their products than they do for the reporting and analysis components, even though the latter are much more visible.
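The session identification step can be sketched in Python as below. The 30-minute inactivity timeout, the user-agent substrings used to spot crawlers and the shape of a hit record are all assumptions for illustration; real bot detection is considerably more involved.

```python
SESSION_GAP = 30 * 60                        # assumed inactivity timeout, in seconds
BOT_MARKERS = ("bot", "crawler", "spider")   # crude, illustrative crawler filter

def sessionize(hits):
    """Group hits into sessions per cookie, skipping obvious crawlers.
    Each hit is a dict with 'cookie', 'time' (seconds) and 'agent'."""
    sessions = {}   # cookie -> list of sessions, each session a list of hits
    for hit in sorted(hits, key=lambda h: h["time"]):
        if any(marker in hit["agent"].lower() for marker in BOT_MARKERS):
            continue
        user_sessions = sessions.setdefault(hit["cookie"], [])
        if user_sessions and hit["time"] - user_sessions[-1][-1]["time"] <= SESSION_GAP:
            user_sessions[-1].append(hit)    # continue the current session
        else:
            user_sessions.append([hit])      # start a new session
    return sessions

hits = [
    {"cookie": "a", "time": 0, "agent": "Mozilla/5.0"},
    {"cookie": "a", "time": 600, "agent": "Mozilla/5.0"},
    {"cookie": "a", "time": 5000, "agent": "Mozilla/5.0"},
    {"cookie": "b", "time": 100, "agent": "ExampleBot/1.0"},
    {"cookie": "c", "time": 200, "agent": "Mozilla/5.0"},
]
sessions = sessionize(hits)
```

Grouping by cookie rather than by IP address follows the reasoning in the text: proxies and NAT would otherwise merge unrelated visitors into one session.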

6.3 Database Marketing

This is a specialized marketing application that is not normally thought of as an OLAP application, but is now taking advantage of multidimensional analysis, combined with other statistical and data mining technologies. The purpose of the application is to determine who are the best customers for targeted promotions for particular products or services based on the disparate information from various different systems.


Database marketing professionals aim to:

- Determine who the preferred customers are, based on their purchase of profitable products. This can be done with brute force data mining techniques (which are slow and can be hard to interpret), or by experienced business users investigating hunches using OLAP cubes (which is quicker and easier).
- Work to build loyalty packages for preferred customers via correct offerings. Once the preferred customers have been identified, look at their product mix and buying profile to see if there are denser clusters of product purchases over particular time periods. Again, this is much easier in a multidimensional environment. These can then form the basis for special offers to increase the loyalty of profitable customers.

If these goals are met, both parties profit. The customers will have a company that knows what they want and provides it. The company will have loyal customers that generate sufficient revenue and profits to continue a viable business.

Database marketing specialists try to model (using statistical or data mining techniques) which pieces of information are most relevant for determining likelihood of subsequent purchases, and how to weight their importance. In the past, pure marketers have looked for triggers, which works, but only in one dimension. But a well established company may have hundreds of pieces of information about customers, plus years of transaction data, so multidimensional structures are a great way to investigate relationships quickly, and narrow down the data which should be considered for modeling.

Once this is done, the customers can be scored using the weighted combination of variables which compose the model. A measure can then be created, and cubes set up which mix and match across multidimensional variables to determine the optimal product mix for customers. The users can determine the best product mix to market to the right customers based on segments created from a combination of the product scores, the several demographic dimensions, and the transactional data in aggregate.

Finally, in a more simplistic setting, the users can break the world into segments based on combinations of dimensions that are relevant to targeting. They can then calculate a return on investment on these combinations to determine which segments have been profitable in the past, and which have not. Mailings can then be made only to those profitable segments. Products like Express allow the users to fine tune the dimensions quickly to build one-off promotions, determine how to structure profitable combinations of dimensions into segments, and rank them in order of desirability.
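The scoring and segment ROI calculations can be written out as a short Python sketch. The weights, variable names, segment labels and campaign figures are hypothetical, and ROI is taken here as (revenue - cost) / cost, which is one common definition rather than one stated in the text.

```python
# Hypothetical model weights; in practice they would come from a statistical model.
WEIGHTS = {"recency": 0.5, "frequency": 0.3, "spend": 0.2}

def score(customer):
    """Weighted combination of variables assumed to be normalized to 0..1."""
    return sum(WEIGHTS[name] * customer[name] for name in WEIGHTS)

def segment_roi(campaigns):
    """Return on investment per segment: (revenue - cost) / cost."""
    totals = {}
    for c in campaigns:
        revenue, cost = totals.get(c["segment"], (0.0, 0.0))
        totals[c["segment"]] = (revenue + c["revenue"], cost + c["cost"])
    return {seg: (rev - cost) / cost for seg, (rev, cost) in totals.items()}

campaigns = [
    {"segment": "urban-young", "revenue": 300.0, "cost": 100.0},
    {"segment": "urban-young", "revenue": 100.0, "cost": 100.0},
    {"segment": "rural-senior", "revenue": 50.0, "cost": 100.0},
]
roi = segment_roi(campaigns)

# Mail only the segments that were profitable in the past.
profitable = sorted(seg for seg, value in roi.items() if value > 0)
```

In a cube, the segment label would itself be a combination of dimension members, so the same calculation could be repeated for any grouping the user chooses.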

6.4 Financial Reporting And Consolidation

Every medium and large organization has onerous responsibilities for producing financial reports for internal (management) consumption. Publicly quoted companies or public sector bodies also have to produce other, legally required, reports. Accountants and financial analysts were early adopters of multidimensional software. Even the simplest financial consolidation consists of at least three dimensions. It must have a chart of accounts (general OLAP tools often refer to these as facts or measures, but to accountants they will always be accounts), at least one organization structure, and time. Usually it is necessary to compare different versions of data, such as actual, budget or forecast. This makes the model four-dimensional. Often line-of-business segmentation or product line analysis can add a fifth or sixth dimension. Even back in the 1970s, when consolidation systems were typically run on time-sharing mainframe computers, the dedicated consolidation products typically had a four-or-more-dimensional feel to them. Several of today's OLAP tools can trace their roots directly back to this ancestry, and many more have inherited design attributes from these early products.

To address this specific market, certain vendors have developed specialist products. The market leader in this segment is Hyperion Solutions. Other players include Cartesis, Geac, Longview Khalix, and SAS Institute, but many smaller players also supply pre-built applications for financial reporting. In addition to these specialist products, there is a school of thought that says that these types of problems can be solved by building extra functionality on top of a generic OLAP tool.
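To make the four-dimensional model concrete, the following Python sketch stores each value in a cell keyed by account, entity, period and version, rolls the cells up an organization structure, and compares actual against budget. The entity names and the `PARENT` hierarchy are hypothetical, and the sketch deliberately ignores real consolidation concerns such as currency translation and intercompany eliminations.

```python
from collections import defaultdict

# Hypothetical organization structure: child entity -> parent entity.
PARENT = {"UK": "Europe", "France": "Europe", "Europe": "Group", "USA": "Group"}


def consolidate(cells):
    """Roll leaf-level cells up the organization hierarchy.

    `cells` maps (account, entity, period, version) -> amount.
    The result also holds a total for every ancestor entity.
    """
    cube = defaultdict(float)
    for (account, entity, period, version), amount in cells.items():
        node = entity
        while node is not None:
            cube[(account, node, period, version)] += amount
            node = PARENT.get(node)  # None once the top of the tree is passed
    return dict(cube)


def variance(cube, account, entity, period):
    """Actual minus budget for one account, entity and period."""
    actual = cube[(account, entity, period, "actual")]
    budget = cube[(account, entity, period, "budget")]
    return actual - budget
```

Adding a line-of-business or product dimension would simply extend the key tuple, which is why such models grow from four to five or six dimensions so easily.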

6.5 Management Reporting

In most organizations, management reporting is quite distinct from formal financial reporting. It will usually have more emphasis on the P&L and possibly cash flow, and less on the balance sheet. It will probably be done more often, usually monthly rather than annually and quarterly. There will be less detail but more analysis. More users will be interested in viewing and analyzing the results. The emphasis is on faster rather than more accurate reporting, and there may be regular changes to the reporting requirements. Users of OLAP based systems consistently. Management reporting usually involves the calculation of numerous business ratios, comparing performance against history and budget. There is also advantage to be gained from comparing product groups, channels or markets against each other. Sophisticated exception detection is important here, because the whole point of management reporting is to manage the business by taking decisions. The new Microsoft OLAP Services product, and the many new client tools and applications being developed for it, will certainly drive down per-seat prices for general-purpose management reporting applications, so that it will be economically possible to deploy good solutions to many more users.
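As a rough illustration of ratio calculation and exception detection, the Python sketch below computes one business ratio and flags product groups whose actual result deviates from budget by more than a chosen tolerance. The 10% threshold and the field names (`group`, `actual`, `budget`) are assumptions made for the example only.

```python
def gross_margin(revenue, cost_of_sales):
    """Gross margin as a fraction of revenue."""
    return (revenue - cost_of_sales) / revenue


def exceptions(rows, threshold=0.10):
    """Flag rows whose actual value deviates from budget by more than `threshold`.

    Each row is a dict with the keys "group", "actual" and "budget".
    Returns (group, relative variance) pairs in input order.
    """
    flagged = []
    for row in rows:
        relative = (row["actual"] - row["budget"]) / row["budget"]
        if abs(relative) > threshold:
            flagged.append((row["group"], round(relative, 4)))
    return flagged
```

Reporting only the flagged rows keeps attention on the figures that call for a decision, rather than on the full grid of numbers.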

6.6 EIS

EIS is one branch of management reporting. The term became popular in the mid-1980s, when it was defined to mean Executive Information Systems; some people also used the term ESS (Executive Support System). Since then, the original concept has been discredited, as the early systems were very proprietary, expensive and hard to maintain. With this proliferation of descriptions, the meaning of the term is now irretrievably blurred. In essence, an EIS is a more highly customized, easier-to-use management reporting system, but it is probably now better recognized as an attempt to provide intuitive ease of use to those managers who do not have either a computer background or much patience. There is no reason why all users of an OLAP-based management reporting system should not get consistently fast performance, great ease of use, reliability and flexibility, not just top executives, who will probably use it much less than mid-level managers.


The basic philosophy of EIS was that what gets reported gets managed, so if executives could have fast, easy access to a number of key performance indicators (KPIs) and critical success factors (CSFs), they would be able to manage their organizations better. But there is little evidence that this worked for the buyers, and it certainly did not work for the software vendors who specialized in this field, most of which suffered from very poor financial performance.

6.7 Profitability Analysis

This is an application which is growing in importance. Even highly profitable organizations ought to know where the profits are coming from; less profitable organizations have to know where to cut back. Profitability analysis is important in setting prices (and discounts), deciding on promotional activities, selecting areas for investment or divestment, and anticipating competitive pressures. Decisions in these areas are made every day by many individuals in large organizations, and their decisions will be less effective if they are not well informed about the differing levels of profitability of the company's products and customers. Profitability figures may be used to bias actions, by basing remuneration on profitability goals rather than revenue or volume.

One popular way to assign costs to the right products or services is to use activity-based costing (ABC). This is much more scientific than simply allocating overhead costs in proportion to revenues or floor space. It attempts to measure resources that are consumed by activities, in terms of cost drivers. Typically costs are grouped into cost pools, which are then applied to products or customers using cost drivers, which must be measured. Some cost drivers may be clearly based on the volume of activities; others may not be so obvious. They may, for example, be connected with the introduction of new products or suppliers. Others may be connected with the complexity of the organization (the variety of customers, products, suppliers, production facilities, markets, etc.). There are also infrastructure-sustaining costs that cannot realistically be applied to activities. Even ignoring these, it is likely that the costs of supplying the least profitable customers or products exceed the revenues they generate. If these are known, the company can make changes to prices or other factors to remedy the situation, possibly by withdrawing from some markets, dropping some products or declining to bid for certain contracts.

There are specialist ABC products on the market, and these have many FASMI characteristics. It is also possible to build ABC applications in OLAP tools, although the application functionality may be less than could be achieved through the use of a good specialist tool.
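The allocation of cost pools through cost drivers can be sketched as follows. This is a minimal illustration, assuming one driver per pool and made-up pool and product names; a real ABC model would have many more pools and would treat infrastructure-sustaining costs separately.

```python
def allocate(cost_pools, driver_volumes):
    """Apply each cost pool to products in proportion to its cost driver.

    cost_pools:     pool name -> total cost of the pool
    driver_volumes: pool name -> {product: measured driver units}
    Returns product -> total allocated cost.
    """
    allocated = {}
    for pool, total in cost_pools.items():
        volumes = driver_volumes[pool]
        rate = total / sum(volumes.values())  # cost per driver unit
        for product, units in volumes.items():
            allocated[product] = allocated.get(product, 0.0) + rate * units
    return allocated


def unprofitable(revenues, allocated):
    """Products whose allocated cost exceeds the revenue they generate."""
    return sorted(p for p in revenues if allocated.get(p, 0.0) > revenues[p])
```

With a pool of 1000 for order handling driven by order counts and a pool of 600 for machine setup driven by setup counts, a low-volume product that needs many setups can turn out to cost more than it earns, which is exactly the kind of result that allocation by revenue would hide.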

6.8 Quality Analysis

Although quality improvement programs are less in vogue than they were in the early 1990s, the need for consistent quality and reliability in goods and services is as important as ever. The measures should be objective and customer-focused rather than producer-focused. The systems are just as relevant in service organizations and the public sector. Indeed, many public sector service organizations have specific service targets. These systems are used not just to monitor an organization's own output, but also that of its suppliers. There may, for example, be service level agreements that affect contract extensions and payments. Quality systems can often involve multidimensional data if they monitor numeric measures across different production facilities, products or services, time, locations and customers. Many of the measures will be non-financial, but they may be just as important as traditional financial measures in forming a balanced view of the organization. As with financial measures, they may need analyzing over time and across the functions of the organization. Many organizations are committed to continuous improvement, which requires that there be formal measures that are quantifiable and tracked over long periods. OLAP tools provide an excellent way of doing this, and of spotting disturbing trends before they become too serious.
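One simple way to spot a disturbing trend is to flag any facility whose measure has worsened for several consecutive periods. The Python sketch below does this for a defect rate per production facility; the rule (three consecutive increases) and the facility names are illustrative assumptions, not a method prescribed by any OLAP tool.

```python
def worsening(series, periods=3):
    """True if the last `periods` changes in the series are all increases."""
    tail = series[-(periods + 1):]
    if len(tail) < periods + 1:
        return False  # not enough history to judge
    return all(earlier < later for earlier, later in zip(tail, tail[1:]))


def flag_facilities(defect_rates, periods=3):
    """Return the facilities whose defect rate has risen `periods` times in a row.

    defect_rates maps facility name -> list of rates ordered by time.
    """
    return sorted(name for name, series in defect_rates.items()
                  if worsening(series, periods))
```

The same check could be run per product, location or customer by changing the key of the input dictionary, which mirrors how a multidimensional tool lets the analyst pivot the same measure across dimensions.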


CHAPTER 7 FUTURE GAZING AND CONCLUSION

What prevents the convergence of functionality of OLAP and OLTP tools, given that both are business intelligence tools with data retrieval and analysis as common areas? One significant difference is the role of time. In an OLTP tool, transactions are logged in real time because the business needs to monitor certain parameters regularly, such as inventory and production processes. OLAP tools are more concerned with historical data, not only within a business process but also combining the effect of the total business environment, for example promotions and special external events such as holidays and weather. Because of their different nature in their present form, the service that is required is significantly different in each case. For OLTP, being online and being able to store large amounts of data are critical to success, whereas for an OLAP tool the capability to do multidimensional analysis on historical data is what matters. The first step in this direction might have been taken by Microsoft, which, by integrating Reporting Services with its SQL Server, has taken the lead in integrating functionalities in its tools.


