
System Programming

Uploaded by Aslam Pasha


Ans 1) a) Lexical Analysis

The lexical analyzer is the interface between the source program and the compiler. It reads the source program one character at a time and carves it into a sequence of atomic units called tokens. Each token represents a sequence of characters that can be treated as a single logical entity. Identifiers, keywords, constants, operators, and punctuation symbols such as commas and parentheses are typical tokens. There are two kinds of token: specific strings such as IF or a semicolon, and classes of strings such as identifiers, constants, or labels.

The lexical analyzer and the following phase, the syntax analyzer, are often grouped together into the same pass. In that pass, the lexical analyzer operates either under the control of the parser or as a co-routine with the parser. The parser asks the lexical analyzer for the next token whenever it needs one, and the lexical analyzer returns to the parser a code for the token that it found. If the token is an identifier or another token with a value, the value is also passed to the parser. The usual method of providing this information is for the lexical analyzer to call a bookkeeping routine, which installs the actual value in the symbol table if it is not already there. The lexical analyzer then passes the two components of the token to the parser. The first is a code for the token type (for example, identifier). The second is the value, a pointer to the place in the symbol table reserved for the specific value found.

b) Syntax Analysis

The parser has two functions. It checks that the tokens appearing in its input, which is the output of the lexical analyzer, occur in patterns that are permitted by the specification for the source language. It also imposes on the tokens a tree-like structure that is used by the subsequent phases of the compiler. The second aspect of syntax analysis is to make explicit the hierarchical structure of the incoming token stream by identifying which parts of the token stream should be grouped together.
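A small recursive-descent parser shows both functions of the parser at work. This is a minimal sketch built on a toy grammar that is not taken from the original: operands are single letters or digits, and the operators are + - * / with parentheses. The names parse, to_string, and node_free are hypothetical. The parser rejects token sequences that the grammar does not permit. For valid input it builds a tree that makes the grouping explicit.

```c
#include <ctype.h>
#include <stdlib.h>
#include <string.h>

/* Parse-tree node: a leaf holds one operand character; an interior node
   holds a binary operator and its two subtrees. */
typedef struct Node {
    char op;
    struct Node *left, *right;   /* both NULL for a leaf */
} Node;

typedef struct {
    const char *p;   /* current position in the input */
    int error;       /* becomes 1 at the first syntax error */
} Parser;

static Node *node_new(char op, Node *l, Node *r) {
    Node *n = malloc(sizeof *n);   /* allocation failure not handled in this sketch */
    n->op = op;
    n->left = l;
    n->right = r;
    return n;
}

void node_free(Node *n) {
    if (n == NULL) return;
    node_free(n->left);
    node_free(n->right);
    free(n);
}

/* Skip blanks and return the next token character without consuming it. */
static char peek(Parser *ps) {
    while (*ps->p == ' ') ps->p++;
    return *ps->p;
}

static Node *expr(Parser *ps);

/* factor -> letter | digit | '(' expr ')' */
static Node *factor(Parser *ps) {
    char c = peek(ps);
    if (isalnum((unsigned char)c)) {
        ps->p++;
        return node_new(c, NULL, NULL);
    }
    if (c == '(') {
        ps->p++;
        Node *n = expr(ps);
        if (peek(ps) == ')') {
            ps->p++;
            return n;
        }
        node_free(n);
    }
    ps->error = 1;   /* this token is not permitted here */
    return NULL;
}

/* term -> factor { ('*' | '/') factor }; the loop makes * and / left-associative */
static Node *term(Parser *ps) {
    Node *n = factor(ps);
    while (!ps->error && (peek(ps) == '*' || peek(ps) == '/')) {
        char op = *ps->p++;
        n = node_new(op, n, factor(ps));
    }
    return n;
}

/* expr -> term { ('+' | '-') term } */
static Node *expr(Parser *ps) {
    Node *n = term(ps);
    while (!ps->error && (peek(ps) == '+' || peek(ps) == '-')) {
        char op = *ps->p++;
        n = node_new(op, n, term(ps));
    }
    return n;
}

/* Returns the parse tree, or NULL if the input is not a valid expression. */
Node *parse(const char *src) {
    Parser ps = { src, 0 };
    Node *n = expr(&ps);
    if (!ps.error && peek(&ps) != '\0') ps.error = 1;   /* leftover tokens */
    if (ps.error) {
        node_free(n);
        return NULL;
    }
    return n;
}

static void append(char *out, char c) {
    size_t len = strlen(out);
    out[len] = c;
    out[len + 1] = '\0';
}

/* Write the tree fully parenthesized; out must start as an empty string. */
void to_string(const Node *n, char *out) {
    if (n->left == NULL) {
        append(out, n->op);
        return;
    }
    append(out, '(');
    to_string(n->left, out);
    append(out, n->op);
    to_string(n->right, out);
    append(out, ')');
}
```

For example, parse("a + b * c") produces a tree that prints as (a+(b*c)), because * binds more tightly than +. parse("a + * b") returns NULL, because * cannot start an operand.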

Ans 2) The Reduced Instruction Set Computer (RISC) is a CPU design philosophy that favors a simpler set of instructions that all take about the same amount of time to execute. Well-known RISC microprocessor families include AVR, PIC, ARM, DEC Alpha, PA-RISC, SPARC, MIPS, and IBM's PowerPC. A RISC architecture uses a small, highly optimized set of instructions rather than the more specialized instructions often found in other types of architecture.

RISC characteristics:

Small number of machine instructions: fewer than 150
Small number of addressing modes: fewer than 4
Small number of instruction formats: fewer than 4
Instructions of the same length (typically 32 bits)
Single-cycle execution
Load/store architecture
Large number of GPRs (general-purpose registers): typically 32 or more
Hardwired control
Support for HLLs (high-level languages)

Ans 3) Data Structure

The second step in our design procedure is to establish the databases that we have to work with.

Pass 1 Data Structures

1. The input source program.
2. A Location Counter (LC), used to keep track of each instruction's location.
3. A table, the Machine-Operation Table (MOT), that indicates, for each instruction, the symbolic mnemonic and the instruction length (two, four, or six bytes).
4. A table, the Pseudo-Operation Table (POT), that indicates the symbolic mnemonic and the action to be taken for each pseudo-op in pass 1.
5. A table, the Symbol Table (ST), that is used to store each label and its corresponding value.
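Here is a minimal sketch of how pass 1 uses these data structures. It assumes the source has already been split into label, opcode, and operand fields. It uses a small illustrative MOT: LR and AR are 2 bytes; L, A, and ST are 4; MVC is 6. Only START, USING, DC, DS, and END are handled as pseudo-ops. The sketch further assumes that DC defines one fullword, that the DS operand is a count of fullwords, and that alignment can be ignored. The names SourceLine, MotEntry, SymEntry, and pass1 are hypothetical.

```c
#include <stdlib.h>
#include <string.h>

/* One source statement, already split into fields (label may be NULL). */
typedef struct { const char *label, *op, *operand; } SourceLine;

/* MOT entry: mnemonic and instruction length in bytes. */
typedef struct { const char *mnemonic; int length; } MotEntry;

/* ST entry: label and its value (the LC when the label was defined). */
typedef struct { char name[9]; int value; } SymEntry;

static const MotEntry MOT[] = {
    {"LR", 2}, {"AR", 2}, {"L", 4}, {"A", 4}, {"ST", 4}, {"MVC", 6},
};

static int mot_length(const char *op) {
    for (size_t i = 0; i < sizeof MOT / sizeof MOT[0]; i++)
        if (strcmp(MOT[i].mnemonic, op) == 0) return MOT[i].length;
    return -1;
}

/* Pass 1: assign a value to every label.  Returns the number of ST entries
   written to st, or -1 if an opcode is in neither the POT nor the MOT. */
int pass1(const SourceLine *src, int n, SymEntry *st) {
    int lc = 0, nsym = 0;
    for (int i = 0; i < n; i++) {
        const SourceLine *ln = &src[i];
        if (strcmp(ln->op, "START") == 0)          /* POT: set the LC */
            lc = atoi(ln->operand);
        if (ln->label != NULL) {                   /* ST: label = current LC */
            strncpy(st[nsym].name, ln->label, 8);
            st[nsym].name[8] = '\0';
            st[nsym].value = lc;
            nsym++;
        }
        if (strcmp(ln->op, "END") == 0) break;
        if (strcmp(ln->op, "START") == 0 || strcmp(ln->op, "USING") == 0)
            continue;                              /* no storage used */
        if (strcmp(ln->op, "DC") == 0) {
            lc += 4;                               /* one fullword constant */
        } else if (strcmp(ln->op, "DS") == 0) {
            lc += 4 * atoi(ln->operand);           /* operand = fullword count */
        } else {
            int len = mot_length(ln->op);          /* MOT: instruction length */
            if (len < 0) return -1;
            lc += len;
        }
    }
    return nsym;
}
```

Take a program that starts at 0 and begins with L, A, and ST, followed by two DC constants. The three 4-byte instructions occupy locations 0 to 11, so the label on the first constant receives the value 12.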

Pass 2 Data Structures

1. A copy of the source program input to pass 1.
2. The Location Counter (LC).
3. A table, the Machine-Operation Table (MOT), that indicates, for each instruction, the symbolic mnemonic, length (two, four, or six bytes), binary machine opcode, and instruction format.
4. A table, the Pseudo-Operation Table (POT), that indicates the symbolic mnemonic and the action to be taken for each pseudo-op in pass 2.
5. A table, the Symbol Table (ST), prepared by pass 1, containing each label and its corresponding value.
6. A table, the Base Table (BT), that indicates which registers are currently specified as base registers by USING pseudo-ops and what the specified contents of these registers are.
7. A work space, INST, that is used to hold each instruction as its various parts are being assembled together.
8. A work space, PRINT LINE, used to produce a printed listing.
9. A work space, PUNCH CARD, used prior to actual outputting for converting the assembled instructions into the format needed by the loader.
10. An output deck of assembled instructions in the format needed by the loader.
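This sketch shows how pass 2 might consult the base table when it assembles a storage reference into base-register-plus-displacement form. It assumes IBM 360-style rules: only registers 1 to 15 can serve as base registers (register 0 as a base means "no base"), and displacements are 12 bits, so they range from 0 to 4095. When more than one register qualifies, the sketch picks the one giving the smallest displacement; that choice is an assumption. BaseTable, bt_using, bt_drop, and bt_resolve are hypothetical names.

```c
#include <stdbool.h>
#include <string.h>

/* BT: for each general register, whether a USING made it a base register
   and what contents it was promised to hold. */
typedef struct {
    bool available[16];
    long contents[16];
} BaseTable;

void bt_init(BaseTable *bt) { memset(bt, 0, sizeof *bt); }

/* Effect of "USING value,reg" in pass 2. */
void bt_using(BaseTable *bt, int reg, long value) {
    bt->available[reg] = true;
    bt->contents[reg] = value;
}

/* Effect of "DROP reg". */
void bt_drop(BaseTable *bt, int reg) { bt->available[reg] = false; }

/* Find a base register that reaches addr with a 12-bit displacement.
   Returns the register number and stores the displacement in *disp,
   or returns -1 if no available base register covers addr. */
int bt_resolve(const BaseTable *bt, long addr, int *disp) {
    int best = -1;
    for (int r = 1; r < 16; r++) {          /* register 0 cannot be a base */
        if (!bt->available[r]) continue;
        long d = addr - bt->contents[r];
        if (d >= 0 && d <= 4095 && (best < 0 || d < *disp)) {
            best = r;
            *disp = (int)d;
        }
    }
    return best;
}
```

Suppose register 15 is the base with contents 0. Address 5000 is then out of reach. After a USING that makes register 12 a base with contents 4096, address 5000 assembles as displacement 904 from register 12.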

Ans b)

[Figure: Flowchart for the Pass 1 algorithm]

[Figure: Flowchart for the Pass 2 algorithm]

Ans 4. a) Parsing

Parsing is the process of analyzing a sequence of tokens to determine its grammatical structure with respect to a given formal grammar. A parser is the component of a compiler that carries out this task. Parsing transforms the input text into a data structure, usually a tree, which is suitable for later processing and which captures the implied hierarchy of the input. Lexical analysis creates tokens from a sequence of input characters, and it is these tokens that the parser processes to build a data structure such as a parse tree or an abstract syntax tree.

b) Scanning

Scanning breaks the source code text into small pieces called tokens (sometimes called terminals). Each token represents a single piece of the language, for instance a keyword, an identifier, or a symbol name. The token language is typically a regular language, so a finite state automaton constructed from a regular expression can be used to recognize it.
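A scanner and the bookkeeping routine described under lexical analysis can be sketched together. This minimal sketch assumes identifiers of the form letter (letter | digit)* and unsigned decimal integers. Each while loop acts as a small hand-coded finite automaton for one token class. Identifiers are installed in a symbol table and returned as (code, index) pairs, while IF is treated as a keyword. The token codes, the 32-entry table size, and all function names are hypothetical.

```c
#include <ctype.h>
#include <string.h>

enum { TOK_EOF, TOK_IF, TOK_ID, TOK_NUM, TOK_SEMI, TOK_OTHER };

/* A token is passed to the parser as two components: a code for its type
   and a value (symbol-table index, numeric value, or the character itself). */
typedef struct { int code; int value; } Token;

/* Fixed-size symbol table; overflow is not checked in this sketch. */
typedef struct { char names[32][16]; int count; } SymTab;

/* Bookkeeping routine: return the entry for a name, installing it if new.
   Names longer than 15 characters are truncated. */
static int install(SymTab *st, const char *s, size_t len) {
    if (len > 15) len = 15;
    for (int i = 0; i < st->count; i++)
        if (strlen(st->names[i]) == len && strncmp(st->names[i], s, len) == 0)
            return i;
    memcpy(st->names[st->count], s, len);
    st->names[st->count][len] = '\0';
    return st->count++;
}

/* Scan one token starting at *pp and advance *pp past it. */
Token next_token(const char **pp, SymTab *st) {
    const char *p = *pp;
    Token t = { TOK_EOF, 0 };
    while (isspace((unsigned char)*p)) p++;
    if (*p == '\0') {
        *pp = p;
        return t;
    }
    if (isalpha((unsigned char)*p)) {              /* letter (letter | digit)* */
        const char *start = p;
        while (isalnum((unsigned char)*p)) p++;
        size_t len = (size_t)(p - start);
        if (len == 2 && strncmp(start, "IF", 2) == 0) {
            t.code = TOK_IF;                       /* a specific string */
        } else {
            t.code = TOK_ID;                       /* a class of strings */
            t.value = install(st, start, len);
        }
    } else if (isdigit((unsigned char)*p)) {       /* digit digit* */
        t.code = TOK_NUM;
        while (isdigit((unsigned char)*p)) t.value = t.value * 10 + (*p++ - '0');
    } else {
        t.code = (*p == ';') ? TOK_SEMI : TOK_OTHER;
        t.value = (unsigned char)*p++;
    }
    *pp = p;
    return t;
}
```

When scanning "IF count > 10; count", both occurrences of count are returned as TOK_ID with the same symbol-table index, 0. The name is installed only once.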

c) Token

Lexical analysis breaks the source code text into small pieces called tokens. Each token is a single atomic unit of the language, for instance a keyword, an identifier, or a symbol name. The token syntax is typically a regular language, so an automaton constructed from a regular expression can be used to recognize it.

Ans 5.

Bootstrapping

In computing, bootstrapping refers to a process where a simple system activates another, more complicated system that serves the same purpose. It is a solution to the chicken-and-egg problem of starting a certain system without the system already functioning. The term is most often applied to the process of starting up a computer. There, a mechanism is needed to execute the software program that is responsible for executing software programs (the operating system).

Bootstrap loading

The discussions of loading up to this point have all presumed that there is already an operating system, or at least a program loader, resident in the computer to load the program of interest. The chain of programs being loaded by other programs has to start somewhere.

In modern computers, the first program the computer runs after a hardware reset is invariably stored in a ROM known as the bootstrap ROM, as in pulling oneself up by one's bootstraps. When the CPU is powered on or reset, it sets its registers to a known state. On x86 systems, for example, the reset sequence jumps to the address 16 bytes below the top of the system's address space. The bootstrap ROM occupies the top 64K of the address space, and the ROM code then starts up the computer. On IBM-compatible x86 systems, the boot ROM code reads the first block of the floppy disk into memory, or the first block of the first hard disk if that fails. It loads that block at address 0x7C00 and jumps to it. The program in block zero in turn loads a slightly larger operating system boot program from a known place on the disk into memory and jumps to that program. That program then loads the operating system and starts it.

Many Unix systems use a similar bootstrap process to get user-mode programs running. The kernel creates a process, then stuffs a tiny program, only a few dozen bytes long, into that process. The tiny program executes a system call that runs /etc/init, the user-mode initialization program. That program in turn runs configuration files and starts the daemons and login programs that a running system needs.

Ans 6) Design of a linker

Relocation and linking requirements in segmented addressing

The relocation requirements of a program are influenced by the addressing structure of the computer system on which it is to execute. Use of the segmented addressing structure reduces the relocation requirements of a program.

Implementation example: a linker for MS-DOS

Example: Consider the program written in the assembly language of the Intel 8088. The ASSUME statement declares the segment registers CS and DS to be available for memory addressing. Hence all memory addressing is performed by using suitable displacements from their contents. The translation-time address of A is 0196. In statement 16, a reference to A is assembled as a displacement of 0196 from the contents of the CS register. This avoids the use of an absolute address, so the instruction is not address sensitive. No relocation is needed if segment SAMPLE is to be loaded at address 2000 by a calling program. The effective operand address would be calculated as <CS>+0196, which is the correct address 2196. A similar situation exists with the reference to B in statement 17, which is assembled as a displacement of 0002 from the contents of the DS register. The DS register would be loaded with the execution-time address of DATA_HERE, so the reference to B would be automatically relocated to the correct address.
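By contrast, a word holding an absolute address must be patched when the program is loaded somewhere other than its translation-time origin. Below is a minimal sketch of that relocation step, assuming a hypothetical program image in which a relocation table lists the indices of the address-sensitive words. The loader adds the relocation factor (load origin minus translation-time origin) to each listed word. A displacement from a segment register, like the reference to A in statement 16, is simply not listed and stays unchanged. Program and relocate are hypothetical names.

```c
/* Hypothetical program image: words[] holds the translated code/data and
   reloc[] lists the indices of words that contain absolute addresses. */
typedef struct {
    long words[16];
    int nwords;
    int reloc[16];
    int nreloc;
    long link_origin;    /* origin assumed at translation time */
} Program;

/* Move the program to load_origin by adding the relocation factor to every
   address-sensitive word.  Displacement-based operands are not listed in
   reloc[], so they are left untouched. */
void relocate(Program *p, long load_origin) {
    long factor = load_origin - p->link_origin;
    for (int i = 0; i < p->nreloc; i++)
        p->words[p->reloc[i]] += factor;
    p->link_origin = load_origin;
}
```

Suppose words[0] holds the absolute address 196 and words[1] holds a CS-relative displacement of 196. Relocating from origin 0 to 2000 then changes only words[0], to 2196.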

Ans 1. Addressing Modes of CISC

The Motorola 68000 addressing modes

The 68000 supports a wide variety of addressing modes.

Operands can be moved register to register, register to memory, memory to register, and memory to memory.

Immediate - The operand immediately follows the instruction.

Absolute address - The address of the operand immediately follows the instruction, in either the "short" 16-bit form or the "long" 32-bit form.

Program Counter relative with displacement - A displacement value is added to the program counter to calculate the operand's address. The displacement can be positive or negative.

Program Counter relative with index and displacement - The instruction contains both the identity of an "index register" and a trailing displacement value. The contents of the index register, the displacement value, and the program counter are added together to get the final address.

Register direct - The operand is contained in an address or data register.

Address register indirect - An address register contains the address of the operand.

Address register indirect with predecrement or postincrement - An address register contains the address of the operand in memory. With the predecrement option, a predetermined value (normally the operand size) is subtracted from the register before the (new) address is used. With the postincrement option, a predetermined value is added to the register after the operation completes.

Addressing Modes for the Intel 80x86 Architecture

Simple addressing modes (three):

Immediate mode: the operand is part of the instruction.
Example: mov ah, 09h
Example: mov dx, offset Prompt

Register addressing: the operand is contained in a register.
Example: add ax, bx

Direct: the operand field of the instruction contains the effective address.
Example: add ax, a
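The address arithmetic behind several of these modes can be sketched in a few lines. This is a simplified model, not a 68000 emulator. It assumes eight data and eight address registers, and it treats pc as the value the displacement is added to; on the real 68000 that value is the address of the extension word. A data register serves as the index, and the caller chooses the step size for predecrement and postincrement. The 80x86 simple modes map onto the same model: immediate and register operands have no memory address, and direct addressing is the ABSOLUTE case. All type and function names are hypothetical.

```c
/* Simplified register state: 68000-style data and address registers. */
typedef struct { long d[8], a[8], pc; } Cpu;

typedef enum {
    IMMEDIATE, ABSOLUTE, REG_DIRECT, REG_INDIRECT,
    PREDEC, POSTINC, PC_DISP, PC_INDEX_DISP
} Mode;

typedef struct {
    Mode mode;
    int reg;      /* address register used by the indirect modes */
    int index;    /* data register used as the index register */
    long value;   /* immediate value, absolute address, or displacement */
    int size;     /* step for predecrement / postincrement (operand size) */
} Operand;

/* Store the operand's effective address in *ea and return 0, or return -1
   for modes whose operand is not in memory (immediate, register direct). */
int effective_address(Cpu *cpu, const Operand *op, long *ea) {
    switch (op->mode) {
    case ABSOLUTE:          /* also 80x86 direct addressing */
        *ea = op->value;
        return 0;
    case REG_INDIRECT:
        *ea = cpu->a[op->reg];
        return 0;
    case PREDEC:            /* decrement first, then use the new address */
        cpu->a[op->reg] -= op->size;
        *ea = cpu->a[op->reg];
        return 0;
    case POSTINC:           /* use the old address; the register moves on */
        *ea = cpu->a[op->reg];
        cpu->a[op->reg] += op->size;
        return 0;
    case PC_DISP:
        *ea = cpu->pc + op->value;
        return 0;
    case PC_INDEX_DISP:
        *ea = cpu->pc + cpu->d[op->index] + op->value;
        return 0;
    default:                /* IMMEDIATE, REG_DIRECT */
        return -1;
    }
}
```

With address register 0 holding 200, a 4-byte predecrement yields address 196 and leaves the register at 196. A following 4-byte postincrement also uses 196, then restores the register to 200, which is how the two modes together implement a stack.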

Ans 2) Non-Deterministic Finite Automaton (NFA)

A non-deterministic finite automaton (NFA) is a 5-tuple $(S, \Sigma, T, s, A)$ consisting of:

an alphabet $\Sigma$
a set of states $S$
a transition function $T: S \times (\Sigma \cup \{\varepsilon\}) \to P(S)$
a start state $s \in S$
a set of accept states $A \subseteq S$

where $P(S)$ is the power set of $S$ and $\varepsilon$ is the empty string. The machine starts in the start state and reads a string of symbols from its alphabet. It uses the transition relation $T$ to determine the next state(s) from the current state and either the symbol just read or the empty string. The machine accepts the string if, after reading all of it, at least one of the states it can be in is an accepting state; otherwise it rejects the string. The set of strings it accepts forms a language, which is the language the NFA recognizes.
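Because an NFA can be in several states at once, it can be simulated by tracking the whole set of current states. This is a minimal sketch that assumes at most 32 states, so a set of states fits in a bitmask, and an ASCII alphabet. T is split into a table of moves on symbols and a table of ε-moves, and after each symbol the simulation takes the ε-closure. The type Nfa and the function names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

#define MAX_STATES 32

/* NFA (S, Sigma, T, s, A) with S = {0, ..., n-1}.  A set of states is a
   bitmask; T is stored as on_char[q][c] (moves on symbol c) and on_eps[q]
   (moves on the empty string). */
typedef struct {
    int n;
    uint32_t on_char[MAX_STATES][128];
    uint32_t on_eps[MAX_STATES];
    int start;
    uint32_t accept;
} Nfa;

/* Add every state reachable through epsilon moves until nothing changes. */
static uint32_t eps_closure(const Nfa *m, uint32_t set) {
    uint32_t prev;
    do {
        prev = set;
        for (int q = 0; q < m->n; q++)
            if (set & ((uint32_t)1 << q)) set |= m->on_eps[q];
    } while (set != prev);
    return set;
}

/* Follow all possible paths at once; accept if any current state is an
   accept state after the whole input has been read. */
bool nfa_accepts(const Nfa *m, const char *input) {
    uint32_t cur = eps_closure(m, (uint32_t)1 << m->start);
    for (const char *p = input; *p != '\0'; p++) {
        unsigned char c = (unsigned char)*p;
        if (c >= 128) return false;          /* symbol not in the alphabet */
        uint32_t next = 0;
        for (int q = 0; q < m->n; q++)
            if (cur & ((uint32_t)1 << q)) next |= m->on_char[q][c];
        cur = eps_closure(m, next);
    }
    return (cur & m->accept) != 0;
}
```

As an illustration, consider the two-state machine with T(0, a) = {0}, T(0, ε) = {1}, and T(1, b) = {1}, start state 0, and A = {1}. It recognizes a*b*: it accepts "", "aab", and "abb", and it rejects "ba".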

Deterministic Finite Automata

Definition: A deterministic finite automaton (DFA) is a 5-tuple $(S, \Sigma, T, s, A)$ consisting of:

an alphabet $\Sigma$
a set of states $S$
a transition function $T: S \times \Sigma \to S$
a start state $s \in S$
a set of accept states $A \subseteq S$
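The definition translates almost directly into a table-driven program. As an illustration (this particular machine is not from the original), take the DFA whose state records the value of the binary numeral read so far, modulo 3. Its components are S = {0, 1, 2}, Σ = {0, 1}, T(r, b) = (2r + b) mod 3, s = 0, and A = {0}. It accepts exactly the numerals divisible by 3, plus the empty string, which leaves it in the start state. The function name dfa_accepts is hypothetical.

```c
#include <stdbool.h>

/* T[r][b] = (2r + b) mod 3: one next state for every (state, symbol) pair. */
static const int T[3][2] = {
    /* on '0'  on '1' */
    {0, 1},    /* state 0 */
    {2, 0},    /* state 1 */
    {1, 2},    /* state 2 */
};

bool dfa_accepts(const char *input) {
    int state = 0;                                  /* start state s */
    for (const char *p = input; *p != '\0'; p++) {
        if (*p != '0' && *p != '1') return false;   /* symbol not in Sigma */
        state = T[state][*p - '0'];                 /* exactly one next state */
    }
    return state == 0;                              /* is the final state in A? */
}
```

For example, "110" (6) and "1001" (9) are accepted and "111" (7) is rejected. Unlike the NFA, the DFA is in exactly one state at each step.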

Ans 3) a) The C Preprocessor for GCC version 2

The C preprocessor is a macro processor that is used automatically by the C compiler to transform your program before actual compilation. It is called a macro processor because it allows you to define macros, which are brief abbreviations for longer constructs. The C preprocessor provides four separate facilities that you can use as you see fit:

Inclusion of header files. These are files of declarations that can be substituted into your program.

Macro expansion. You can define macros, which are abbreviations for arbitrary fragments of C code, and then the C preprocessor will replace the macros with their definitions throughout the program.

Conditional compilation. Using special preprocessing directives, you can include or exclude parts of the program according to various conditions.

Line control. If you use a program to combine or rearrange source files into an intermediate file which is then compiled, you can use line control to inform the compiler of where each source line originally came from.
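The following small translation unit touches all four facilities. It is a sketch: the macro SQUARE, the DIALECT strings, and the file name gen.y given to #line are hypothetical, chosen only to make each facility observable.

```c
#include <string.h>   /* 1. Header inclusion: declares strcmp */

/* 2. Macro expansion: every use of SQUARE(x) is replaced by the body. */
#define SQUARE(x) ((x) * (x))

/* 3. Conditional compilation: only one of these definitions is compiled. */
#if defined(__STDC_VERSION__) && __STDC_VERSION__ >= 199901L
#define DIALECT "C99 or later"
#else
#define DIALECT "C89/C90"
#endif

int square_of_sum(int a, int b) { return SQUARE(a + b); }   /* ((a + b) * (a + b)) */

const char *dialect(void) { return DIALECT; }

/* 4. Line control: from here on the compiler believes the code comes from
   line 100 of the (hypothetical) generator input file gen.y. */
#line 100 "gen.y"
int reported_line(void) { return __LINE__; }
int reported_from_gen_y(void) { return strcmp(__FILE__, "gen.y") == 0; }
```

square_of_sum(2, 3) is 25 because the parentheses in the body keep a + b together. Without them, the expansion a + b * a + b would give 11.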

ANSI Standard C requires the rejection of many harmless constructs commonly used by today's C programs. Such incompatibility would be inconvenient for users, so the GNU C preprocessor is configured to accept these constructs by default. Strictly speaking, to get ANSI Standard C, you must use the options '-trigraphs', '-undef', and '-pedantic'. In practice, however, the consequences of having strict ANSI Standard C make it undesirable to do this.

b) Conditional Assembly

Conditional assembly means that some sections of the program may be optional: they are either included in the final program or not, depending on specified conditions. A reasonable use of conditional assembly is to combine two versions of a program. One version prints debugging information during test executions for the developer. The other, for production operation, displays only results of interest to the average user. The program fragment below assembles the instructions to print the Ax register only if Debug is true. Note that true is any nonzero value.

Debug   equ   1          ; Debug is true
        :
        :
        Mul   Bx
        If    Debug      ; Assemble only when Debug is true
        Push  Bx
        Mov   Bh, 0
        Call  PutDec$
        Pop   Bx
        Endif
        Sub   Cx, 12
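The same idea appears in C as conditional compilation. This is a minimal sketch that mirrors the Debug fragment. It assumes a hypothetical function that multiplies two values (the role of Mul Bx). Only in the debugging build does the function also record the result, writing it to a caller-supplied buffer instead of calling PutDec$. As with Debug equ 1, any nonzero DEBUG value counts as true in #if.

```c
#include <stdio.h>

#define DEBUG 1   /* like "Debug equ 1"; set to 0 for the production version */

/* Multiply a by b.  The debugging build also records the product in log;
   in the production build those statements are not compiled at all. */
int checked_product(int a, int b, char *log, size_t logsize) {
    int result = a * b;
#if DEBUG
    snprintf(log, logsize, "debug: %d", result);
#else
    (void)log;        /* silence unused-parameter warnings */
    (void)logsize;
#endif
    return result;
}
```

If DEBUG is changed to 0, the snprintf call disappears from the compiled program entirely. This differs from an ordinary run-time if, whose code would still be present.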

Ans 4)

PHASES OF COMPILER

Lexical Analysis

The lexical analyzer is the interface between the source program and the compiler. It reads the source program one character at a time and carves it into a sequence of atomic units called tokens. Each token represents a sequence of characters that can be treated as a single logical entity. Identifiers, keywords, constants, operators, and punctuation symbols such as commas and parentheses are typical tokens. There are two kinds of token: specific strings such as IF or a semicolon, and classes of strings such as identifiers, constants, or labels.

Syntax Analysis

The parser has two functions. It checks that the tokens appearing in its input, which is the output of the lexical analyzer, occur in patterns that are permitted by the specification for the source language. It also imposes on the tokens a tree-like structure that is used by the subsequent phases of the compiler. The second aspect of syntax analysis is to make explicit the hierarchical structure of the incoming token stream by identifying which parts of the token stream should be grouped together.

Code Optimization

Object programs that are frequently executed should be fast and small. Certain compilers have within them a phase that tries to apply transformations to the output of the intermediate code generator. The aim is an intermediate-language version of the source program from which a faster or smaller object-language program can ultimately be produced. This phase is popularly called the optimization phase.
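Constant folding is one example of such a transformation; the original does not name a specific one. It evaluates at compile time any operation whose operands are all constants, so no instructions are generated for it. Below is a minimal sketch over a hypothetical expression tree that supports only + and *.

```c
#include <stddef.h>

/* kind is 'k' for a constant, 'v' for a variable, or the operator '+' or '*'. */
typedef struct Expr {
    char kind;
    int value;              /* used when kind == 'k' */
    char name;              /* used when kind == 'v' */
    struct Expr *l, *r;     /* operands when kind is an operator */
} Expr;

static int is_op(const Expr *e) { return e->kind == '+' || e->kind == '*'; }

/* Fold bottom-up: an operator whose operands are both constants becomes a
   constant.  The old child nodes are simply detached (no freeing here). */
void fold(Expr *e) {
    if (!is_op(e)) return;
    fold(e->l);
    fold(e->r);
    if (e->l->kind == 'k' && e->r->kind == 'k') {
        e->value = (e->kind == '+') ? e->l->value + e->r->value
                                    : e->l->value * e->r->value;
        e->kind = 'k';
        e->l = e->r = NULL;
    }
}

/* Number of operators left: roughly the arithmetic instructions a simple
   code generator would emit. */
int count_ops(const Expr *e) {
    return is_op(e) ? 1 + count_ops(e->l) + count_ops(e->r) : 0;
}
```

For x + 2 * 3, folding replaces 2 * 3 with the constant 6. This leaves one operator instead of two, so the object program is both smaller and faster.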

Code Generation

The code generation phase converts the intermediate code into a sequence of machine instructions. A simple-minded code generator might map the statement A := B + C into the machine code sequence:

LOAD B
ADD C
STORE A
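That simple-minded mapping can be written as a one-statement code generator for a single-accumulator machine. This sketch assumes single-letter variable names. It uses the LOAD, ADD, and STORE mnemonics from the example plus a hypothetical SUB for subtraction. A real code generator would also allocate registers and avoid redundant loads. The name gen_assign is hypothetical.

```c
#include <stdio.h>

/* Emit code for "dst := a op b" into out.  Returns the number of characters
   written (as snprintf does), or -1 for an unsupported operator. */
int gen_assign(char dst, char a, char op, char b, char *out, size_t n) {
    const char *opcode = (op == '+') ? "ADD" : (op == '-') ? "SUB" : NULL;
    if (opcode == NULL) return -1;
    return snprintf(out, n, "LOAD %c\n%s %c\nSTORE %c\n", a, opcode, b, dst);
}
```

gen_assign('A', 'B', '+', 'C', buf, sizeof buf) writes the three lines LOAD B, ADD C, and STORE A.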

Ans 5)

Macro definition and Expansion

A macro is a unit of specification for program generation through expansion. A macro name is an abbreviation, which stands for some related lines of code. Macros are useful for the following purposes:

To simplify and reduce the amount of repetitive coding
To reduce errors caused by repetitive coding
To make an assembly program more readable

A macro consists of a name, a set of formal parameters, and a body of code. Each use of the macro name with a set of actual parameters is replaced by code generated from its body; this is called macro expansion. Macros allow a programmer to define pseudo-operations. These are typically operations that are generally desirable and can be implemented as a sequence of instructions, but are not implemented as part of the processor's instruction set. Each use of a macro generates new program instructions, so the macro has the effect of automating the writing of the program. A macro definition has the form:

Macro-name MACRO <formal parameters>
<macro body>
ENDM
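Below is a minimal sketch of macro expansion by textual substitution. It assumes a macro is stored as a name, a list of formal parameters, and a body. The formals are written &ARG1, &ARG2; this is a common assembler convention, assumed here. Each call copies the body, replacing every formal with the corresponding actual parameter. The Macro type and the expand function are hypothetical. Formals that are prefixes of one another, such as &A and &AB, are not handled.

```c
#include <string.h>

/* A macro definition: name, formal parameters, and body lines separated
   by '\n'. */
typedef struct {
    const char *name;
    const char *formals[4];
    int nformals;
    const char *body;
} Macro;

/* Expand one call: copy the body into out, replacing each formal parameter
   by the corresponding actual parameter.  Returns the expanded length, or
   -1 if out (of size n) is too small. */
int expand(const Macro *m, const char *const actuals[], char *out, size_t n) {
    size_t len = 0;
    for (const char *p = m->body; *p != '\0'; ) {
        const char *piece = p;        /* by default copy one character */
        size_t plen = 1;
        for (int i = 0; i < m->nformals; i++) {
            size_t flen = strlen(m->formals[i]);
            if (strncmp(p, m->formals[i], flen) == 0) {
                piece = actuals[i];   /* formal found: substitute actual */
                plen = strlen(piece);
                p += flen - 1;
                break;
            }
        }
        p++;
        if (len + plen + 1 > n) return -1;
        memcpy(out + len, piece, plen);
        len += plen;
    }
    out[len] = '\0';
    return (int)len;
}
```

Take a macro INCR whose body lines are "A 1,&ARG1" and "A 2,&ARG2". The call INCR DATA1,DATA2 then expands to "A 1,DATA1" and "A 2,DATA2".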

Macros can be defined and used in many programming languages, such as C and C++. Macros are commonly used in C to define small snippets of code. If the macro has parameters, they are substituted into the macro body during expansion; thus, a C macro can mimic a C function. The usual reason for doing this is to avoid the overhead of a function call in simple cases, where the code is lightweight enough that function call overhead has a significant impact on performance. For instance,

#define max(a, b) ((a) > (b) ? (a) : (b))

defines the macro max, taking two arguments a and b. There must be no space between max and the opening parenthesis. With a space, as in #define max (a, b) ..., max would instead be an object-like macro whose replacement text begins with (a, b). This macro may be called like any C function, using identical syntax. Therefore, after preprocessing,

z = max(x, y);

becomes

z = ((x) > (y) ? (x) : (y));

This use of macros is very important in C, for instance to define type-safe generic data types or debugging tools. However, it can also be inefficient, because an argument may be evaluated more than once, and it may lead to a number of pitfalls. C macros are capable of mimicking functions and of creating new syntax within some limitations. They can also expand into arbitrary text, although the C compiler will require that text to be valid C source code (or comments). Even so, they have some limitations as a programming construct. Macros that mimic functions, for instance, can be called like real functions, but a macro cannot be passed to another function using a function pointer, since the macro itself has no address.

In programming languages such as C or assembly language, a macro is a name that defines a set of commands. When the program is compiled or assembled, those commands are substituted for the macro name wherever the name appears in the program (a process called macro expansion). Macros are similar to functions in that they can take arguments and in that they are calls to lengthier sets of instructions. Unlike functions, however, macros are replaced by the actual commands they represent when the program is prepared for execution. Function instructions, by contrast, are copied into a program only once.
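The pitfalls mentioned above are easy to reproduce. This sketch assumes three hypothetical definitions: a fully parenthesized function-like MAX, an unparenthesized MAX_UNSAFE in the style of a naive definition, and an inline function for comparison.

```c
/* Fully parenthesized: expands correctly inside larger expressions. */
#define MAX(a, b) ((a) > (b) ? (a) : (b))

/* Unparenthesized version, kept only to show what goes wrong. */
#define MAX_UNSAFE(a, b) a > b ? a : b

/* A real function: arguments are evaluated once, and it has an address. */
static inline int max_fn(int a, int b) { return a > b ? a : b; }

/* Expands to 2 * x > y ? x : y, which groups as (2 * x > y) ? x : y. */
int twice_max_unsafe(int x, int y) { return 2 * MAX_UNSAFE(x, y); }

int twice_max(int x, int y) { return 2 * MAX(x, y); }

/* Expands to (((*i)++) > (j) ? ((*i)++) : (j)): when the first argument
   is larger, *i is incremented twice. */
int max_of_increment(int *i, int j) { return MAX((*i)++, j); }

/* A function can be called through a pointer; a macro cannot. */
int (*const max_ptr)(int, int) = max_fn;
```

twice_max_unsafe(1, 3) returns 3 instead of 6. With i = 5, max_of_increment(&i, 3) returns 6 and leaves i at 7, because the macro evaluates the incremented argument twice.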

Ans 6)

Compiler

A compiler is a computer program (or set of programs) that translates text written in a computer language (the source language) into another computer language (the target language). The original sequence is usually called the source code and the output called object code. Commonly the output has a form suitable for processing by other programs (e.g., a linker), but it may be a human-readable text file.

Compiler Backend

Some applications need only the compiler frontend, such as static language verification tools. A real compiler, however, hands the intermediate representation generated by the frontend to the backend, which produces a functionally equivalent program in the output language. This is done in multiple steps:

Optimization - the intermediate language representation is transformed into functionally equivalent but faster (or smaller) forms.

Code Generation - the transformed intermediate language is translated into the output language, usually the native machine language of the system. This involves resource and storage decisions, such as deciding which variables to fit into registers and memory, and the selection and scheduling of appropriate machine instructions.

Typical compilers output so-called objects. These basically contain machine code augmented by information about the name and location of entry points and external calls (to functions not contained in the object). A set of object files, which need not all have come from a single compiler, may then be linked together to create the final executable, which can be run directly by a user.

Compiler frontend

Vous aimerez peut-être aussi

- The Yellow House: A Memoir (2019 National Book Award Winner)D'EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Évaluation : 4 sur 5 étoiles4/5 (98)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceD'EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceÉvaluation : 4 sur 5 étoiles4/5 (895)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeD'EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeÉvaluation : 4 sur 5 étoiles4/5 (5794)

- The Little Book of Hygge: Danish Secrets to Happy LivingD'EverandThe Little Book of Hygge: Danish Secrets to Happy LivingÉvaluation : 3.5 sur 5 étoiles3.5/5 (399)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaD'EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaÉvaluation : 4.5 sur 5 étoiles4.5/5 (266)

- Shoe Dog: A Memoir by the Creator of NikeD'EverandShoe Dog: A Memoir by the Creator of NikeÉvaluation : 4.5 sur 5 étoiles4.5/5 (537)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureD'EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureÉvaluation : 4.5 sur 5 étoiles4.5/5 (474)

- Never Split the Difference: Negotiating As If Your Life Depended On ItD'EverandNever Split the Difference: Negotiating As If Your Life Depended On ItÉvaluation : 4.5 sur 5 étoiles4.5/5 (838)

- Grit: The Power of Passion and PerseveranceD'EverandGrit: The Power of Passion and PerseveranceÉvaluation : 4 sur 5 étoiles4/5 (588)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryD'EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryÉvaluation : 3.5 sur 5 étoiles3.5/5 (231)

- The Emperor of All Maladies: A Biography of CancerD'EverandThe Emperor of All Maladies: A Biography of CancerÉvaluation : 4.5 sur 5 étoiles4.5/5 (271)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyD'EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyÉvaluation : 3.5 sur 5 étoiles3.5/5 (2259)

- On Fire: The (Burning) Case for a Green New DealD'EverandOn Fire: The (Burning) Case for a Green New DealÉvaluation : 4 sur 5 étoiles4/5 (73)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersD'EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersÉvaluation : 4.5 sur 5 étoiles4.5/5 (344)

- Team of Rivals: The Political Genius of Abraham LincolnD'EverandTeam of Rivals: The Political Genius of Abraham LincolnÉvaluation : 4.5 sur 5 étoiles4.5/5 (234)

- The Unwinding: An Inner History of the New AmericaD'EverandThe Unwinding: An Inner History of the New AmericaÉvaluation : 4 sur 5 étoiles4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreD'EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreÉvaluation : 4 sur 5 étoiles4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)D'EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Évaluation : 4.5 sur 5 étoiles4.5/5 (121)

- Her Body and Other Parties: StoriesD'EverandHer Body and Other Parties: StoriesÉvaluation : 4 sur 5 étoiles4/5 (821)

- Introduction To REXX WorkshopDocument75 pagesIntroduction To REXX WorkshopBurhan MalikPas encore d'évaluation

- Simio Release Notes 203Document99 pagesSimio Release Notes 203eritros1717Pas encore d'évaluation

- CSC 305: Programming Paradigm: Introduction To Language, Syntax and SemanticsDocument38 pagesCSC 305: Programming Paradigm: Introduction To Language, Syntax and SemanticsDanny IshakPas encore d'évaluation

- A Python Book Beginning Python Advanced Python and Python Exercises-3Document1 pageA Python Book Beginning Python Advanced Python and Python Exercises-3gjhgjgjPas encore d'évaluation

- Machine Translation Using Open NLP and Rules Based System English To Marathi TranslatorDocument4 pagesMachine Translation Using Open NLP and Rules Based System English To Marathi TranslatorEditor IJRITCCPas encore d'évaluation

- Syntax-Directed Translation: Govind Kumar Jha Lecturer, CSE Glaitm MathuraDocument21 pagesSyntax-Directed Translation: Govind Kumar Jha Lecturer, CSE Glaitm MathuraRajat GuptaPas encore d'évaluation

- Flex/Bison Tutorial: Aaron Myles Landwehr Aron+ta@udel - EduDocument57 pagesFlex/Bison Tutorial: Aaron Myles Landwehr Aron+ta@udel - EduJoão AlvesPas encore d'évaluation

- SAS Interview Questions:macrosDocument6 pagesSAS Interview Questions:macrosAnsh HosseinPas encore d'évaluation

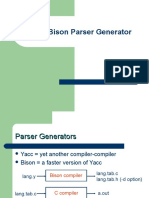

- Yacc / Bison Parser GeneratorDocument19 pagesYacc / Bison Parser GeneratorBlejan LarisaPas encore d'évaluation

- Compiler Design NotesDocument8 pagesCompiler Design NotesiritikdevPas encore d'évaluation

- Image Caption2Document9 pagesImage Caption2MANAL BENNOUFPas encore d'évaluation

- Lex YaacDocument24 pagesLex YaacMichael JamesPas encore d'évaluation

- BE Computer 2012 Course 27-8-15Document64 pagesBE Computer 2012 Course 27-8-15Bhavik ShahPas encore d'évaluation

- According To The Bible, The Rainbow Is A Token of God's Covenant With NoahDocument2 pagesAccording To The Bible, The Rainbow Is A Token of God's Covenant With NoahAndrew FanePas encore d'évaluation

- Compiler Design Case Study 2Document6 pagesCompiler Design Case Study 2UTKARSH ARYAPas encore d'évaluation

- QS User TutorialDocument333 pagesQS User TutorialsandeepkvaPas encore d'évaluation

- Unit 5 ScannerDocument14 pagesUnit 5 ScannerHuy Đỗ QuangPas encore d'évaluation

- Coursenotes Lesson1Document59 pagesCoursenotes Lesson1Aland BravoPas encore d'évaluation

- Lexical Analyzer (Compiler Contruction)Document6 pagesLexical Analyzer (Compiler Contruction)touseefaq100% (1)

- Lesson 4Document15 pagesLesson 4Alan ZhouPas encore d'évaluation

- PCD - Answer Key NOV 2019Document19 pagesPCD - Answer Key NOV 2019axar kumarPas encore d'évaluation

- Symbol Table ManagementDocument7 pagesSymbol Table ManagementJessica CunninghamPas encore d'évaluation

- Compiler Design: Computer ScienceDocument117 pagesCompiler Design: Computer SciencesecefibPas encore d'évaluation

- PPL Assignment 1Document9 pagesPPL Assignment 1Pritesh PawarPas encore d'évaluation

- Models of HCIDocument19 pagesModels of HCIAnkit Singh Chauhan100% (1)

- 1.describing Syntax and SemanticsDocument110 pages1.describing Syntax and Semanticsnsavi16eduPas encore d'évaluation

- Compiler Design - Syntax AnalysisDocument11 pagesCompiler Design - Syntax Analysisabu syedPas encore d'évaluation

- A Grammar For The C-Programming Language (Version S21) March 23, 2021Document8 pagesA Grammar For The C-Programming Language (Version S21) March 23, 2021vishwaPas encore d'évaluation

- Anna University Principle of Compiler Design Question PaperDocument5 pagesAnna University Principle of Compiler Design Question PapermasisathisPas encore d'évaluation

- TK3163 Sem2 2020 1MyCh1.1-1.2 Intro-20200211121547Document39 pagesTK3163 Sem2 2020 1MyCh1.1-1.2 Intro-20200211121547Nurul Irdina SyazwinaPas encore d'évaluation