Linear classifiers, a perceptron family

Václav Hlaváč

Czech Technical University in Prague

Faculty of Electrical Engineering, Department of Cybernetics

Center for Machine Perception

http://cmp.felk.cvut.cz/hlavac, hlavac@fel.cvut.cz

Courtesy: M.I. Schlesinger, V. Franc.

Outline of the talk:

A classifier, dichotomy, a multi-class classifier.

A linear discriminant function.

Learning a linear classifier.

Perceptron and its learning.

ε-solution.

Learning for infinite training sets.

A classifier

An analyzed object is represented by

$X$, a space of observations, a vector space of dimension $n$.

$Y$, a set of hidden states.

The aim of classification is to determine a relation between $X$ and $Y$, i.e., to find a discriminant function $f : X \to Y$.

A classifier $q : X \to J$ maps observations $X^n$ to the set of class indices $J = \{1, \ldots, |Y|\}$.

Mutual exclusion of classes is required:

$$X = X_1 \cup X_2 \cup \ldots \cup X_{|Y|}, \qquad X_i \cap X_j = \emptyset, \quad i \neq j, \quad i, j = 1, \ldots, |Y|.$$

Classifier, an illustration

A classifier partitions the observation space $X$ into class-labelled regions $X_i$, $i = 1, \ldots, |Y|$.

Classification determines to which region $X_i$ an observed feature vector $x$ belongs.

Borders between regions are called decision boundaries.

[Figure: several possible arrangements of the class regions $X_1, \ldots, X_4$; in one of them $X_4$ is the background.]

A multi-class decision strategy

Discriminant functions $f_i(x)$ should ideally have the property:

$$f_i(x) > f_j(x) \quad \text{for } x \in \text{class } i, \; i \neq j.$$

[Figure: the values $f_1(x), f_2(x), \ldots, f_{|Y|}(x)$ computed from the input $x$ feed a max block that outputs $y$.]

Strategy: $j^* = \operatorname{argmax}_j f_j(x)$

However, it is not easy to find such discriminant functions. Most good classifiers are dichotomic (e.g., perceptron, SVM).

The usual solution: One-against-All classifier, One-against-One classifier.

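Linear discriminant function q(x)

$f_j(x) = \langle w_j, x \rangle + b_j$, where $\langle \cdot, \cdot \rangle$ denotes a scalar product.

A strategy $j^* = \operatorname{argmax}_j f_j(x)$ divides $X$ into $|Y|$ convex regions.

[Figure: the observation space divided into six convex regions labelled y=1, ..., y=6.]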
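The argmax strategy over linear discriminant functions can be sketched in a few lines. This is a minimal illustration, not part of the original slides: the function name `classify` and the three-class weights and offsets are hypothetical values chosen only for the example.

```python
def dot(u, v):
    """Scalar product <u, v> of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def classify(x, weights, offsets):
    """Return the 1-based class index j maximising f_j(x) = <w_j, x> + b_j."""
    scores = [dot(w, x) + b for w, b in zip(weights, offsets)]
    return max(range(len(scores)), key=scores.__getitem__) + 1


# Hypothetical three-class problem in a 2-D observation space.
weights = [(1.0, 0.0), (-1.0, 0.0), (0.0, 1.0)]
offsets = [0.0, 0.0, -0.5]

label_a = classify((2.0, 0.5), weights, offsets)   # f = (2.0, -2.0, 0.0)
label_b = classify((-1.0, 0.0), weights, offsets)  # f = (-1.0, 1.0, -0.5)
label_c = classify((0.1, 3.0), weights, offsets)   # f = (0.1, -0.1, 2.5)
```

Each region is an intersection of half-spaces of the form $f_j(x) \geq f_i(x)$, which is why the regions are convex.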

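Dichotomy, two classes only

$|Y| = 2$, i.e., two hidden states (typically also classes)

$$q(x) = \begin{cases} y = 1, & \text{if } \langle w, x \rangle + b \geq 0, \\ y = 2, & \text{if } \langle w, x \rangle + b < 0. \end{cases}$$

[Figure: Perceptron by F. Rosenblatt, 1957. The inputs $x_1, \ldots, x_n$ with weights $w_1, \ldots, w_n$ and a constant input 1 with the threshold $b$ enter a summation unit, followed by an activation function that produces the output $y$.]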

Learning linear classifiers

The aim of learning is to estimate the classifier parameters $w_i$, $b_i$ for all $i$.

The learning algorithms differ by

The character of the training set:

1. A finite set consisting of individual observations and hidden states, i.e., $\{(x_1, y_1), \ldots, (x_L, y_L)\}$.

2. Infinite sets described by Gaussian distributions.

The learning task formulation.


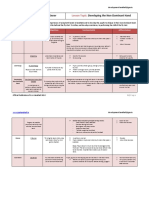

Learning task formulations

For finite training sets

Empirical risk minimization:

The true risk is approximated by

$$R_{\mathrm{emp}}(q(x, \Theta)) = \frac{1}{L} \sum_{i=1}^{L} W(q(x_i, \Theta), y_i),$$

where $W$ is a penalty.

Learning is based on the empirical minimization principle

$$\Theta^* = \operatorname{argmin}_{\Theta} R_{\mathrm{emp}}(q(x, \Theta)).$$

Examples of learning algorithms: Perceptron, Back-propagation.

Structural risk minimization:

The true risk is approximated by a guaranteed risk (a regularizer securing an upper bound of the risk is added to the empirical risk; Vapnik-Chervonenkis theory of learning).

Example: Support Vector Machine (SVM).
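As a small worked example of the empirical risk, the sketch below averages a penalty over a finite training set for a linear dichotomy. It assumes a 0/1 penalty (the slides leave $W$ unspecified); the names `empirical_risk` and `zero_one` and the four training pairs are made up for illustration.

```python
def dot(u, v):
    """Scalar product <u, v>."""
    return sum(a * b for a, b in zip(u, v))


def q(x, w, b):
    """Linear dichotomy: class 1 if <w, x> + b >= 0, otherwise class 2."""
    return 1 if dot(w, x) + b >= 0 else 2


def zero_one(decision, y):
    """Assumed penalty W: 0 for a correct decision, 1 for an error."""
    return 0 if decision == y else 1


def empirical_risk(samples, w, b, penalty):
    """Average penalty of the classifier q over the training set."""
    return sum(penalty(q(x, w, b), y) for x, y in samples) / len(samples)


# Hypothetical training set of (observation, hidden state) pairs.
samples = [((2.0, 1.0), 1), ((1.0, 3.0), 1), ((-1.0, -2.0), 2), ((0.5, -0.5), 2)]

risk_a = empirical_risk(samples, (1.0, 0.0), 0.0, zero_one)   # one of four wrong
risk_b = empirical_risk(samples, (1.0, 1.0), -0.1, zero_one)  # all four correct
```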

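Perceptron learning: Task formulation

Input: $T = \{(x_1, y_1), \ldots, (x_L, y_L)\}$, $y_i \in \{1, 2\}$, $i = 1, \ldots, L$, $\dim(x_i) = n$.

Output: a weight vector $w$ and an offset $b$ satisfying, for all $j \in \{1, \ldots, L\}$:

$\langle w, x_j \rangle + b \geq 0$ for $y_j = 1$,

$\langle w, x_j \rangle + b < 0$ for $y_j = 2$.

[Figure: the separating hyperplane $\langle w, x \rangle + b = 0$ in $X^n$.]

The task can be formally transcribed to a single inequality $\langle w', x'_j \rangle \geq 0$ by embedding it into an $(n+1)$-dimensional space, where $w' = [w \;\, b]$,

$$x' = \begin{cases} \phantom{-}[x \;\, 1] & \text{for } y = 1, \\ -[x \;\, 1] & \text{for } y = 2. \end{cases}$$

We drop the primes and go back to the $w$, $x$ notation.

[Figure: the hyperplane $\langle w, x \rangle = 0$ passing through the origin of $X^{n+1}$.]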
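The embedding into the $(n+1)$-dimensional space can be written as a short helper. This is a sketch with hypothetical names (`embed`, `w_prime`) and made-up data; it only shows how class-2 observations are extended by 1 and then negated.

```python
def embed(samples):
    """Map (x, y) pairs to the vectors x' = [x 1] (y = 1) or -[x 1] (y = 2)."""
    embedded = []
    for x, y in samples:
        extended = list(x) + [1.0]           # append the constant coordinate
        sign = 1.0 if y == 1 else -1.0       # flip observations of class 2
        embedded.append([sign * v for v in extended])
    return embedded


# Hypothetical training pairs and a candidate w' = [w b].
samples = [((2.0, 1.0), 1), ((-1.0, -2.0), 2)]
X = embed(samples)
w_prime = [1.0, 1.0, -0.5]

# After the embedding, one inequality <w', x'> >= 0 covers both classes.
margins = [sum(a * b for a, b in zip(w_prime, x)) for x in X]
```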

Perceptron learning: the algorithm, 1957

Input: $T = \{x_1, x_2, \ldots, x_L\}$.

Output: a weight vector $w$.

The Perceptron algorithm (F. Rosenblatt):

1. $w_1 = 0$.

2. A wrongly classified observation $x_j$ is sought, i.e., $\langle w_t, x_j \rangle < 0$, $j \in \{1, \ldots, L\}$.

3. If there is no misclassified observation then the algorithm terminates, otherwise $w_{t+1} = w_t + x_j$.

4. Go to 2.

[Figure: Perceptron update rule. The misclassified observation $x_t$ is added to $w_t$ to give $w_{t+1}$; the hyperplane $\langle w_t, x \rangle = 0$ passes through the origin 0.]
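The four steps translate almost directly into code. The sketch below makes two assumptions that are not in the slides: an observation counts as misclassified when $\langle w_t, x_j \rangle \leq 0$ (so that the zero initial vector triggers updates and the result satisfies the strict inequality), and a hypothetical `max_updates` cap guards against non-separable input. The data are made-up vectors already embedded in the $(n+1)$-dimensional space.

```python
def dot(u, v):
    """Scalar product <u, v>."""
    return sum(a * b for a, b in zip(u, v))


def perceptron(X, max_updates=10_000):
    """Return (w, number of updates) with <w, x> > 0 for every x in X."""
    w = [0.0] * len(X[0])                                   # step 1
    for updates in range(max_updates):
        # Step 2: look for a misclassified observation (assumed test: <= 0).
        wrong = next((x for x in X if dot(w, x) <= 0), None)
        if wrong is None:                                   # step 3: done
            return w, updates
        w = [wi + xi for wi, xi in zip(w, wrong)]           # step 3: update
    raise RuntimeError("no separating vector found within max_updates")


# Hypothetical, linearly separable, already embedded observations.
X = [[2.0, 1.0, 1.0], [1.0, 3.0, 1.0], [1.0, 2.0, -1.0], [-0.5, 0.5, -1.0]]
w, updates = perceptron(X)
```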

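Novikoff theorem, 1962

Proves that the Perceptron algorithm converges in a finite number of steps if the solution exists.

Let $\overline{X}$ denote the convex hull of the points (set of observations) $X$.

Let $D = \max_i |x_i|$, $m = \min_{x \in \overline{X}} |x| > 0$.

Novikoff theorem:

If the data are linearly separable then there exists a number $t^* \leq \frac{D^2}{m^2}$ such that the vector $w_{t^*}$ satisfies

$$\langle w_{t^*}, x_j \rangle > 0, \quad \forall j \in \{1, \ldots, L\}.$$

What if the data are not separable?

How to terminate the perceptron learning?

[Figure: the convex hull of the observations; $D$ is the distance from the origin to its farthest point, $m$ the distance from the origin to its closest point.]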

Idea of the Novikoff theorem proof

Let us express bounds for $|w_t|^2$.

Upper bound:

$$|w_{t+1}|^2 = |w_t + x_t|^2 = |w_t|^2 + \underbrace{2 \langle x_t, w_t \rangle}_{\leq 0} + |x_t|^2 \leq |w_t|^2 + |x_t|^2 \leq |w_t|^2 + D^2.$$

$$|w_1|^2 = 0, \quad |w_2|^2 \leq D^2, \quad |w_3|^2 \leq 2 D^2, \quad \ldots, \quad |w_{t+1}|^2 \leq t\, D^2, \quad \ldots$$

Lower bound: is given analogically,

$$|w_{t+1}|^2 > t^2 m^2.$$

Solution: $t^2 m^2 \leq t\, D^2 \;\Rightarrow\; t \leq \frac{D^2}{m^2}$.

[Figure: $|w_t|^2$ plotted against $t$.]
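The lower bound is only stated on the slide. One standard way to obtain it is sketched here, under the assumption that $w^*$ denotes the unit vector pointing from the origin to the closest point of the convex hull, so that $\langle w^*, x_j \rangle \geq m$ for every $j$ (the symbol $w^*$ is notation introduced for this sketch only). The argument yields the non-strict form of the bound, which is all the final step needs.

```latex
\begin{align*}
\langle w_{t+1}, w^* \rangle
  &= \langle w_t, w^* \rangle + \langle x_t, w^* \rangle
   \geq \langle w_t, w^* \rangle + m, \\
\langle w_{t+1}, w^* \rangle
  &\geq t\, m
   \quad \text{(by induction, starting from } w_1 = 0\text{)}, \\
|w_{t+1}|
  &\geq \langle w_{t+1}, w^* \rangle \geq t\, m
   \quad \text{(Cauchy-Schwarz with } |w^*| = 1\text{)}, \\
|w_{t+1}|^2 &\geq t^2 m^2 .
\end{align*}
```

The squared norm therefore grows at least quadratically in $t$ while the upper bound grows only linearly, which is possible only for $t \leq D^2 / m^2$.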

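An alternative training algorithm, Kozinec (1973)

Input: $T = \{x_1, x_2, \ldots, x_L\}$.

Output: a weight vector $w^*$.

1. $w_1 = x_j$, i.e., any observation.

2. A wrongly classified observation $x_t$ is sought, i.e., $\langle w_t, x_j \rangle < b$, $j \in J$.

3. If there is no wrongly classified observation then the algorithm finishes, otherwise

$$w_{t+1} = (1 - k)\, w_t + x_t\, k, \quad k \in \mathbb{R},$$

where

$$k = \operatorname{argmin}_k |(1 - k)\, w_t + x_t\, k|.$$

4. Go to 2.

[Figure: Kozinec update. $w_{t+1}$ is the point closest to the origin 0 on the line through $w_t$ and $x_t$; the hyperplane $\langle w_t, x \rangle = 0$ and the level $b$ are marked.]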
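The minimisation over $k$ has a closed form: setting the derivative of $|(1-k)\, w_t + k\, x_t|^2$ to zero gives $k = \langle w_t, w_t - x_t \rangle / |w_t - x_t|^2$. The sketch below uses it. It assumes homogeneous (embedded) observations, treats $\langle w_t, x \rangle \leq 0$ as the misclassification test (an assumption of this example), and expects linearly separable data; `max_updates` is a hypothetical safety cap.

```python
def dot(u, v):
    """Scalar product <u, v>."""
    return sum(a * b for a, b in zip(u, v))


def kozinec(X, max_updates=10_000):
    """Return (w, number of updates) with <w, x> > 0 for every x in X."""
    w = list(X[0])                                   # step 1: any observation
    for updates in range(max_updates):
        # Step 2: look for a misclassified observation (assumed test: <= 0).
        x = next((x for x in X if dot(w, x) <= 0), None)
        if x is None:                                # step 3: finished
            return w, updates
        d = [wi - xi for wi, xi in zip(w, x)]        # w - x
        k = dot(w, d) / dot(d, d)                    # minimiser of |(1-k)w + kx|
        w = [(1 - k) * wi + k * xi for wi, xi in zip(w, x)]
    raise RuntimeError("no separating vector found within max_updates")


# Hypothetical, linearly separable, already embedded observations.
X = [[2.0, 1.0, 1.0], [1.0, 3.0, 1.0], [1.0, 2.0, -1.0], [-0.5, 0.5, -1.0]]
w, updates = kozinec(X)
```

Because $\langle w_t, x_t \rangle \leq 0$ for the chosen observation, the minimiser always lies in $(0, 1]$, so $w_{t+1}$ stays on the segment between $w_t$ and $x_t$ and hence inside the convex hull.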

Perceptron learning as an optimization problem (1)

Perceptron algorithm, batch version, handling non-separability, another perspective:

Input: $T = \{x_1, x_2, \ldots, x_L\}$.

Output: a weight vector $w$ minimizing

$$J(w) = |\{x \in X : \langle w, x \rangle < 0\}|$$

or, equivalently,

$$J(w) = \sum_{x \in X :\, \langle w, x \rangle < 0} 1.$$

What would the most common optimization method, gradient descent, perform?

$$w_t = w - \eta\, \nabla J(w).$$

The gradient of $J(w)$ is either 0 or undefined. Gradient minimization cannot proceed.

Perceptron learning as an optimization problem (2)

Let us redefine the cost function:

$$J_p(w) = \sum_{x \in X :\, \langle w, x \rangle < 0} -\langle w, x \rangle.$$

$$\nabla J_p(w) = \frac{\partial J}{\partial w} = \sum_{x \in X :\, \langle w, x \rangle < 0} -x.$$

The Perceptron algorithm is a gradient descent method for $J_p(w)$.

Learning by empirical risk minimization is just an instance of an optimization problem.

Either gradient minimization (backpropagation in neural networks) or convex (quadratic) minimization (called convex programming in the mathematical literature) is used.
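The link between the perceptron criterion and the update rule can be checked numerically. In this sketch the function names and the data are hypothetical, and the step size `eta = 1.0` is an assumption; with it, one gradient step adds the sum of the misclassified observations to $w$.

```python
def dot(u, v):
    """Scalar product <u, v>."""
    return sum(a * b for a, b in zip(u, v))


def perceptron_criterion(w, X):
    """J_p(w): sum of -<w, x> over the misclassified observations."""
    return sum(-dot(w, x) for x in X if dot(w, x) < 0)


def perceptron_gradient(w, X):
    """Gradient of J_p: sum of -x over the misclassified observations."""
    grad = [0.0] * len(w)
    for x in X:
        if dot(w, x) < 0:
            grad = [g - xi for g, xi in zip(grad, x)]
    return grad


def descent_step(w, X, eta=1.0):
    """One gradient descent step w - eta * grad J_p(w)."""
    grad = perceptron_gradient(w, X)
    return [wi - eta * g for wi, g in zip(w, grad)]


# Hypothetical embedded observations; only the last one is misclassified by w.
X = [[2.0, 1.0, 1.0], [1.0, 3.0, 1.0], [1.0, 2.0, -1.0], [-0.5, 0.5, -1.0]]
w = [2.0, 1.0, 1.0]

cost = perceptron_criterion(w, X)
grad = perceptron_gradient(w, X)
w_next = descent_step(w, X)      # equals w + x for the misclassified x
```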

Perceptron algorithm

Classifier learning, non-separable case, batch version

Input: $T = \{x_1, x_2, \ldots, x_L\}$.

Output: a weight vector $w^*$.

1. $w_1 = 0$, $E = |T| = L$, $w^* = 0$.

2. Find all misclassified observations $X^- = \{x \in X : \langle w_t, x \rangle < 0\}$.

3. If $|X^-| < E$ then $E = |X^-|$; $w^* = w_t$, $t_{lu} = t$.

4. If $tc(w^*, t, t_{lu})$ then terminate, else $w_{t+1} = w_t + \eta_t \sum_{x \in X^-} x$.

5. Go to 2.

The algorithm converges with probability 1 to the optimal solution.

The convergence rate is not known.

The termination condition $tc(\cdot)$ is a complex function of the quality of the best solution, the time since the last update $t - t_{lu}$, and the requirements on the solution.
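A minimal sketch of the batch version follows. The termination condition $tc(\cdot)$ is not specified in the slides, so a deliberately simple stand-in is assumed here: stop when no observation is misclassified, when the best solution has not improved for `patience` iterations, or after `max_iter` iterations. Those two parameters, the constant step `eta`, and the `<= 0` misclassification test are all assumptions of this example.

```python
def dot(u, v):
    """Scalar product <u, v>."""
    return sum(a * b for a, b in zip(u, v))


def batch_perceptron(X, eta=1.0, max_iter=1000, patience=100):
    """Return (best weight vector found, its number of misclassifications)."""
    w = [0.0] * len(X[0])                         # step 1
    best_w, best_err, t_lu = list(w), len(X), 0
    for t in range(1, max_iter + 1):
        wrong = [x for x in X if dot(w, x) <= 0]  # step 2 (assumed test: <= 0)
        if len(wrong) < best_err:                 # step 3: remember the best
            best_w, best_err, t_lu = list(w), len(wrong), t
        # Step 4: assumed stand-in for the termination condition tc(.).
        if best_err == 0 or t - t_lu >= patience:
            break
        for x in wrong:                           # w += eta * sum of wrong x
            w = [wi + eta * xi for wi, xi in zip(w, x)]
    return best_w, best_err


# Hypothetical embedded data: a separable set, and the same set with one
# contradictory observation added (the negation of the first vector).
X_sep = [[2.0, 1.0, 1.0], [1.0, 3.0, 1.0], [1.0, 2.0, -1.0], [-0.5, 0.5, -1.0]]
X_non = X_sep + [[-2.0, -1.0, -1.0]]

w_sep, err_sep = batch_perceptron(X_sep)
w_non, err_non = batch_perceptron(X_non)
```

Keeping the best vector seen so far is what makes the procedure usable on non-separable data, where the current $w_t$ may keep oscillating.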

The optimal separating plane and the closest point to the convex hull

The problem of the optimal separation by a hyperplane

$$w^* = \operatorname{argmax}_w \min_j \left\langle \frac{w}{|w|}, x_j \right\rangle \qquad (1)$$

can be converted to a search for the point of the convex hull (denoted by the overline) that is closest to the origin

$$x^* = \operatorname{argmin}_{x \in \overline{X}} |x|.$$

It holds that $x^*$ also solves problem (1).

Recall that the classifier that maximizes the separation minimizes the structural risk $R_{str}$.

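The convex hull, an illustration

[Figure: the convex hull $\overline{X}$ with the label $w^* = m$.]

$$\underbrace{\min_j \left\langle \frac{w}{|w|}, x_j \right\rangle}_{\text{lower bound}} \leq m \leq \underbrace{|w|}_{\text{upper bound}}, \qquad w \in \overline{X}.$$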
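The two bounds on $m$ are cheap to evaluate for any point of the convex hull. The sketch below does so for the centroid of a made-up data set (the centroid is just one convenient point of the hull; the function names are hypothetical).

```python
import math


def dot(u, v):
    """Scalar product <u, v>."""
    return sum(a * b for a, b in zip(u, v))


def norm(u):
    """Euclidean length |u|."""
    return math.sqrt(dot(u, u))


def margin(w, X):
    """Lower bound: min_j <w / |w|, x_j>."""
    return min(dot(w, x) for x in X) / norm(w)


# Hypothetical embedded observations.
X = [[2.0, 1.0, 1.0], [1.0, 3.0, 1.0], [1.0, 2.0, -1.0], [-0.5, 0.5, -1.0]]

# The centroid is a convex combination of the observations, so it lies in
# the convex hull and the inequality lower <= m <= upper applies to it.
w = [sum(column) / len(X) for column in zip(*X)]
lower, upper = margin(w, X), norm(w)
```

The gap `upper - lower` shrinks to zero as $w$ approaches the closest point of the hull, which is what the ε-solution exploits as a stopping test.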

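ε-solution

The aim is to speed up the algorithm.

The allowed uncertainty $\varepsilon$ is introduced.

$$|w_t| - \min_j \left\langle \frac{w_t}{|w_t|}, x_j \right\rangle \leq \varepsilon$$

[Figure: the update $w_{t+1} = (1 - y)\, w_t + y\, x_t$ drawn relative to the origin.]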

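Kozinec and the ε-solution

The second step of the Kozinec algorithm is modified to:

A wrongly classified observation $x_t$ is sought, i.e.,

$$|w_t| - \min_j \left\langle \frac{w_t}{|w_t|}, x_j \right\rangle \geq \varepsilon$$

[Figure: $|w_t|$ plotted against $t$, with the levels $m$ and 0 marked.]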
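Combining the Kozinec update with the ε test gives the following sketch. Several choices here are assumptions of the example rather than statements from the slides: the observation with the smallest scalar product is taken as $x_t$, the step $k$ is clipped to at most 1 so that $w$ stays inside the convex hull, the data are linearly separable, and `max_updates` is a hypothetical safety cap.

```python
import math


def dot(u, v):
    """Scalar product <u, v>."""
    return sum(a * b for a, b in zip(u, v))


def norm(u):
    """Euclidean length |u|."""
    return math.sqrt(dot(u, u))


def kozinec_eps(X, eps, max_updates=100_000):
    """Return (w, updates) with |w| - min_j <w/|w|, x_j> < eps."""
    w = list(X[0])                                  # any observation
    for updates in range(max_updates):
        length = norm(w)
        x = min(X, key=lambda v: dot(w, v))         # worst observation
        if length - dot(w, x) / length < eps:       # eps test no longer fires
            return w, updates
        d = [wi - xi for wi, xi in zip(w, x)]       # w - x
        k = min(1.0, dot(w, d) / dot(d, d))         # clipped line minimiser
        w = [(1 - k) * wi + k * xi for wi, xi in zip(w, x)]
    raise RuntimeError("eps-solution not reached within max_updates")


# Hypothetical, linearly separable, already embedded observations.
X = [[2.0, 1.0, 1.0], [1.0, 3.0, 1.0], [1.0, 2.0, -1.0], [-0.5, 0.5, -1.0]]
w, updates = kozinec_eps(X, eps=0.01)
gap = norm(w) - min(dot(w, x) for x in X) / norm(w)
```

A larger `eps` stops earlier at the price of a margin that may be up to `eps` below the optimum.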


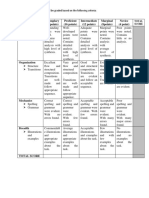

Learning task formulation for infinite training sets

The generalization of Anderson's task by M.I. Schlesinger (1972) solves a quadratic optimization task.

It solves the learning problem for a linear classifier and two hidden states only.

It is assumed that the class-conditional distributions $p_{X|Y}(x \mid y)$ corresponding to both hidden states are multi-dimensional Gaussian distributions.

The mathematical expectation $\mu_y$ and the covariance matrix $\sigma_y$, $y = 1, 2$, of these probability distributions are not known.

The Generalized Anderson task (abbreviated GAndersonT) is an extension of the Anderson-Bahadur task (1962), which solved the problem when each of the two classes is modelled by a single Gaussian.

GAndersonT illustrated in the 2D space

Illustration of the statistical model, i.e., a mixture of Gaussians.

[Figure: the classes y=1 and y=2, Gaussian components with means $\mu_1, \ldots, \mu_5$, and the classifier $q$.]

The parameters of the individual Gaussians $\mu_i$, $\sigma_i$, $i = 1, 2, \ldots$ are known.

Weights of the Gaussian components are unknown.
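The slides do not spell out the criterion being optimised. Assuming the usual reading of the task, in which a linear classifier is judged by the largest misclassification probability over the Gaussian components (so that the unknown weights do not matter), that quantity can be evaluated in closed form: for $x \sim N(\mu, \sigma)$ the value $\langle w, x \rangle + b$ is a one-dimensional Gaussian with mean $\langle w, \mu \rangle + b$ and variance $w^\top \sigma\, w$. The sketch below only evaluates this criterion for a given $(w, b)$; it does not solve the optimisation, and all names and numbers are hypothetical.

```python
import math


def dot(u, v):
    """Scalar product <u, v>."""
    return sum(a * b for a, b in zip(u, v))


def component_error(w, b, mu, sigma, y):
    """Probability that a sample of N(mu, sigma) from class y is misclassified."""
    mean = dot(w, mu) + b
    size = len(w)
    var = sum(w[i] * sigma[i][j] * w[j] for i in range(size) for j in range(size))
    # P(<w, x> + b < 0) for the one-dimensional Gaussian N(mean, var).
    below = 0.5 * math.erfc(mean / math.sqrt(2.0 * var))
    return below if y == 1 else 1.0 - below


def worst_case_error(w, b, components):
    """Largest misclassification probability over all components."""
    return max(component_error(w, b, mu, sigma, y) for mu, sigma, y in components)


# Hypothetical components: (mean, covariance matrix, class).
identity = [[1.0, 0.0], [0.0, 1.0]]
components = [
    ((2.0, 0.0), identity, 1),
    ((3.0, 1.0), identity, 1),
    ((-2.0, 0.0), identity, 2),
]
worst = worst_case_error((1.0, 0.0), 0.0, components)
```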
