British Journal of Educational Technology doi:10.1111/j.1467-8535.2009.01022.

Vol 42 No 2 2011

251–265

The use of mobile technology for work-based assessment: the student experience

Ceridwen Coulby, Scott Hennessey, Nancy Davies and Richard Fuller

Ceridwen Coulby is the Staff Development Officer in the Leeds Institute of Medical Education at the University of Leeds, with research interests in mobile learning and assessment. Email: c.coulby@leeds.ac.uk; Scott Hennessey is a learning technologist at the Leeds Institute of Medical Education at the University of Leeds. His work centres around researching and implementing applications for mobile technologies in higher education. Email: s.hennessey@leeds.ac.uk; Nancy Davies is a learning technologist at the Leeds Institute of Medical Education at the University of Leeds with research interests in technology enhanced learning. Email: n.e.davies@leeds.ac.uk; Richard Fuller is Chair of the MBChB management committee at the Leeds Institute of Medical Education at the University of Leeds and a Consultant Physician. His role also includes running the final year programme. Dr Fuller has research interests in assessment (workplace and OSCEs), transitions into practice and curriculum development. Email: r.fuller@leeds.ac.uk; All are based at Leeds Institute for Medical Education, School of Medicine, University of Leeds, Level 7 Worsley Building, Clarendon Way, Leeds, LS2 9NL. Please use lead author telephone contact only.

Abstract

This paper outlines a research project conducted at Leeds University School of Medicine with the Assessment & Learning in Practice Settings Centre for Excellence in Teaching and Learning, a collaboration between the Universities of Leeds, Huddersfield, Bradford, Leeds Metropolitan University and the University of York St John. The research is a proof of concept, examining the impact of delivering competency-based assessment via personal digital assistants (PDAs) amongst a group of final year undergraduate medical students. This evaluation reports a positive student experience of mobile technology for assessment, concluding that overall the students found completing assessments using a PDA straightforward and that the structured format of the assessment resulted in an increased, improved level of feedback, allowing students to improve their skills during the placement. A relationship between using the PDA for learning and setting goals for achievement was clearly demonstrated.

© 2009 The Authors. British Journal of Educational Technology © 2009 Becta. Published by Blackwell Publishing, 9600 Garsington Road, Oxford OX4 2DQ, UK and 350 Main Street, Malden, MA 02148, USA.

Introduction

Using mobile technology for teaching and learning is a rapidly evolving area of educational research (Collins, 1996; Frohberg, 2002; Preece, 2000; Vavoula, Pachler & Kukulska-Hulme, 2009). The current research focus is in general evaluative, with a variety of practitioners exploring the delivery, methodology and feasibility of mobile device usage in a wide range of education contexts, including the building of information technology (IT) infrastructure, technical support and other resources required (Ally, 2009; Kukulska-Hulme & Traxler, 2005). Medical education research reflects this wider field, with a significant amount of mobile learning research focusing on feasibility combined with data on user experience (Dearnley, Haigh & Fairhall, 2007; Fisher & Baird, 2006; Garrett & Jackson, 2006; Shim & Viswanathan, 2007; Triantafillou, Georgiadou & Economides, 2008). These studies outline the type of infrastructure used to support mobile learning (m-learning) and the issues encountered when testing systems, and report positive user experiences of the use of mobile technology for learning and workload management. A small amount of research in health care education has focused on the benefits of using technology for assessment, suggesting heightened understanding of assessment method by students and a link with improvement in the quality of students' work (McGuire, 2005). In contrast, Kneebone and Brenton (2005) found the use of personal digital assistants (PDAs) for assessed written reflection too slow and time consuming, reducing the quality of work. Several studies have identified the need to work with industry to create mobile devices specifically suited to learning (Kramer, 2009; Naismith, Lonsdale, Vavoula & Sharples, 2004), and new products on the market such as the iPhone are indicative of the progress made in this area so far. However, appropriate use of m-learning is key to the emerging attitudes of staff and students to more general application.
Whilst cultural change is necessary for m-learning to become mainstream within Higher Education Institutions overall, within the health and social care environment a major paradigm shift may be needed to accept this type of technology as an everyday part of learning amongst staff, students and patients. This is due to the somewhat unique curriculum design of health and social care courses, where much of the teaching is delivered in work-based settings by educators not employed by the University, and students are constantly observed by the public whilst learning. Previous National Health Service (NHS) policies banning mobile phones in hospitals and other clinical areas tend to result in a taboo attitude towards the use of mobile devices amongst health care practitioners and patients. This attitude is heightened by perceptions amongst staff and patients that students using mobile devices in clinical settings are doing so for personal use, such as texting or messaging their friends, rather than for assessment and accessing reference material; mobile devices are not associated with learning (Koskimaa et al, 2007).

Why mobile learning?

Despite the challenges (and barriers) to m-learning in clinical contexts, we reasoned that there are still clear benefits to warrant exploration in this study. When students are in the workplace it is often difficult to know what they are learning and if they are improving. Work-based placements are typically assessed summatively at conclusion, with little formal formative assessment taking place prior to this.

As a result, students may not maximise their learning opportunities, or focus on improving areas of weakness when they have the opportunity to do so. Mobile assessments allow tutors to review students' progress remotely, and allow students to receive feedback more frequently. Much has been written about the "net generation" (Tapscott, 1998) and "digital natives" (Prensky, 2001b) as descriptions of young people who have grown up surrounded by technology, and about the need to adapt teaching and learning to their experience and abilities. Whilst engaging with new technologies to ensure a modern and interesting learning experience is important for competitive growth in the University sector, whether students actually achieve better qualifications as a result of these technologies is debatable, and whether students are in fact confident in the use of technology for education remains questionable (Ramanau, Sharpe & Benfield, 2008). The findings of this paper, though based on too small a sample to be considered representative of all students, seem to present a challenge to the concept of students as digital natives and potentially support the findings of Ramanau, Sharpe and Benfield. This may be due to the attributes of a medical student population (increasingly a diverse student group, from a wide range of backgrounds due to the widening participation agenda) or simply that not all young people are in fact digital natives.

Context

In this project the students were asked to complete competency assessments (Mini-Clinical Evaluation Exercise [CEX]) whilst on a work-based placement. Mini-CEX is a validated generic tool used to undertake assessment of core competencies within health care professions such as communication, physical examination, reasoning and practical skills. These assessments are most often used in Medicine in postgraduate professional portfolios (Holmboe, 2001). The participant identifies an opportunity to conduct an assessment with a clinical supervisor. Consent is sought from any patient examined as part of the assessment and the student then conducts the examination of the patient whilst a supervisor/assessor evaluates their performance. The evaluation is conducted using a form comprising rater scales (1–9) to assess competence in various skills such as organisation, professionalism and clinical reasoning, followed by an overall global competence rating. A free text box at the end of the form allows comments and action planning (see Figure A1 in the appendix for an example of the form used in this study).

Mini-CEX is well established in postgraduate Medicine, often delivered via the Internet or work-based PC (Durning, 2002; Holmboe, 2001; Norcini, Blank, Duffy & Fortna, 2003), and more recently the practice of using mini-CEX with undergraduate students has been developed (Hauer, 2000; Kogan, Bellin & Shea, 2002). Work-based, formative assessment (mini-CEX) allows the student to identify areas of weakness and improve these by undergoing assessment and receiving feedback on their performance. This type of self-directed, personalised learning is attractive, as work-based assessment of competence is of key practical use to medical students.

Based on our assumptions of the need to generate a method of assessment that would capture the needs of our "net generation" and provide grounded feedback, we hypothesised that the use of mini-CEX via PDAs may achieve these goals. To assist the exploration of this hypothesis, we reviewed the small amount of research within health disciplines into the use of PDAs or other forms of mobile technology to deliver assessment (Axelson, Wardh, Strender & Nilsson, 2007; Finlay, Norman, Stolberg, Weaver & Keane, 2006; Kneebone et al, 2008). Work in Wisconsin with 3rd year medical students tested feasibility and user satisfaction with a PDA-based mini-CEX form (Torre, Simpson, Elnicki, Sebastian & Holmboe, 2007). Whilst the study found that students, faculty and residents were highly satisfied with the device to undertake assessment, the very small number of assessments undertaken precluded description of any incremental improvement in learning through using mini-CEX.

The aim of this project was to pilot the use of a mini-CEX delivered via PDAs to support learning within an extended period of recapitulatory study for 13 final year students who had failed their final qualifying examinations. This period of recapitulatory study would take place in a work-based placement. On completion of the placement, students would retake their practical, final exams. Previous research (Draper, Cargill & Cutts, 2002; Holmboe et al, 2004; Nicol & MacFarlane-Dick, 2006; Sadler, 1998) has shown the influence of formative assessment and feedback for learning, and identified that the actual amount of feedback that students receive when undertaking work placements is very little, and can be of a very poor quality (Black & Wiliam, 1998; Norcini & Burch, 2007).
We felt that the recapitulatory nature of the placement was an excellent opportunity to use the mini-CEX assessment, as the extensive use of structured assessment over a 12-week period would provide students with considerable feedback on their performance, which is especially beneficial to underperforming students. The structured, formative nature of the assessment, combined with opportunistic learning opportunities captured by the PDA, would provide high quality and consistency of feedback, allowing students and staff to review student progress throughout the placement and shape the direction of their learning accordingly.

Methodology

We sought to explore attitudes and behaviours associated with the use of mobile technology in this delivery of formative assessment. Therefore a grounded theory approach was chosen to elicit an in-depth reflective response from the students and assessors regarding their experiences. Grounded theory mirrors much of the inductive nature of qualitative research, developing concepts and themes that are grounded in the data as the research process evolves (Glaser & Strauss, 1968). By utilising existing theory as a base, we were able to develop a project that would build on existing good practice and provide a deeper analysis (Strauss & Corbin, 1990).

Initially, four of the 13 students due to undertake the placement were invited to try using the mobile devices and the mini-CEX forms. The outline of the project was explained. The students would be expected to conduct a minimum of eight mini-CEX assessments in four areas of competence (communication, physical examination, clinical reasoning and practical skills) over a 12-week remediation placement. The students were to conduct at least two assessments in each area of competence. All assessments would be formative in nature. The placement would be summatively assessed by case-based discussion (a presentation by the student of a particular patient's treatment, care planning, etc) as per routine practice. These four students reported that the project was workable, so a training session was arranged for the entire cohort of 13 students a week later.

At this training session the students were issued with a T-Mobile Vario 2 Mobile Digital Assistant device, loaded with Windows Mobile 5 and mForms (software used to create mini-CEX forms). It included an unlimited data connection with a fair use policy of 1 Gb per month. The students were trained how to use the devices and the mini-CEX forms, email access was provided and the project was outlined. Although some students were quite familiar with the concept of PDAs and would probably not have required much training to use the device (evidenced by several running ahead of the training), others were complete novices and needed considerable support. None of the students had encountered mForms previously, so a large proportion of the session was spent exploring how it worked and resolving initial problems. The initial face-to-face training was important to the success of the project, as we were aware that it would have been nearly impossible to train all the clinical staff that students might come into contact with. Therefore, it was important that students felt confident with the PDA, as they would need to explain the use of it to assessors. However, whilst we expected some assessors to need assistance with device usage, we did expect the majority of the assessors to be familiar with mini-CEX assessment, and many to have had experience of using this either via the Internet or work-based PC.
Students were told they would be expected to complete a feedback questionnaire at the end of the placement and attend a focus group. The questionnaire developed by the research team was open-ended and themed into sections, such as usability, assessment feasibility and future embedding, derived from our reading of current literature (see example of questions in Figure A2 of the Appendix). This questionnaire was disseminated via email to students after completion of summative assessments. Of the 13 students that completed the placement, 10 filled in the questionnaire and two did not fill in the questionnaire but attended the focus group. In addition to the questionnaire, the physical assessment data collated via the PDA was also subject to analysis by two researchers through a process of reading and coding themes within the free text feedback section of the mini-CEX tool. Each student's individual data was studied for patterns in grading throughout the placement (an example is given in Figure A3 of the Appendix).

As a further method of triangulation, we held a focus group attended by seven students. Having conducted initial coding, two members of the research team constructed three prompts (ice breakers, additional usage of the PDA and any differences in feedback received) based on questionnaire responses to initiate discussion at the group. Discussion was held around each of these themes and the research team took notes of the discussion content, noting down particular quotes verbatim. In triangulating the data collated from the assessor and student questionnaires, focus group and assessment data, we were able to construct individual student learning journeys, that is, to view which assessments the student had conducted and when, and how their scores and feedback had developed throughout the placement.

Study limitations

This study is small scale and based on the views of 13 medical students. The student experience of using PDAs for assessment may vary dependent on professional context and setting. The students involved are all recapitulatory students, in need of additional support following failure at final exams; therefore the views expressed are not representative of the whole student population. A larger scale study with an entire cohort of students is needed to assess the attitude towards this method of assessment as well as the feasibility of mobile assessment as a wider curriculum component in terms of infrastructure, technical support and staff training.

Findings

Across the questionnaire and focus group, a total of 12 of the 13 recapitulatory students gave their feedback. Seven were male and five female (age 22–26). Where appropriate, direct quotes from students have been used to illustrate findings. Analysis of the PDA data revealed that students conducted 196 assessments in total (median 15, range 8–25). Assessments were conducted by 80 assessors (41 were junior hospital doctors, 29 were Senior [Registrar/Consultant] and 10 were Allied Health Professions). The median time for a mini-CEX assessment plus feedback was 15 minutes for observation and 8 minutes for feedback, in keeping with that seen in early postgraduate practice (Norcini & Burch, 2007). Feedback in text form was available in 67% of the assessments completed. In 33% of cases, no comments were added.
The triangulated data was themed into the areas highlighted below.

Attitudes to device in clinical areas

The research team were interested in the response of others (clinicians, other hospital staff and patients) to the use of PDAs in a clinical setting, given potential concerns with confidentiality and infection control. Three students reported encountering a mixed response, with some colleagues very open to the technology and others resistant. The number of positive comments was surprising, and it became apparent that we had overestimated resistance considerably.

They really liked it. [It] enabled us to speak to other members of the team ... gave us a reason (Student 5).

The same student later comments that this was a key difference between this placement and previous placements,

We were able to have a reason to chat to the staff (Student 5).

It would appear that, instead of creating barriers, using such a device in this setting actually broke some down. The PDA acted as a catalyst for cultural change (Wenger, 1999), and the resulting dialogue can be regarded as a significant advantage to students when moving into a new clinical placement and working with an unfamiliar team.

Attitudes to learning using a PDA by students

All of the students found the PDA comparatively easy to use, reflecting the findings of Torre et al (2007) and Kenny, Park, Neste-Kenny, Burton and Meiers (2009), though three commented that they found it a bit fiddly to fill in. This comment was explored at the focus group and traced to the inputting of free text assessor comments. This reflects the findings of Kneebone and Brenton's study, where writing free text entries was abandoned midway through the project due to practicality. Rekkedal and Dye (2009) solved this problem by providing students with keyboards in addition to the PDA. In our study, the difficulty of inputting free text comments could have been resolved by the use of the audio function of the device. For future studies this was suggested as the most practical way of collecting feedback. These issues demonstrate the need for a review of how mobile technology is currently used (Kukulska-Hulme & Pettit, 2009) to ensure appropriate design of learning material.

The research team were interested to know what other functions of the device the students used to benefit themselves and their learning. Nine students used the device for accessing their email, three to access their diary and seven to access the Internet. This mirrors the usage reported by PDA users in Kukulska-Hulme and Pettit's (2009) study.
Four of the students commented that they would have accessed electronic resources such as the electronic British National Formulary and an e-version of clinical handbooks if these options had been available, echoing the comments of nurses in Garrett and Klein's (2008) study, where access to reference materials was cited as a significant factor in the adoption of PDAs for use in the workplace. Nine out of the ten students that completed the questionnaire said they would find it helpful to have such a device when qualified.

Attitudes to assessment and assessor behaviour by students

Two students did suggest that if the assessors had their own login, assessments could be completed later at the clinician's convenience. However, this option would have allowed for assessments to be left unfinished, and the chances of obtaining feedback would decrease considerably. Additionally, the timely delivery of feedback is seen as a critical factor in student assimilation of learning points (Kneebone et al, 2008). Another student commented,

the actual process of completing the assessments did help in terms of defining goals for us personally as well as giving our assessors an idea of the goals we were working towards so they knew how to feedback. I think it is a good idea to keep the assessments simple as they are in current format (Student 10).

Three students noticed that the junior doctors seemed more open to or familiar with the devices. This theme was strengthened when all students were asked whom they tended to ask to fill in assessments: over half specified junior doctors. Whilst the students were commenting on the junior doctors' familiarity with the devices, it is also possible that these junior doctors were more familiar with the mini-CEX itself, as many of them will have utilised these assessments in postgraduate education. All the students had to demonstrate how to use the device to their assessors. When asked how they felt about doing this, seven were happy to do so,

I had no problems doing this ... especially if it provided a means of obtaining useful feedback from them. And usually they did not require much prompting as it was fairly straight forward to use (Student 7).

Another added, "it was good for once teaching them something!" (Student 3). However, the effect of this practice was questioned,

[I] feel because I was needed to fill it in, it may have altered their feedback (Student 8).

Confidence versus digital nativity

All the students felt that the initial training was necessary and useful, and two felt reassured by having a helpline number to call. This positive response to training, and the attitude of the students observed in this pilot and our current larger study, indicate that in this case many students did not feel automatically comfortable with the technology, mirroring the experience of both Kneebone and Brenton (2005) and Garrett and Klein (2008), where user support was seen as key by both participants and researchers. These findings would seem to challenge the idea of all young people being digital natives, and the need to utilise technology enhanced learning (TEL) for engagement. The use of the PDA was intended to provide the instant feedback, and therefore gratification, supposedly sought by this generation of students (Prensky, 2001), in a format they would feel comfortable using. However, the lack of confidence observed in these studies indicates that caution is needed when using TEL, so that digital competence is not assumed.

Overall impact

Students were finally asked to compare this placement with their previous placements, and whilst two did not seem to be aware of a specific difference, over half found the placement more focused and goal orientated.

I think that with the device I felt much more focused towards particular goals and it was also a good way for me to keep an eye on how I was doing (Student 2).

Analysis of the actual assessment scores and feedback allows us to chart a journey of incremental improvement for each student as they progressed through the placement, echoing the students' own feelings of progression (Figure A3). These learning journeys are a powerful pedagogical tool for both faculty and students alike. The recognisable incremental improvement observed throughout is a convincing justification for the inclusion of formative assessment as part of curriculum assessment strategy, and personally provided the students with an improved feeling of confidence. Every student felt the formative assessments helped them to prepare for the summative presentation and final examinations, and all 13 students passed the final exam on the second attempt; however, larger scale research is needed to prove the effect of formative assessment on summative exam performance.

Discussion

Our study demonstrates a measurable improvement in student performance through the use of structured formative assessment, echoing the results seen in Kogan et al's (2002) study using mini-CEX with undergraduates. Our students reported a higher level of feedback on assessment during this placement, supporting the claim that feedback during placements can often be minimal (Black & Wiliam, 1998; Norcini & Burch, 2007). This reiterates the importance of formative assessment for learning (Draper et al, 2002; Sadler, 1998) as part of an overall curriculum strategy.

The use of the PDA for assessment delivery provided some interesting and unexpected findings. Within this study, students found that technology acted as an ice breaker, encouraging engagement amongst non-medical ward staff that historically the students had not encountered. In this way, the PDA as an object became a representation of the experience taking place, a process of reification. The introduction of a new device for learning into the community of practice facilitated an opportunity for the staff and students to open a dialogue and further develop shared meaning and experience (Wenger, 1999). This phenomenon could be a considerable benefit for future cohorts, increasing wider inter-professional working and learning. Unfortunately, there is a possibility that the new-found dialogue could be short lived, as it may stem from the novelty of devices that staff are not yet familiar with. Therefore, consolidation work must be undertaken to prevent this from happening.

In terms of the assessment process itself, one student suggested approaching patients for feedback via the PDA in addition to teacher/assessors. Whilst certain health and social care professions (such as social work) actively involve patients in teaching and curriculum design, using patients for assessment in the workplace is rare.
Involving patients in assessment is an ongoing stream of work within the Assessment & Learning in Practice Settings programme, and is increasingly apparent in postgraduate medical education.

Although previous work has identified that training is key to implementing mobile projects (Dearnley et al, 2007; Garrett & Klein, 2008; Kneebone & Brenton, 2005), perhaps the most surprising finding was the reluctance and anxiety on the part of some of the students towards using the device. These students were typically aged 23–24 years, and were a very heterogeneous group in terms of their IT nativity. Although this work naturally prevents generalisation, it would appear that whilst age and computer literacy are clearly linked, age is not the only variable that governs our response to new technologies, and personality traits and maturity in learning are likely to be equally important. The concept that students do not know how to use technology for learning purposes is also a possibility (Ramanau et al, 2008).

Conclusion

The use of PDA-based mini-CEX with final year recapitulatory students provided a key opportunity to undertake a large number of assessments within an intensive revision period and provided students with considerably more opportunity for feedback on their skills. Whilst some found the number of assessments to be done hard work, all of the students found the placement beneficial and enjoyable, and one commented it was the best placement they had been on,

I have really enjoyed this placement. As compared to other placements I think I had a clearer sense of my goals and what I wanted to achieve. I really felt supported by people on the team and felt learning opportunities were made easily available (Student 9).

The use of the PDA encourages immediate feedback that the students in our study found really useful; however, there is ongoing debate regarding the optimum time to provide students with feedback (Kneebone et al, 2008; McOwen, Kogan & Shea, 2008). The structure provided by the mini-CEX assessments seemed to encourage them to be more goal orientated. Additionally, the ownership the students had of their device was echoed in their learning: the students were much more aware of the self-directed nature of this learning experience and the opportunity for personalised learning. This theme is present in other m-learning research (Hartnell-Young & Vetere, 2008; Sharples, Taylor & Vavoula, 2005) and is a significant advantage to the use of mobile technology for learning. A high level of structure and direction is beneficial during a recapitulatory placement and the use of the mini-CEX and PDA provided both.

The study provides an initial proof of concept for those considering the use of mobile devices in work-based assessment or for providing students with a link to the University when on work placement. If mobile learning can be implemented within a clinical or workplace setting, then there is scope for implementation of mobile learning and assessment across a wide variety of educational situations. With the development of specialist academies (DCSF, 2007), set up to provide young people with the skills employers are seeking, the future of work-based learning is on the ascent. Increasingly many more university programmes include work placements to enhance courses, engaging with employers and increasing their graduates' employability. These placements provide students with unique opportunities, but can also leave them isolated. In addition to this, universities can find it hard to quality assure the learning that takes place within these work placements. Mobile technology and assessment offers one solution to this, with further research needed into cost effectiveness, quality assurance and IT infrastructure.

Further research

The majority of current educational m-learning research is still small scale (Ally, 2009; Kukulska-Hulme & Traxler, 2005) and this is reflected in our own health-centric study. A large scale study is needed to establish the feasibility of mobile learning as a sustainable curriculum component. Our current work concentrates on the use of PDA-based mini-CEX with the whole 5th year during their final year placements. Further areas for research to better our understanding of what and how technology can improve learning and assessment might include longitudinal studies examining student attitudes and attainment, behaviours and changes in learning styles. In scoping forward, it is important to acknowledge that PDAs in researching teaching and learning allow us access to placement learning information we have not previously had access to, opening a new opportunity for research.

Acknowledgements

Acknowledgements go to Shervanthi Homer-Vanniasinkam, University of Leeds, for development of the Mini-CEX form; Gareth Frith, University of Leeds, for procurement of devices; and Trudie Roberts, University of Leeds, for concept development.

References

Ally, M. (2009). Mobile learning: transforming the delivery of education and training. Alabama, USA: AU Press.
Axelson, C., Wardh, I., Strender, L. E. & Nilsson, G. (2007). Using medical knowledge studies on handheld computers – a qualitative study among junior doctors. Medical Teacher, 29, 6, 611–618.
Black, P. J. & Wiliam, D. (1998). Inside the black box: raising standards through classroom assessment. London: King's College London School of Education.
Collins, B. (1996). Tele-learning in a digital world: the future of distance learning. London: International Thompson Computer Press.
Dearnley, C., Haigh, J. & Fairhall, J. (2007). Using mobile technologies for assessment and learning in practice settings: a case study. Nurse Education in Practice, 8, 3, 197–204.
Department for Children Schools and Families (2007). Building on the best: final report and implementation plan of the review of the 14-19 work related learning. Retrieved February 10, 2009, from http://www.dcsf.gov.uk/14-19/documents/14-19workrelatedlearning_web.pdf
Draper, S. W., Cargill, J. & Cutts, Q. (2002). Electronically enhanced classroom interaction. Australian Journal of Educational Technology, 18, 1, 13–23.
Durning, S. J., Cation, L. J., Markert, R. J. & Pangaro, L. N. (2002). Assessing the reliability and validity of the mini-clinical evaluation exercise for internal medicine residency training. Academic Medicine, 77, 900–904.
Finlay, K., Norman, G. R., Stolberg, H., Weaver, B. & Keane, D. R. (2006). In-training evaluation using handheld computerized clinical work sampling strategies in radiology residency. Journal of Canadian Association of Radiology, 57, 232–237.
Fisher, M. & Baird, D. E. (2006). Making mLearning work: utilizing mobile technology for active exploration, collaboration, assessment, and reflection in higher education. Journal of Education Technology Systems, 35, 1, 3–30.
Frohberg, D. (2002). Communities - the MOBIlearn perspective. Workshop on ubiquitous and mobile computing for educational communities: enriching and enlarging community spaces, international conference on communities and technologies, Amsterdam, 19 September 2003. Retrieved February 10, 2009, from http://www.idi.ntnu.no/~divitini/umocec2003/Final/frohberg.pdf
Garrett, B. & Klein, G. (2008). Value of wireless personal digital assistants for practice: perceptions of advanced practice nurses. Journal of Clinical Nursing, 17, 16, 2146–2154.
Garrett, B. M. & Jackson, C. (2006). A mobile clinical E-portfolio for nursing and medical students, using wireless personal digital assistants. Nurse Education in Practice, 6, 339–346.
Glaser, B. & Strauss, A. L. (1968). Time for dying. Chicago: Aldine.
Hartnell-Young, E. & Vetere, F. (2008). A means of personalising learning: incorporating old and new literacies in the curriculum with mobile phones. Curriculum Journal, 19, 4, 283–292.
Hauer, K. E. (2000). Enhancing feedback to students using the mini-CEX (clinical evaluation exercise). Academic Medicine, 75, 534.
Holmboe, E. S., Fiebach, N. H., Galatay, L. A. & Huot, S. (2001). Effectiveness of a focused educational intervention on resident evaluations from faculty: a randomized controlled trial. Journal of General Internal Medicine, 16, 427–434.
Holmboe, E. S., Williams, F., Yepes, M., Norcini, J. J., Blank, L. L. & Huot, S. J. (2004). The mini clinical examination exercise and interactive feedback: preliminary results. Journal of General Internal Medicine, 16, 1, 100.
Kenny, R. F., Park, C., Van Neste-Kenny, J., Burton, P. A. & Meiers, J. (2009). Using mobile learning to enhance the quality of nursing practice education. In M. Ally (Ed.), Mobile learning: transforming the delivery of education and training (pp. 51–75). Alabama, USA: AU Press.
Kneebone, R. & Brenton, H. (2005). Training perioperative specialist practitioners. In A. Kukulska-Hulme & J. Traxler (Eds), Mobile learning: a handbook for educators and trainers (pp. 106–115). London: Routledge.
Kneebone, R., Bello, F., Nestel, D., Mooney, N., Codling, A., Yadollahi, F. et al (2008). Learner-centered feedback using remote assessment of clinical procedures. Medical Teacher, 30, 8, 795–801.
Kogan, J. R., Bellin, L. M. & Shea, J. A. (2002). Implementation of the mini-CEX to evaluate medical students' clinical skills. Academic Medicine, 77, 1156–1157.
Koskimaa, R., Lehtonen, M., Heinonen, U., Ruokama, H., Tisarri, V., Vahtivuori-Hänninen, S. et al (2007). A cultural approach to networked-based mobile education. International Journal of Educational Research, 46, 3–4, 204–214.
Kramer, M. (2009). Deciphering the future of learning through daily observation. Paper presentation, 3rd WLE Mobile Learning Symposium: Mobile learning Cultures across Education, Work and Leisure, 27 March, WLE Centre, IOE London, UK.
Kukulska-Hulme, A. & Pettit, A. J. (2009). Using mobile learning to enhance the quality of nursing practice education. In M. Ally (Ed.), Mobile learning: transforming the delivery of education and training (pp. 51–75). Alabama: AU Press.
Kukulska-Hulme, A. & Traxler, J. (2005). Mobile learning: a handbook for educators and trainers. London: Routledge.
McGuire, L. (2005). Assessment using new technology. Innovations in Education and Teaching International, 42, 3, 265–276.
McOwen, K. S., Kogan, J. R. & Shea, J. A. (2008). Elapsed time between teaching and evaluation: does it matter? Academic Medicine, 83, 10, S29–32.
Naismith, L., Lonsdale, P., Vavoula, G. & Sharples, M. (2004). Report 11: literature review in mobile technologies and learning. A report for NESTA Futurelab. University of Birmingham. Retrieved February 3, 2009, from http://futurelab.org.uk/research/reviews/reviews_11_and12/11_02.htm
Nicol, D. & Macfarlane-Dick, D. (2006). Formative assessment and self-regulated learning: a model and seven principles of good feedback practice. Studies in Higher Education, 31, 2, 199–218.
Norcini, J. & Burch, V. (2007). Workplace-based assessment as an educational tool: AMEE Guide No. 31. Medical Teacher, 29, 9, 855–871.
Norcini, J. J., Blank, L. L., Duffy, F. D. & Fortna, G. (2003). The mini CEX: a method for assessing clinical skills. Annals of Internal Medicine, 138, 476–481.
Preece, J. (2000). Online communities: designing usability, supporting sociability. Chichester: Wiley.
Prensky, M. (2001a). Digital natives, digital immigrants. On the Horizon (NCB University Press), 9, 5, 1–6.
Prensky, M. (2001b). Digital natives, digital immigrants, part II: do they really think differently? On the Horizon (NCB University Press), 9, 6, 1–7.
Ramanau, R., Sharpe, R. & Benfield, G. (2008). Exploring patterns of student learning technology use in their relationship to self-regulation and perceptions of learning community. Sixth International Conference of Networked Learning, Denmark, 5–6 May 2008. Retrieved February 10, 2009, from http://www.networkedlearningconference.org.uk/abstracts/PDFs/Ramanau_334-341.pdf
Sadler, R. (1998). Formative assessment: revisiting the territory. Assessment in Education: Principles, Policy & Practice, 5, 1, 77–84.
Sharples, M., Taylor, J. & Vavoula, G. (2005). Towards a theory of mobile learning. Proceedings of mLearn 2005 Conference, Cape Town. Retrieved August 5, 2008, from http://www.lsri.nottingham.ac.uk/msh/Papers/Towards%20a%20theory%20of%20mobile%20learning.pdf
Shim, S. J. & Viswanathan, V. (2007). User assessment of personal digital assistants used in pharmaceutical detailing: system features, usefulness and ease of use. Journal of Computer Information Systems, 48, 1, 14–21.
Strauss, A. & Corbin, J. (1990). Basics of qualitative research: grounded theory procedures and techniques. California: Sage.
Tapscott, D. (1998). Growing up digital: the rise of the net generation. New York: McGraw-Hill Companies.
Torre, D. M., Simpson, D. E., Elnicki, D. M., Sebastian, J. L. & Holmboe, E. S. (2007). Feasibility, reliability and user satisfaction with a PDA-based mini-CEX to evaluate the clinical skills of third-year medical students. Teaching and Learning in Medicine, 19, 3, 271–277.
Triantafillou, E., Georgiadou, E. & Economides, A. A. (2008). The design and evaluation of a computerized adaptive test on mobile devices. Computers & Education, 50, 4, 1319–1330.
Vavoula, G., Pachler, N. & Kukulska-Hulme, A. (2009). Researching mobile learning: frameworks, tools and research designs. Oxford, UK: Peter Lang.
Wenger, E. (1999). Communities of practice: learning, meaning, and identity. Cambridge, UK: Cambridge University Press.

Appendix

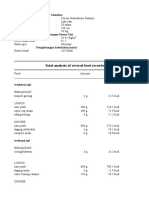

Figure A1: Example of Mini-CEX Form used on PDA

All questions open ended.
How did you find using the PDA device?
Did you use the device for anything other than the assessments? If yes, what for?
How usable was the assessment format?
How did you find using Mini-CEX?
What were your experiences of bringing a device into a clinical setting? How did others react to it?
Did you have to give support to the assessors in their use of the device and assessments? If yes, how did you feel about doing this?
How did you rate the initial training?
In light of your experience, how necessary do you think training is?
Were there any differences between your placement with the device and without the device?
How would you feel about having the device if you were a qualified doctor?

Figure A2: Questionnaire extract

| Date and time | Patient problem | Level of difficulty | Competency type |
|---|---|---|---|
| 04/09/2007 17:03:22 | haemoptysis | Moderate | History |
| 05/09/2007 19:14:26 | l sided weakness | Moderate | History |
| 06/09/2007 10:58:21 | Cholangitis, differential of epigastric pain/jaundice. | Moderate | History |
| 07/09/2007 17:14:00 | Management of bay of 4 patients throughout working day. | High | History |
| 19/09/2007 16:49:52 | chest pain | Moderate | History |
| 22/09/2007 12:15:24 | renal failure | Moderate | History |

Overall competence scores, in order: 6, 7, 7, 7, 8, 9.

Other rating columns: organization (6), professionalism, communication, technical skill, practical skills, physical exam skills, clinical judgement (0), clinical reasoning.

Assessor comments, in order: Good systematic history and presentation. Needs more practice. Very good comprehensive summary and good knowledge base. Needs to build on confidence. Acted as competent house officer but needs to have self confidence. Difficult patient, was patient and thorough. Good communication skills, polite. Good clinical knowledge and formulated good management plan. Excellent, thoughtful approach, liked by me and patient.

Figure A3: Extract of Mini CEX data for one student in study showing incremental improvement in scores and performance

© 2009 The Authors. British Journal of Educational Technology © 2009 Becta.
