Combining Support Vector Machines: 6.1. Introduction and Motivations

Uploaded by Summrina Kanwal

Chapter 6

Combining Support Vector Machines

This chapter extends the GP-based combination technique to optimize the performance of individual SVM classifiers. Component SVM classifiers are trained over a range of decision thresholds, and optimal composite classifiers (OCCs) are developed by genetic combination of these SVM classifiers. Various experiments explore the effectiveness and discrimination power of the GP-combined decision space, which is more informative and discriminant than that of the individual components. The OCC performs better than individual SVM classifiers optimized using grid search. The results obtained in this chapter demonstrate that the composite SVM classifiers are more effective, robust and generalized.

The chapter is organized as follows. Section 6.1 gives the introduction and motivations. Section 6.2 describes the goals of the chapter. Section 6.3 explains the theoretical background of SVMs. Section 6.4 presents the proposed methodology and the architecture of the classification system, including the method of combining SVM classifiers. Implementation details are given in Section 6.5. Results and discussion are presented in Section 6.6. Finally, conclusions are given.

6.1. Introduction and Motivations

Research on classical pattern recognition models has been challenged by the recent development of SVM-based approaches. SVMs not only threaten to make traditional pattern recognition models obsolete, but also benefit from research on classifier ensembles [105]. The SVM-based discriminant approach is preferred in high-dimensional image spaces. SVM classification models can be developed using different kernel functions, and they are selected for their high discrimination power and low generalization error on new test examples. Optimization of SVM models is an active area of research. Two issues are usually addressed in order to optimize SVM models: the selection of a suitable kernel function and the selection of its associated parameters (model selection) [107]-[113]. In optimal kernel selection, SVMs are first tested on various kernels for improved classification performance and minimum training error [106]. In kernel model selection, an iterative search is mostly applied to optimize the parameters within the range $[10^{-15}, 10^{15}]$ [110]. However, these exhaustive search methods are based on trial and error, and they become intractable when the number of parameters exceeds two [107]. Various researchers have offered application-dependent solutions. However, no intelligent method has been developed to optimize SVM models. Therefore, research into a high-performance, generalized method is required.

Currently, the combination of SVM classifiers is attracting considerable attention. Due to the lack of general rules for combining classifiers, problem-specific solutions have been offered. In a recent work, Moguerza et al. [115] combined SVM classifiers. However, they used a linear combination of kernels to perform a functional (matrix) combination of kernels. Their combination uses class conditional probabilities and nearest neighbor techniques. Previously, GP has been used to combine different classification models such as Artificial Neural Networks [22]-[23], Decision Trees [101] and Naive Bayes to produce a composite classifier. There is therefore a good chance that a GP-based combination of SVM classifiers will perform better. In this chapter, various GP simulations are carried out under different feature sets in order to show the superiority of OCC.

6.2. Chapter Goals

The main goal of this chapter is the optimization of SVM models. This work tries to show that the GP-based classifier combination scheme is more informative and generalized. This chapter seeks to address the following research questions:

1. Can SVM component classifiers be combined through GP search to produce a higher-performance composite classifier?
2. Is it possible to achieve high accuracy with a smaller feature set using GP?
3. Is it possible to analyze the accuracy and complexity involved in a composite classifier?

In this chapter, we will try to address these research questions.

6.3. SVM Classifiers

Conventional neural network methods have demonstrated difficulties in finding a good generalization performance [116]. Because of these difficulties, in the last few years the scientific community has been experimenting with learning through SVMs. This learning method is more promising and has started receiving more attention. The support vector machine was developed for binary classification problems. SVMs are margin-based classifiers with good generalization capabilities. They have been used widely in various classification, regression and image processing tasks [2], [106]. SVMs were developed to solve classification problems, and initial work focused on optical character recognition applications [2]. More recent applications and extensions of SVMs include isolated handwritten digit recognition, object recognition, speaker identification, face detection in images and text categorization. All of these are applications of SVMs for classification. SVMs have also been applied to a number of other types of problems, including regression estimation and novelty detection.

Support Vector Machines (SVMs) were introduced by Vapnik in [2]. SVMs are based on the Structural Risk Minimization (SRM) principle from statistical learning theory. SRM minimizes a bound on the test error. In contrast, the Empirical Risk Minimization (ERM) principle is used by neural networks to minimize the error on the training data. An SVM performs pattern classification between two classes by finding a decision surface that has maximum distance to the closest points in the training set. These closest points are called support vectors. An SVM views the classification problem as a quadratic optimization problem and avoids the curse of dimensionality by placing an upper bound on the margin between classes. The training principle of an SVM is to find the optimal linear hyperplane such that the classification error for new test samples is minimized. For linearly separable data, such a hyperplane is determined by maximizing the distance between the support vectors. Consider $n$ training pairs $(x_i, y_i)$, where $x_i \in \mathbb{R}^{N}$ and $y_i \in \{-1, 1\}$. The decision surface is defined as:

$$f(x) = \sum_{i=1}^{n} \alpha_i y_i x_i^{T} x + b \tag{6.1}$$

where the coefficient $\alpha_i \geq 0$ is the Lagrange multiplier in an optimization problem. A vector $x_i$ that corresponds to $\alpha_i > 0$ is called a support vector. $f(x)$ is independent of the dimension of the feature space, and the sign of $f(x)$ gives the class membership of $x$. In the case of a linear SVM, the kernel function is simply the dot product of two points in the input space. In order to find an optimal hyperplane for non-separable patterns, the solution of the following optimization problem is sought.

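$$\Phi(w, \xi) = \frac{1}{2} w^{T} w + C \sum_{i=1}^{n} \xi_i \tag{6.2}$$

subject to the conditions $y_i \left( w^{T} \phi(x_i) + b \right) \geq 1 - \xi_i$ and $\xi_i \geq 0$, where $C > 0$ is the penalty parameter of the error term $\sum_{i=1}^{n} \xi_i$ and $\phi(x)$ is a nonlinear mapping.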
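The role of the slack variables in Eq. (6.2) can be illustrated with a minimal sketch. It assumes the identity mapping $\phi(x) = x$ and a fixed, hand-picked $w$ and $b$ (it does not solve the optimization problem); the function name and the toy data are hypothetical. For a given $w$ and $b$, the smallest slack that satisfies both constraints is $\xi_i = \max(0, 1 - y_i(w^{T} x_i + b))$.

```python
def soft_margin_objective(w, b, X, y, C):
    """Evaluate the cost of Eq. (6.2) for fixed w and b, assuming phi(x) = x."""
    slacks = []
    for x_i, y_i in zip(X, y):
        score = sum(w_k * x_k for w_k, x_k in zip(w, x_i)) + b
        # Smallest slack satisfying y_i * (w.x_i + b) >= 1 - xi_i and xi_i >= 0.
        slacks.append(max(0.0, 1.0 - y_i * score))
    objective = 0.5 * sum(w_k * w_k for w_k in w) + C * sum(slacks)
    return objective, slacks


# Toy data (hypothetical): the second point lies inside the margin.
X = [(2.0, 0.0), (0.5, 0.0), (-2.0, 0.0)]
y = [1, 1, -1]
objective, slacks = soft_margin_objective(w=(1.0, 0.0), b=0.0, X=X, y=y, C=1.0)
print(objective, slacks)
```

Only the point inside the margin receives a non-zero slack, so a larger $C$ penalizes that violation more heavily relative to the margin term $\frac{1}{2} w^{T} w$.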

The weight vector $w$ minimizes the cost function term $w^{T} w$. For nonlinear data, we have to map the data from the low dimension $N$ to a higher dimension $M$ through $\phi(x)$ such that $\phi: \mathbb{R}^{N} \rightarrow F^{M}$, $M \gg N$. Each point $\phi(x)$ in the new space is subject to Mercer's theorem. Kernel functions are defined as $K(x_i, x_j) = \phi(x_i) \cdot \phi(x_j)$. We can construct the nonlinear decision surface $f(x)$ in terms of $\alpha_i > 0$ and the kernel function $K(x_i, x_j)$ as:

$$f(x) = \sum_{i=1}^{N_S} \alpha_i y_i K(x_i, x) + b = \sum_{i=1}^{N_S} \alpha_i y_i \, \phi(x_i) \cdot \phi(x) + b \tag{6.3}$$

where $N_S$ is the number of support vectors. In SVMs, there are two types of kernel functions: local (Gaussian) kernels and global (linear, polynomial, sigmoidal) kernels. The measurement of local kernels is based on a distance function, while the performance of global kernels depends on the dot product of data samples. The linear, polynomial and radial basis function kernels are mathematically defined as:

$K(x_i, x_j) = x_i^{T} x_j$ (linear kernel with parameter $C$)

$K(x_i, x_j) = \exp\left(-\gamma \lVert x_i - x_j \rVert^{2}\right)$ (RBF kernel with Gaussian kernel parameters $\gamma$, $C$)

$K(x_i, x_j) = \left[\gamma \langle x_i, x_j \rangle + r\right]^{d}$ (polynomial kernel with parameters $\gamma$, $r$, $d$ and $C$)

Linear, RBF and polynomial kernels have one, two, and four adjustable parameters respectively. All these kernels share one common cost parameter $C$, which represents the penalty for the constraint violation of data points occurring on the wrong side of the SVM boundary. The parameter $\gamma$ in the RBF kernel determines the width of the Gaussian functions. There is no general rule for selecting the grid range and step size used to obtain optimal values of these parameters. In the present work, the grid search method is used to select the optimal parameters of the kernel functions.
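As an illustration of the three kernels and of the kernel decision surface in Eq. (6.3), the following sketch uses plain Python. The symbol names `gamma`, `r` and `d` follow the usual convention for these kernels and are an assumption about the notation lost from the formulas; the support vectors and multipliers in the example are invented, not trained values.

```python
import math


def linear_kernel(x, z):
    # Dot product of two points in the input space.
    return sum(a * b for a, b in zip(x, z))


def rbf_kernel(x, z, gamma):
    # Local kernel: depends only on the squared distance between x and z.
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * squared_distance)


def poly_kernel(x, z, gamma, r, d):
    # Global kernel: depends on the dot product of x and z.
    return (gamma * linear_kernel(x, z) + r) ** d


def decision_function(x, support_vectors, alphas, labels, b, kernel):
    # Eq. (6.3): weighted sum of kernel values over the support vectors.
    total = sum(a * y * kernel(sv, x)
                for sv, a, y in zip(support_vectors, alphas, labels))
    return total + b


x, z = (1.0, 2.0), (3.0, 4.0)
k_lin = linear_kernel(x, z)
k_rbf = rbf_kernel(x, z, gamma=0.5)
k_poly = poly_kernel(x, z, gamma=1.0, r=0.0, d=3)

# Hypothetical support vectors and multipliers, for illustration only.
f_value = decision_function((2.0, 0.0), [(1.0, 0.0), (-1.0, 0.0)],
                            [0.5, 0.5], [1, -1], 0.0, linear_kernel)
```

The sign of `f_value` gives the predicted class, and swapping `linear_kernel` for a function built on `rbf_kernel` or `poly_kernel` changes the decision surface without changing the rest of the code.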

6.4. Proposed Methodology: Combining SVM Classifiers

This chapter attempt to combine different SVM classifiers developed through kernel functions. This technique automatically tries to select optimal kernel functions and model selection to achieve high performance composite classifier. First component SVM classifiers are constructed through the learning of SVMs by different kernel functions. Then, the predicted information of these SVM classifiers is used to develop an OCC. To study the performance of classifiers, gender classification problem is taken as a test case. ROC curve is chosen to analyze the performance of classifiers [43]. AUCH of ROC curve is used as a fitness function during GP evolution process. The scheme of combination of classifiers is carried out using the concept of stacking the predictions of component SVM classifiers to form a new feature space [94], [95]. Suppose we have m kernel functions { K f 1 , K f 2 ,L , K fm } , to represent different SVM learning algorithms. The dataset S with examples si = ( xi , ci ) is partitioned into

1 2 non-overlapping sets, such that {St Stst S tst } = . First step is the construction of a set of m

component classifiers C1 , C2 ,L , Cm . They are obtained by training kernel functions at training data set St i.e. C j = K fj ( St ) , j = 1, 2,L , m . Second step is the construction of a set of

1 predictions i.e. c j = C j ( xi ), at xi Stst

j = 1, 2, L , m . GP learning process is based on

i i i

the training of new meta-space of the form of ((c1 , c2 ,L , cm ), ci ), instead with examples

1 of ( xi , ci ) Stst . GP combine the predictions of component classifiers (c1 , c2 ,L , cm ) , to obtain

an optimal classifier. These predictions of component classifiers are used as unary functions in GP

CHAPTER 6. COMBINING SUPPORT VECTOR MACHINES

81

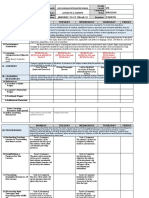

tree to represent combination of SVM classifiers. These unary functions are combined during crossover and mutation operations to develop OCC. The main modules in our scheme are shown in Figure 6.1 with double dashed boxes. A brief introduction of each module includes the formation and normalization of database; feature selection; classifiers performance evaluation criteria and combination of SVM classifiers as follows: 6.4.1. Normalization of Face Database Various databases are combined to form a generalized unbiased database for binary classification task. Different face images are collected from the standard databases of ORL, YALE, CVL and Stanford medical student. In this way, a general database is formed. Images are taken under various conditions of illumination with different head orientations. In the normalization stage, CSU Face Identification Evaluation System [118] is used to convert all images in the uniform state. Images within the databases are of different sizes taken under various conditions. CSU system includes standardized image pre-processing software to study the unbiased performance of classifiers. In order to convert each image of size 103 by 150 into a normalized form, this system performs various image preprocessing tasks. First, human faces are aligned according to the chosen eye coordinates. Then, an elliptical mask is formed and images are cropped such that the face from forehead to chin and cheek to cheek is visible. At last, the histogram of the unmasked part of the image is equalized. A sample of a few images, their normalized form, as well as the block diagram for processing images is shown in Figure 6.2 (a )-(b). 6.4.2. Feature Selection by using SIMBA Feature selection is the task of reducing the dimension by selecting a small subset of the features, which contains the needed information. Feature selection reduces the feature space, which in turn speeds up the classification process. 
Such features make the data more decisive because it is easier to visualize and compute low-dimensional data than high-dimensional data. There exist many methods for feature selection. The feature space may be reduced by selecting the most distinguishing features through minimization of the feature set size [123]. A classification rule can assign some confidence to its prediction. This confidence is called the margin. Support Vector Machines are an example of an algorithm in which the margin plays a significant role. Using large margins as the criterion for feature selection yields efficient feature selection algorithms: the margin is used to evaluate the fitness of sets of features for the underlying classification task, and different search methods are used to find a good set of features based on the margin criterion. We have used the margin-based Simba algorithm [27] to select the optimal image pixels. Simba assigns a weight to each feature; in order to analyze the performance of classifiers for various feature sets, different threshold values are applied to this weight vector. After picking the desired image pixels, three separate datasets are formed: training data1, testing data1 and testing data2. For the database S, we have selected 300 male and 300 female images. Thus, each part of this dataset contains 100 male and 100 female images. This data is then used in various training and testing stages.
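The partition described above can be sketched as follows. The text does not say how images were assigned to the three parts, so the random shuffling, the seed, the function name and the image identifiers are all assumptions made for illustration.

```python
import random


def split_three_ways(males, females, per_class=100, seed=0):
    """Split images into three non-overlapping, class-balanced parts."""
    rng = random.Random(seed)
    males, females = list(males), list(females)
    rng.shuffle(males)    # random assignment is an assumption, not the author's stated method
    rng.shuffle(females)
    names = ["training_data1", "testing_data1", "testing_data2"]
    parts = {}
    for k, name in enumerate(names):
        lo, hi = k * per_class, (k + 1) * per_class
        parts[name] = ([(img, "male") for img in males[lo:hi]] +
                       [(img, "female") for img in females[lo:hi]])
    return parts


# Hypothetical image identifiers standing in for the 300 + 300 face images.
males = [f"m{i:03d}" for i in range(300)]
females = [f"f{i:03d}" for i in range(300)]
parts = split_three_ways(males, females)
```

Keeping the three parts disjoint matters here because training data1 fits the SVMs, testing data1 supplies the stacked predictions for GP, and testing data2 is held back for the final OCC evaluation.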

[Figure 6.1 flowchart: input facial image database; facial image normalization; feature selection using the Simba algorithm; data formation (training data1 for the SVM kernels); linear, polynomial and RBF SVMs; stacking of the predictions on testing data1; GP module (initial population generation, combining classifiers by GP tree, testing of the GP-evolved numerical classifiers, AUCH calculation module returning fitness values, fitness evaluation, termination check, saving of the best OCC); OCC testing on testing data2; output performance.]

Figure 6.1: Architecture of proposed classification system

6.4.3. Classifiers Performance Criteria

In the GP evolution process, the choice of fitness function is very important. This function strongly affects the performance of the evolved GP programs and must be chosen so that the resulting combination of classifiers is as accurate as possible. To obtain an ROC curve, each classifier is tuned by changing its sensitivity level (threshold). When the sensitivity is at the lowest level, the classifier neither produces false alarms nor detects positive cases. When the sensitivity is increased, the classifier detects more positive examples but may also start generating false alarms. Ultimately, the sensitivity may become so high that the classifier always claims each case is positive.
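The threshold sweep described above, together with the AUCH measure used as the fitness function, can be sketched in a few lines. This is an illustrative implementation under stated assumptions, not the AUCH module used in the experiments: the function names are hypothetical, positives are labelled 1 and negatives 0, and the scores are invented.

```python
def roc_points(scores, labels):
    """Sweep the decision threshold over all observed scores."""
    pos = sum(labels)
    neg = len(labels) - pos
    points = {(0.0, 0.0), (1.0, 1.0)}
    for t in set(scores):
        tp = sum(1 for s, l in zip(scores, labels) if s >= t and l == 1)
        fp = sum(1 for s, l in zip(scores, labels) if s >= t and l == 0)
        points.add((fp / neg, tp / pos))   # (FPR, TPR) at threshold t
    return sorted(points)


def cross(o, a, b):
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def upper_convex_hull(points):
    """Keep only the ROC points lying on the upper convex hull."""
    hull = []
    for p in sorted(points):
        # Drop the last point while it lies on or below the line to p.
        while len(hull) >= 2 and cross(hull[-2], hull[-1], p) >= 0:
            hull.pop()
        hull.append(p)
    return hull


def auch(scores, labels):
    """Area under the convex hull of the ROC curve (trapezoidal rule)."""
    hull = upper_convex_hull(roc_points(scores, labels))
    return sum((x2 - x1) * (y1 + y2) / 2.0
               for (x1, y1), (x2, y2) in zip(hull, hull[1:]))


# Hypothetical classifier scores for three positive and three negative cases.
scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4]
labels = [1, 1, 0, 1, 0, 0]
area = auch(scores, labels)
```

Because the hull discards ROC points that lie below a line joining two better operating points, the AUCH is never smaller than the ordinary area under the ROC curve computed from the same points.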

Figure 6.2 (a): Face alignment system with stages (head search using multiscale, feature search algorithm, scaling, warp, masking)

Figure 6.2 (b): A few input faces and processed faces

6.4.4. GP based Combination of SVM Classifiers

In order to design an optimal composite classifier, the first step is to train SVMs with different kernel functions on the training data and to extract their predictions on a separate dataset. The predictions of the different SVM models (linear SVM, polynomial SVM and radial basis function SVM) are stacked in the form of vectors to form new meta-data for GP learning. The role of the SVMs in the first layer of the combination scheme is similar to that of the first layer of a neural network. The predictions of these individual SVM classifiers are used as special unary functions in a GP tree, and a variable terminal T is used as a threshold. Figure 6.3 shows the configuration of a combination of SVM classifiers. The combination of classifiers is performed in a homogeneous or heterogeneous way. In homogeneous combination, SVM classifiers trained on different feature sets are combined through GP. The composite classifier OCC is then a function of any one of the SVM classifiers $C_L$, $C_P$, $C_R$. In heterogeneous combination, two or more classifiers trained on the same or different feature sets are combined. The composite classifier is then a function of two or more SVM classifiers, i.e. $f(C_L, C_P)$, $f(C_P, C_R)$ or $f(C_L, C_P, C_R)$. The OCC might have a better chance of producing a higher AUCH of the ROC curve than its component SVM classifiers.

GP Module

In the context of classification, the GP-based technique comes under the category of stochastic methods, in which randomness plays a crucial role in searching and learning. We represent a classifier as a candidate solution with a tree-like data structure. Initially, a population of individuals is created randomly as a possible solution space. Next, the score of each classifier is obtained for a certain classification task. In this way, the fitness of each classifier is calculated. Survival of the fittest is carried out by retaining the best classifiers. The rest are deleted and replaced by their offspring. The retained classifiers and their offspring are used for the next generation. During genetic evolution, each new generation has a slightly higher average score. In this way, the solution space is refined and converges to the optimal solution [13]. We have used the GPLAB software [119] to develop the OCC. All the necessary settings are given in Table 6.1. In order to represent possible solutions in the form of a complex numerical function, suitable functions, terminals, and fitness criteria are defined. The different elements of our GP module are as follows.

GP function set: The GP function set is a collection of various mathematical functions available in the GP module. In the GP simulations, we have used simple functions, including four binary floating-point arithmetic operators (+, -, *, and protected division), LOG, EXP, SIN and COS. The predictions of the three component SVM classifiers (L, P and R) are stacked and used as unary functions whose input is the threshold T. The method of obtaining these predictions is described in Section 6.4. Our function set also contains application-specific logical statements, e.g. greater than (gt) and less than (lt).

GP terminals: To create a population of candidate OCCs, we consider an OCC as a class mapping function that consists of independent variables and constants. The threshold T is taken as a variable terminal. Randomly generated numbers in the range [0, 1] are used as constant terminals in the GP tree. In this way, GP is allowed to combine the decision spaces of different SVM classifiers.
Population initialization method: The initial population is generated using the ramped half-and-half method. In this method, an equal number of components is initialized for each depth, with the depths ranging from two to the initial tree depth value. For each tree depth level, half of the components are initialized using the Grow method and the other half using the Full method.

Fitness evaluation function: We have used the AUCH of the ROC curve as the fitness function. A fitness function grades each component in the population. It provides feedback to the GP module about the fitness of components. Figure 6.1 shows the use of the fitness function as feedback. Every component in the population is evaluated under this criterion.

GP operators used: We have used replication, mutation and crossover operators for producing a new GP generation. Replication is the copying of a component into the next generation without any change. In mutation, a small part of a component's genome is changed. This small random change often brings diversity into the solution space. Crossover creates an offspring by exchanging genetic material between two parent components. It tries to mimic recombination and sexual reproduction. Crossover helps in converging onto an optimal solution. The GPLAB software automatically adjusts the ratio of crossover and mutation.

Termination criterion: The simulation is stopped if one of the two conditions is encountered:

1. The fitness score exceeds 0.9999.
2. The number of generations reaches the preset maximum number of generations.

Table 6.1: GP parameter settings for combining SVM classifiers

| Parameter | Setting |
| --- | --- |
| Objective | To develop an OCC with maximum AUCH |
| Function Set | +, -, *, protected division, gt, le, log, abs, sin and cos |
| Special Function | Predictions of SVM classifiers (L, P, and R) are used as unary functions in the GP tree |
| Terminal Set | Threshold T and some randomly generated constants in the range [0, 1] |
| Fitness | AUCH of ROC curve |
| Expected no. of offspring | rank85 |
| Selection | Generational |
| Wrapper | Positive if >= 0, else Negative |
| Population Size | 300 |
| Initial Tree Depth Limit | 6 |
| Initial population | Ramped half and half |
| Operator prob. Type | Variable |
| Sampling | Tournament |
| Survival mechanism | Keep best |
| Real max level | 28 |
| Termination | Generation 80 |
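The ramped half-and-half initialization with the population size (300) and initial depth limit (6) of Table 6.1 can be sketched as follows. This is a simplified illustration and not GPLAB's implementation: the nested-tuple tree encoding, the 0.3 early-stop probability of the Grow method, the reduced function set and the convention that a lone terminal has depth 1 are all assumptions.

```python
import random

BINARY = ["+", "-", "*", "/"]                 # "/" stands for protected division
UNARY = ["log", "sin", "cos", "L", "P", "R"]  # L, P, R: SVM prediction functions


def random_terminal(rng):
    # Terminal set: the threshold variable T or a constant in [0, 1].
    return "T" if rng.random() < 0.5 else round(rng.random(), 3)


def build_tree(depth, full, rng, root=True):
    """Build a nested-tuple tree with at most `depth` levels."""
    # Grow may stop a branch early; Full never does. The root is always a function.
    stop_early = (not full) and (not root) and rng.random() < 0.3
    if depth == 1 or stop_early:
        return random_terminal(rng)
    if rng.random() < 0.5:
        return (rng.choice(UNARY), build_tree(depth - 1, full, rng, False))
    return (rng.choice(BINARY),
            build_tree(depth - 1, full, rng, False),
            build_tree(depth - 1, full, rng, False))


def tree_depth(tree):
    if not isinstance(tree, tuple):
        return 1
    return 1 + max(tree_depth(child) for child in tree[1:])


def ramped_half_and_half(pop_size, max_depth, seed=0):
    rng = random.Random(seed)
    depths = list(range(2, max_depth + 1))
    population = []
    for i in range(pop_size):
        depth = depths[i % len(depths)]          # equal share for each depth
        full = (i // len(depths)) % 2 == 0       # alternate Full and Grow
        population.append(build_tree(depth, full, rng))
    return population


population = ramped_half_and_half(pop_size=300, max_depth=6)
```

With five depth levels (2 to 6) and 300 individuals, each depth receives 60 trees, 30 built with Full and 30 with Grow, which gives the initial population a mix of tree shapes and sizes.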

Testing Phase

At the end of a GP run, the best expression of a GP individual is obtained. Its performance is then evaluated using testing data2.

Figure 6.3: A combination of SVM classifiers represented in GP trees
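To make the idea of a GP tree over stacked SVM predictions concrete, the sketch below evaluates one hand-written expression on two meta-examples. How the unary functions L, P and R act on the threshold T is not spelled out in the text, so the rule used here (return 1 if the stored SVM output is at least T, otherwise 0) is an assumption, as are the protected-division convention, the example tree and the scores. The wrapper follows Table 6.1.

```python
def protected_div(a, b):
    # Assumption: return the numerator when the denominator is zero.
    return a if b == 0 else a / b


ARITHMETIC = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
    "/": protected_div,
}


def evaluate(tree, scores, T):
    """Evaluate a nested-tuple GP tree for one stacked example.

    `scores` maps "L", "P", "R" to that example's SVM outputs.
    """
    if tree == "T":
        return T
    if not isinstance(tree, tuple):
        return float(tree)                      # constant terminal
    op = tree[0]
    args = [evaluate(child, scores, T) for child in tree[1:]]
    if op in scores:
        # Assumed behaviour of the unary prediction functions L, P, R.
        return 1.0 if scores[op] >= args[0] else 0.0
    return ARITHMETIC[op](*args)


def wrapper(value):
    # Table 6.1: Positive if >= 0, else Negative.
    return "Positive" if value >= 0 else "Negative"


# Hypothetical stacked predictions (meta-data) with their true classes.
meta_data = [
    ({"L": 0.7, "P": 0.2, "R": 0.6}, "Positive"),
    ({"L": 0.7, "P": 0.4, "R": 0.3}, "Negative"),
]

# Hand-written example tree: L(T) * R(T) - 0.5
occ = ("-", ("*", ("L", "T"), ("R", "T")), 0.5)
predictions = [wrapper(evaluate(occ, scores, T=0.5)) for scores, _ in meta_data]
```

This particular tree outputs Positive only when both the linear and the RBF classifier exceed the threshold; GP searches over many such expressions and keeps the one with the highest AUCH.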

6.5. Implementation Details

The experimental results are obtained using a Pentium IV machine (1.6 GHz, 256 MB RAM). In the first study, the performance of OCC is compared with that of individual SVM classifiers whose kernel parameters are fixed a priori, i.e. cost parameter $C = 1$, kernel parameter $\gamma = 1$, coefficient $r = 0$ and degree $d = 3$. In the second study, the optimal values of these parameters are found using grid search. A suitable grid range and step size are estimated for each SVM kernel. PolySVM has four adjustable parameters: $d$, $r$, $\gamma$ and $C$. However, to simplify the problem, the values of the degree and coefficient are fixed at $d = 3$, $r = 1$. The optimum values of $\gamma$ and $C$ are then selected. For PolySVM, a grid range of $C = [2^{-4}, 2^{5}]$ with step size $\Delta C = 0.2$ and $\gamma = [2^{-7}, 2^{2}]$ with step size $\Delta\gamma = 0.2$ are used. For RbfSVM, the grid range and step size of $C$ and $\gamma$ are selected as $C = [2^{-15}, 2^{10}]$, $\Delta C = 0.2$ and $\gamma = [2^{-10}, 2^{15}]$, $\Delta\gamma = 0.2$. The optimal value of the $C$ parameter for the linear kernel is obtained with a grid range of $C = [2^{-1}, 2^{5}]$ and $\Delta C = 0.2$. Efforts have also been made to minimize the problem of over-fitting in the training of both the individual SVM and the composite classification models. An appropriate size of training and testing data is selected in the holdout method. The tuning parameters of GP evolution are also selected carefully.
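A grid search of this kind can be sketched as below. Several points are assumptions rather than details confirmed by the text: the grid bounds are read as powers of two (for PolySVM, $2^{-4}$ to $2^{5}$ for $C$ and $2^{-7}$ to $2^{2}$ for $\gamma$), the step of 0.2 is applied to the exponent, and `toy_score` is a made-up stand-in for training an SVM and measuring its AUCH.

```python
import math


def log2_grid(lo_exp, hi_exp, step=0.2):
    """Values 2**e for e = lo_exp, lo_exp + step, ..., hi_exp."""
    n = int(round((hi_exp - lo_exp) / step))
    return [2.0 ** (lo_exp + k * step) for k in range(n + 1)]


def grid_search(score_fn, c_values, gamma_values):
    """Exhaustively evaluate every (C, gamma) pair and keep the best."""
    best = None
    for c in c_values:
        for g in gamma_values:
            s = score_fn(c, g)
            if best is None or s > best[0]:
                best = (s, c, g)
    return best


def toy_score(c, g):
    # Hypothetical score surface peaking at C = 2 and gamma = 0.25.
    return -((math.log2(c) - 1.0) ** 2 + (math.log2(g) + 2.0) ** 2)


c_values = log2_grid(-4, 5)        # assumed PolySVM range for C
gamma_values = log2_grid(-7, 2)    # assumed PolySVM range for gamma
best_score, best_c, best_gamma = grid_search(toy_score, c_values, gamma_values)
```

Each grid here holds 46 values, so a two-parameter search already needs 2116 evaluations; adding $r$ and $d$ to the search would multiply that further, which is why the text fixes them in advance.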

6.6. Results and Discussion

The following experiments have been conducted to study the behavior of OCC. A comparative performance analysis of OCC against the individual SVM classifiers is also carried out.

Analysis of accuracy versus complexity

We investigate the behavior of the best GP individuals developed for the 1000-feature set. Figure 6.4 (a) shows how the fitness of the best individual increases in one GP run. Figures 6.4 (b-c) show the complexity of the best individuals expressed in terms of tree depth and number of nodes. It is observed that, in the search for better predictions, the size of the best GP individual increases after each generation. As a result, the best genome's total number of nodes increases and its average tree depth becomes very large. Figure 6.4 (d) shows the strongly increasing behavior of the median, average, and maximum fitness of the best individual. During crossover and mutation operations, more and more constructive blocks build up that minimize the destruction of useful building blocks. As a result, the size of the GP program grows after each generation. This might be due to the bloating phenomenon of GP evolution. Due to this phenomenon, many branches within the best GP program generally do not contribute to improving its performance. However, such branches increase the size of the GP program.
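The two complexity measures used here, number of nodes and tree depth, can be computed with a short sketch. The nested-tuple tree encoding and the two example trees are hypothetical; the second tree carries a branch multiplied by zero to mimic the kind of non-contributing code that bloat produces.

```python
def node_count(tree):
    """Total number of function and terminal nodes in a nested-tuple tree."""
    if not isinstance(tree, tuple):
        return 1
    return 1 + sum(node_count(child) for child in tree[1:])


def tree_depth(tree):
    """Number of levels, counting a lone terminal as depth 1 (a convention)."""
    if not isinstance(tree, tuple):
        return 1
    return 1 + max(tree_depth(child) for child in tree[1:])


# L(T) + R(T)
compact = ("+", ("L", "T"), ("R", "T"))

# Same output, plus a branch multiplied by zero that never changes the result.
bloated = ("+", ("L", "T"),
           ("+", ("R", "T"), ("*", 0.0, ("sin", ("P", "T")))))

sizes = {
    "compact": (node_count(compact), tree_depth(compact)),
    "bloated": (node_count(bloated), tree_depth(bloated)),
}
```

Both trees compute the same value for every input, yet the second has more than twice as many nodes, which is the pattern the node and depth curves in Figure 6.4 (b)-(c) are tracking.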

Figure 6.4 (a): The maximum fitness of the best individuals in one GP run ($\sigma = 0.007$). [Plot: maximum fitness and standard deviation versus generations.]

Figure 6.4 (b)-(c): (Left) No. of nodes versus generations; (Right) Tree depth versus generations of the best individuals in one GP run.

Figure 6.4 (d): The behavior of maximum, median, and average fitness of the best individuals. [Plot: maximum, median and average fitness versus generations.]

Polynomial SVM for different feature sets

In order to study the effect of increasing the size of the feature set on the performance of individual SVM classifiers, PolySVM is selected as a test case. Its performance in terms of ROC curves for different feature sets is shown in Figure 6.5. This figure shows the improvement in the ROC curves as the feature set grows, e.g. PolySVM-10 (trained on 10 features) has AUCH = 0.8831 and PolySVM-1000 has AUCH = 0.9575. The AUCH of the ROC curve summarizes the overall performance of the classifier, and a high AUCH does not require high TPR values at all FPR values. In practice, high TPR values at low FPR are more crucial for significant overall performance. For example, PolySVM-100 has a lower overall AUCH than PolySVM-1000, even though it has higher TPR values for FPR $\geq$ 0.4.

[Figure 6.5 plot: TPR versus FPR. Legend: polyFS10, AUCH = 0.8831; polyFS100, AUCH = 0.9325; polyFS500, AUCH = 0.9451; polyFS1000, AUCH = 0.9575.]

Figure 6.5: ROC curves of PolySVM. (For simplicity, in this figure and in the following figures, only those points that lie on the convex hull of the ROC curve are shown.)

Performance comparison of OCC with individual SVM classifiers

This experiment is carried out to analyze the performance of OCC and the SVM classifiers. Figures 6.6 (a-d) show the ROC curves obtained for various feature sets. These figures demonstrate that, as more information is provided, TPR increases while FPR decreases. Our OCC has outperformed the individual SVM classifiers. This improvement in the ROC curves is due to low values of FPR and high values of TPR. These values shift the points towards the upper left corner and thus provide better decisions. Such behavior is desirable in applications where the cost of false positives is high. For example, a weak patient cannot afford a high FPR, since even minor damage to healthy tissues may be a matter of life and death. On the other hand, if attempts are made to reduce FPR by simply adjusting the decision threshold T, the risk of false negative cases might rise in a poor prediction model. Such prediction models, specifically in medical applications, might cause a high misclassification cost in fatal diseases such as lung, liver, and breast cancer.

The bar chart in Figure 6.7 shows the comparison of experimental results in a more summarized form for various feature sets. It is observed that linear SVM has the lowest AUCH values. Due to the nonlinearity in the feature space, linear SVM is unable to learn this space effectively. However, its AUCH improves as the feature set grows. To promote diversity, diverse types of component classifiers are usually used in the development of composite classifiers. For this reason, the linear classifier is included to enhance diversity in the decision space. PolySVM and RbfSVM have shown roughly equal performance. Both are capable of constructing a nonlinear decision boundary. However, the performance of our OCC is superior to both of these individual SVM classifiers. During the GP evolution process, composite classifiers might have extracted useful information from the decision boundaries of the constituent kernels. Another advantage is that composite classifiers have shown higher performance specifically for small feature sets of sizes 5, 10, and 20. The general order of performance of the classifiers is:

$$\mathrm{OCC}_{\mathrm{AUCH}} > \left(\mathrm{PolySVM}_{\mathrm{AUCH}} \approx \mathrm{RbfSVM}_{\mathrm{AUCH}}\right) > \mathrm{LinSVM}_{\mathrm{AUCH}}$$

[Figure 6.6 plots: TPR versus FPR for linSVM, polySVM, rbfSVM and OCC in each panel.]

Figure 6.6 (a): ROC for 10 features

Figure 6.6 (b): ROC for 100 features

Figure 6.6 (c): ROC for 500 features

Figure 6.6 (d): ROC for 1000 features

[Figure 6.7 bar chart: AUCH of LinearSVM, PolySVM, RbfSVM and OCC for feature sets of size 5, 10, 20, 50, 100, 250, 500 and 1000.]

Figure 6.7: AUCHs of ROC curves of different classifiers

Overall classifier performance

It is observed from Figure 6.7 that the AUCH of the classifiers is enhanced as the number of features increases. Further analysis is carried out to study the performance of each classifier in terms of the AUCH of AUCHs. This measure is more compact because it incorporates the performance of a classifier with respect to the variation in the feature sets. The procedure adopted is as follows. In the first step, the AUCH values of a classifier for different feature sets are obtained. A graph of AUCH versus feature set size is plotted, as shown in Figure 6.8. Finally, the AUCH of these AUCH curves is computed. In the second step, the average AUCH of each classifier is also calculated. The difference between the AUCH of AUCHs and the average AUCH of each classifier is then determined. This difference represents the variation in the classifier's performance with respect to the size of the feature set. A higher difference indicates lower robustness of a classifier. The bar chart in Figure 6.9 shows the overall performance of the classifiers in terms of AUCH of AUCHs, average AUCH and their percentage difference. It is observed that linear SVM has the lowest AUCH of AUCHs value of 0.9205 and the largest percentage difference of 7.45 (0.9205 - 0.846). The other two component classifiers have comparable AUCH of AUCHs values and relatively small percentage differences. However, OCC has the largest AUCH of AUCHs value of 0.988 and the smallest percentage difference of 2.9 (0.988 - 0.96). These results illustrate two main advantages of OCC, i.e. higher optimality and robustness against the variation in feature sets.
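The robustness comparison can be reproduced arithmetically from the values plotted in Figure 6.9. The sketch below is only a recomputation from those rounded figures, with a hypothetical function name; for OCC it gives about 2.8 rather than the 2.9 quoted in the text, which may simply reflect rounding of the plotted values.

```python
# (AUCH of AUCHs, average AUCH) for each classifier, as given in Figure 6.9.
results = {
    "LinearSVM": (0.9205, 0.846),
    "PolySVM": (0.9603, 0.909),
    "RbfSVM": (0.9586, 0.91),
    "OCC": (0.988, 0.96),
}


def percentage_difference(auch_of_auchs, average_auch):
    """Gap between the two summary measures, in percentage points."""
    return 100.0 * (auch_of_auchs - average_auch)


differences = {name: round(percentage_difference(a, m), 2)
               for name, (a, m) in results.items()}

# A smaller gap means performance varies less with feature set size.
most_robust = min(differences, key=differences.get)
best_overall = max(results, key=lambda name: results[name][0])
print(differences, most_robust, best_overall)
```

On these figures the same classifier has both the largest AUCH of AUCHs and the smallest gap, which is the basis for the claim of higher optimality and robustness.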


Figure 6.8 (left): AUCH curves of different classifiers; Figure 6.9 (right): overall classifier performance. [Plots not reproduced. Figure 6.8 plots AUCH against the number of features (10 to 1000) for linSVM, polySVM, rbfSVM and OCC. Figure 6.9 reports AUCH of AUCHs / average AUCH per classifier: LinearSVM 0.9205 / 0.846; PolySVM 0.9603 / 0.909; RbfSVM 0.9586 / 0.91; OCC 0.988 / 0.96.]

Performance comparison of OCC with optimized SVM classifiers

The quantitative results in Table 6.2 and Figure 6.10 highlight the improved performance of the optimized SVM classifiers. Even though the component SVM classifiers of OCC are not optimized, OCC still gives a margin of improvement over the optimized SVM classifiers. Table 6.2 shows that the optimum values of γ and C depend on the type of kernel function and the size of the feature set, and that they vary irregularly as the number of features increases. The table also indicates an improvement for the SVM classifiers after optimization; the performance of linear SVM, for example, improves, but not appreciably. The SVM classifier based on the RBF kernel is more accurate than linear SVM. It is also more efficient than the polynomial kernel because it has fewer parameters to adjust.

Table 6.2: Performance comparison of OCC with optimized SVM classifiers

Classifier   Feature set  5       10      20      50      100     250     500     1000    5000
Opt_LinSVM   AUCH         0.6779  0.7896  0.8167  0.8726  0.9007  0.927   0.9263  0.9329  0.9529
Opt_LinSVM   Optimal C    1.1     1.2     4.6     3.8     5.1     4.4     4.8     4.8     5.1
Opt_PolySVM  AUCH         0.7655  0.8864  0.9071  0.9609  0.9689  0.9701  0.9730  0.9754  0.9816
Opt_PolySVM  Optimal C    16      0.06    5.65    22.62   32.01   2.02    0.08    0.176   0.21
Opt_PolySVM  Optimal γ    0.25    2.0     0.25    0.35    0.17    0.71    2.82    2.82    3.1
Opt_RbfSVM   AUCH         0.8587  0.9196  0.9319  0.9603  0.9699  0.9728  0.9785  0.9784  0.9846
Opt_RbfSVM   Optimal C    32      2.0     4.0     128     128     4.02    2.2     4.2     8.0
Opt_RbfSVM   Optimal γ    8.0     4.0     2.0     0.25    0.12    2.01    8.1     2.1     2.0
OCC          AUCH         0.8900  0.9344  0.9482  0.9629  0.9705  0.9766  0.9871  0.9947  0.9926
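To make the margin of improvement concrete, the sketch below compares the OCC AUCH values from Table 6.2 with the best optimized SVM at each feature-set size. It is only an illustrative check on the reported numbers; the variable names are assumptions of this example.

```python
FEATURE_SETS = [5, 10, 20, 50, 100, 250, 500, 1000, 5000]

# AUCH values as reported in Table 6.2.
AUCH = {
    "Opt_LinSVM": [0.6779, 0.7896, 0.8167, 0.8726, 0.9007, 0.927, 0.9263, 0.9329, 0.9529],
    "Opt_PolySVM": [0.7655, 0.8864, 0.9071, 0.9609, 0.9689, 0.9701, 0.9730, 0.9754, 0.9816],
    "Opt_RbfSVM": [0.8587, 0.9196, 0.9319, 0.9603, 0.9699, 0.9728, 0.9785, 0.9784, 0.9846],
    "OCC": [0.8900, 0.9344, 0.9482, 0.9629, 0.9705, 0.9766, 0.9871, 0.9947, 0.9926],
}

svm_names = [name for name in AUCH if name != "OCC"]
margins = []
for i, size in enumerate(FEATURE_SETS):
    # Best optimized SVM for this feature-set size.
    best_name = max(svm_names, key=lambda name: AUCH[name][i])
    margin = AUCH["OCC"][i] - AUCH[best_name][i]
    margins.append(margin)
    print(f"{size:5d} features: best SVM = {best_name:11s} OCC margin = {margin:+.4f}")
```

On these numbers the margin is positive for every feature set and largest for the 5-feature set.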


Figure 6.10: AUCHs of ROC curves of different classifiers. [Plot not reproduced; curves: opt_LinSVM, opt_PolySVM, opt_RbfSVM, OCC; horizontal axis: number of features (5 to 1000); vertical axis: AUCH.]

Figures 6.11 to 6.13 show the effect of varying γ and C on the performance of the different kernels for 100 features. Compared with parameter C, parameter γ has a more significant effect on the performance of the SVM kernels. Figure 6.11 shows the behavior of RbfSVM with respect to γ and C; its highest AUCH value is 0.9699 at γ = 0.12 and C = 128. In Figure 6.12, the highest AUCH value of PolySVM is 0.9689 at γ = 0.17 and C = 32. Figure 6.13 indicates that the highest AUCH value of linear SVM is 0.9007 at C = 5.1.

Figure 6.11 (left): AUCH surface of the RBF kernel parameterized by γ and C; Figure 6.12: AUCH surface of the polynomial kernel parameterized by γ and C at d = 3, coefficient r = 1


Figure 6.13: AUCH versus parameter C of the linear kernel

Temporal cost of optimized SVM classifiers and OCC

Table 6.3 compares the training and testing times of the optimized SVM classifiers and OCC. The temporal cost increases with the size of the feature set. When kernel parameters are optimized through grid search, the temporal cost also depends on the grid range and its step size. The training and testing times in this table are reported for 200 data samples. The overall temporal cost of the classification models is:

OCC > opt_RbfSVM > opt_PolySVM > opt_LinSVM

The optimized linear SVM takes the least training and testing time, but its classification performance is poor. OCC takes comparatively more training and testing time, but it achieves better classification performance. The training and testing time of OCC depends on several factors, such as the training data size, the length of the feature set, the maximum tree depth, the number of tree nodes and the size of the search space.

Table 6.3: Comparison between the training and testing times (in seconds)

Classifier   Feature set  5        10       50       100      500
Opt_LinSVM   Train        2.781    3.001    3.105    4.328    18.42
Opt_LinSVM   Test         0.0050   0.0051   0.0051   0.0051   0.0151
Opt_PolySVM  Train        201.02   217.80   220.11   271.81   1263.2
Opt_PolySVM  Test         0.0160   0.0310   0.0150   0.0320   0.2500
Opt_RbfSVM   Train        2278.4   1343.4   1864.1   5491.4   19984.1
Opt_RbfSVM   Test         0.0470   0.0580   0.0560   0.0780   0.4780
OCC          Train        3612.4   4343.7   5643.1   9021.4   40402.1
OCC          Test         1.470    2.580    2.860    2.070    3.480
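The dependence of grid-search cost on the grid range and step size can be illustrated by counting how many SVM trainings a grid implies. The exponent ranges, step sizes and fold count below are hypothetical values chosen for this sketch, not the settings used in the experiments, and the use of cross-validation is likewise an assumption.

```python
def grid_points(low_exp, high_exp, step):
    """Exponents low_exp, low_exp + step, ..., up to high_exp (inclusive)."""
    count = int((high_exp - low_exp) / step) + 1
    return [low_exp + i * step for i in range(count)]


def trainings_needed(param_grids, folds):
    """Number of SVM fits for an exhaustive grid search with cross-validation."""
    total = folds
    for grid in param_grids:
        total *= len(grid)
    return total


# Hypothetical log2 grids for C and gamma (assumed, not from the chapter).
coarse_c = grid_points(-5, 15, 2)
coarse_gamma = grid_points(-15, 3, 2)
fine_c = grid_points(-5, 15, 0.5)
fine_gamma = grid_points(-15, 3, 0.5)

print(trainings_needed([coarse_c], folds=5))                # one parameter: C only
print(trainings_needed([coarse_c, coarse_gamma], folds=5))  # two parameters: C and gamma
print(trainings_needed([fine_c, fine_gamma], folds=5))      # same ranges, finer step
```

Adding a second parameter multiplies the number of fits by the size of its grid, and a finer step over the same range increases the count further, which is consistent with the cost growing with the number of kernel parameters.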

OCC behavior in a partially different feature space

Here OCC is trained on a particular feature space and its behavior is studied on partially different feature spaces. In Table 6.4, for example, OCC-10 is trained on the 10-feature set, but its performance is analyzed on the same feature space as well as on the other feature spaces. The table shows that, at 10 features, OCC-10 gives the maximum AUCH value (0.934) compared with the OCCs trained on the other, partially different feature spaces. Thus, as expected, OCC performs best along the diagonal. In each column, from top to bottom, there is a gradual increase in the AUCH values. In this respect OCC behaves like a normal classifier, i.e. more information results in higher performance. Along the horizontal direction, however, OCC performs irregularly. This might be due to the diverse nature of the GP search space in each run: the optimal solution found in one run may be partially or entirely different from the previous GP solution.

Table 6.4: OCC performance at various feature spaces

Feature size  OCC-10  OCC-20  OCC-50  OCC-100  OCC-500  OCC-1000  OCC-5000
FS = 10       0.934   0.870   0.890   0.863    0.8776   0.7999    0.8751
FS = 20       0.890   0.938   0.892   0.884    0.9002   0.8179    0.902
FS = 50       0.900   0.906   0.962   0.911    0.9032   0.843     0.9276
FS = 100      0.913   0.919   0.938   0.970    0.9316   0.893     0.9313
FS = 500      0.937   0.947   0.941   0.948    0.9871   0.9084    0.9458
FS = 1000     0.945   0.950   0.958   0.947    0.953    0.9947    0.9537
FS = 5000     0.957   0.959   0.959   0.965    0.9426   0.9262    0.9826
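The diagonal behavior described above can be checked directly on the Table 6.4 values. The following sketch assumes a particular layout (rows are the feature-set sizes used for testing, columns are the OCCs trained on each size) and tests whether each diagonal entry is the largest value in its row.

```python
SIZES = [10, 20, 50, 100, 500, 1000, 5000]

# AUCH[i][j]: OCC trained on SIZES[j] features, evaluated on SIZES[i] features.
AUCH = [
    [0.934, 0.870, 0.890, 0.863, 0.8776, 0.7999, 0.8751],
    [0.890, 0.938, 0.892, 0.884, 0.9002, 0.8179, 0.902],
    [0.900, 0.906, 0.962, 0.911, 0.9032, 0.843, 0.9276],
    [0.913, 0.919, 0.938, 0.970, 0.9316, 0.893, 0.9313],
    [0.937, 0.947, 0.941, 0.948, 0.9871, 0.9084, 0.9458],
    [0.945, 0.950, 0.958, 0.947, 0.953, 0.9947, 0.9537],
    [0.957, 0.959, 0.959, 0.965, 0.9426, 0.9262, 0.9826],
]

diagonal_is_best = []
for i, row in enumerate(AUCH):
    # Column index of the best-performing OCC on this feature-set size.
    best_col = max(range(len(row)), key=row.__getitem__)
    diagonal_is_best.append(best_col == i)
    print(f"FS = {SIZES[i]:4d}: best is OCC-{SIZES[best_col]} (AUCH {row[best_col]})")

print(all(diagonal_is_best))
```

With the reported values, the OCC trained on a given feature-set size is the best performer on that same size in every row.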

OCC behavior in an entirely different feature space

In this case, the performance of OCC is analyzed on entirely different feature spaces of equal size. In the first experiment, the features in the testing dataset2 are sorted in ascending order and the last 250, most distinctive features are selected. These features are divided into five separate feature sets of equal size. Each feature set contains 50 features, and the last feature set contains the most discriminant features. OCC is trained on the last feature space. The same steps are carried out in the second experiment to construct feature sets of 100 features each. The experimental results are given in the bar charts of Figures 6.14 and 6.15. These figures show that OCC performs well on the last feature set, because OCC was trained on this specific feature space, while on the other feature spaces the performance of OCC is equal to that of PolySVM or RbfSVM. This is because those SVM classifiers are trained and tested on their own feature spaces, which is not the case for OCC. Even then, the performance of OCC is not less than that of the best of its component classifiers. The figures also show that the last feature sets are the most discriminant and that the performance of the classifiers degrades steadily across the other feature sets. This may be due to the gradual decrease in the discrimination power of the SIMBA feature selection algorithm. The features are sorted such that the last feature set holds the most distinctive features, while the least distinguishable features lie in the first feature set.
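The construction of the feature sets can be sketched as follows. The scores here are synthetic stand-ins for whatever ranking the feature selection step produces, and `split_top_features` is a hypothetical helper name; only the sort, select and split steps follow the description above.

```python
def split_top_features(scores, top_n=250, n_sets=5):
    """Return n_sets equal lists of feature indices, least to most distinctive."""
    if top_n % n_sets != 0:
        raise ValueError("top_n must be divisible by n_sets")
    # Sort feature indices by ascending score, so the best features come last.
    ranked = sorted(range(len(scores)), key=lambda i: scores[i])
    selected = ranked[-top_n:]
    size = top_n // n_sets
    return [selected[k * size:(k + 1) * size] for k in range(n_sets)]


# Synthetic example: 1000 features whose score equals their index.
scores = [float(i) for i in range(1000)]
feature_sets = split_top_features(scores)

print([len(s) for s in feature_sets])
print(feature_sets[-1][0], feature_sets[-1][-1])
```

Calling `split_top_features(scores, top_n=500, n_sets=5)` would give the five 100-feature sets of the second experiment under the same assumptions.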


Figure 6.14 (left): performance of classifiers on feature sets of 50 features; Figure 6.15 (right): performance of classifiers on feature sets of 100 features. [Plots not reproduced; bars: LinearSVM, PolySVM, RbfSVM, OCC; horizontal axis: feature sets (1st to 5th); vertical axis: AUCH.]

An example of a numerical classifier expression, in prefix form, developed by GP at 1000 features is:

f(L,R,P)=plus(le(sin(P),le(plus(sin(L),sin(sin(P))),abs(minus(L,abs(minus(sin(sin(L)),0.12826)))))),plus(minus(R,0.15),plus(plus(sin(plus(le(0.047,abs(le(plus(R,plus(times(kozadivide(P,plus(sin(abs(L)),plus(cos(L),sin(sin(R))))),0.51),P)),le(sin(sin(L)),abs(minus(sin(L),plus(sin(plus(le(0.047,abs(minus(sin(sin(L)),0.12))),sin(L))),minus(sin(L),abs(minus(sin(sin(L)),0.12)))))))))),sin(abs(le(plus(0.047,sin(absus(minus(R,0.15),plus(plus(sin(plus(le(0.047,abs(le(plus(R,plus(times(kozadivide(P,plus(sin(abs(L)),plus(cos(L),sin(sin(R))))),0.51),P)),le(sin(sin(L)),abs(minus(sin(L),plus(sin(plus(le(0.047,abs(minus(sin(sin(L)),0.12))),sin(L))),minus(sin(L),abs(minus(sin(sin(L)),0.12)))))))))),(mnus(R,0.15),pmnus(L,plus(sin(plus(le(0.04,abs(minus(sin(abs(minus(sin(L),plus(sin(plus(le(sin(L),abs(minus(sin(sin(L)),0.12))),sin(L))),minus(sin(L),abs(minus(sin(sin(L)),0.12))))))),0.12))),sin(R))),R))))),le(sin(sin(abs(minus(sin(L),plus)))))))).

This expression shows that the OCC function depends on the predicted arrays (L, R, P) of the kernels, random constants, arithmetic operators and other special operators.
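To illustrate how such a prefix expression maps the component outputs to a combined decision value, here is a minimal Python evaluator. The semantics given to the primitives are assumptions: `le` is taken to return 1.0 when its first argument is less than or equal to the second and 0.0 otherwise, and `kozadivide` is taken to be protected division that returns 1.0 when the denominator is zero. The short expression evaluated at the end is a made-up fragment built from the same primitives, not the evolved classifier itself.

```python
import math
import re

# Primitive set inferred from the names in the evolved expression.
# The behaviour of `le` and `kozadivide` is an assumption of this sketch.
PRIMITIVES = {
    "plus": lambda a, b: a + b,
    "minus": lambda a, b: a - b,
    "times": lambda a, b: a * b,
    "kozadivide": lambda a, b: 1.0 if b == 0 else a / b,
    "le": lambda a, b: 1.0 if a <= b else 0.0,
    "sin": math.sin,
    "cos": math.cos,
    "abs": abs,
}

TOKEN = re.compile(r"\s*([A-Za-z_]\w*|\d+\.?\d*|\.\d+|[(),])")


def tokenize(text):
    """Split a prefix expression into names, numbers and punctuation."""
    tokens, pos = [], 0
    text = text.strip()
    while pos < len(text):
        match = TOKEN.match(text, pos)
        if match is None:
            raise ValueError(f"unexpected character at position {pos}")
        tokens.append(match.group(1))
        pos = match.end()
    return tokens


def parse(tokens):
    """Build a nested (name, args) tree; leaves stay as strings."""
    head = tokens.pop(0)
    if tokens and tokens[0] == "(":
        tokens.pop(0)
        args = [parse(tokens)]
        while tokens[0] == ",":
            tokens.pop(0)
            args.append(parse(tokens))
        if tokens.pop(0) != ")":
            raise ValueError("missing closing parenthesis")
        return (head, args)
    return head


def evaluate(node, env):
    """Evaluate a parsed tree given terminal values such as L, R and P."""
    if isinstance(node, tuple):
        name, args = node
        return PRIMITIVES[name](*(evaluate(arg, env) for arg in args))
    return env[node] if node in env else float(node)


# Hypothetical fragment and made-up component outputs, for illustration only.
expression = "plus(le(sin(P),0.047),minus(R,0.15))"
outputs = {"L": 0.2, "R": 0.9, "P": 0.7}
score = evaluate(parse(tokenize(expression)), outputs)
print(score)
```

In practice L, R and P would be arrays of component predictions; scalars are used here to keep the sketch free of external dependencies.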

6.7.

Summary of the Chapter

The chapter has described the development of OCC and attempted to answer the research questions of Section 6.2.

1. The answer to the first research question is confirmed by the experimental results. OCC is more robust and general at almost all feature sets. During GP evolution, OCC learns the most favorable distribution within the data space, which gives this technique a better chance of combining the decision space efficiently. OCC gives higher classification performance than its individual optimized SVM classifiers. The second most significant finding is that the method eliminates the need for optimal kernel function selection and model selection, because suitable combination functions are generated automatically during the GP search. It is therefore possible to achieve a high-performance SVM classification model without resorting to finding an optimal kernel function and model selection.


2. The results demonstrate that OCC is comparatively more accurate when less information is available. It attains a high margin of improvement at small feature sets.

3. The answer to the last research question is that it is possible to analyze graphically the relation between accuracy and the complexity involved in developing a composite classifier. The complexity of the evolved numerical expressions increases as more and more constructive blocks build up within the GP tree.

In a wider sense, the contribution of this chapter is that it has explored the applicability of the GP based combination technique to SVM classification models. Our current and future investigations will explore the potential of GP to optimally combine the decision information from its component classifiers.
