Chap5.5 Regularization

Machine Learning

Srihari

Regularization in Neural Networks

Sargur Srihari

Topics in Neural Network Regularization

What is regularization?

Methods

1. Determining optimal number of hidden units

2. Use of regularizer in error function

Linear transformations and consistent Gaussian priors

3. Early stopping

Invariances

Tangent propagation

Training with transformed data

Convolutional networks

Soft weight sharing

What is Regularization?

In machine learning (also, statistics and inverse problems):

introducing additional information to prevent over-fitting (or to solve an ill-posed problem)

This information is usually a penalty for complexity, e.g.,

restrictions for smoothness

bounds on the vector space norm

Theoretical justification for regularization:

attempts to impose Occam's razor on the solution

From a Bayesian point of view

Regularization corresponds to imposition of prior distributions on model parameters
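The Bayesian reading can be made concrete with a short derivation. This is a sketch that assumes a zero-mean isotropic Gaussian prior with precision $\alpha$ (a symbol introduced here for illustration) and a data set $\mathcal{D}$:

```latex
\begin{align*}
p(\mathbf{w}\mid\mathcal{D}) &\propto p(\mathcal{D}\mid\mathbf{w})\,p(\mathbf{w}),
\qquad p(\mathbf{w}) = \mathcal{N}(\mathbf{w}\mid\mathbf{0},\,\alpha^{-1}\mathbf{I}) \\
-\ln p(\mathbf{w}\mid\mathcal{D}) &= -\ln p(\mathcal{D}\mid\mathbf{w})
  + \frac{\alpha}{2}\,\mathbf{w}^{T}\mathbf{w} + \text{const}
\end{align*}
```

Under these assumptions, maximizing the posterior is the same as minimizing the negative log likelihood plus a quadratic penalty on the weights.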

1. Regularization by determining no. of hidden units

Number of input and output units is determined by dimensionality of data set

Number of hidden units M is a free parameter

Adjusted to get best predictive performance

Possible approach is to get maximum likelihood estimate of M for balance between under-fitting and over-fitting

Effect of Varying Number of Hidden Units

Sinusoidal regression problem

Two-layer network trained on 10 data points

M = 1, 3 and 10 hidden units

Minimizing sum-of-squares error function using conjugate gradient descent

Generalization error is not a simple function of M due to presence of local minima in error function

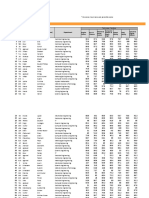

Using Validation Set to Determine Number of Hidden Units

Plot a graph choosing random starts and different numbers of hidden units M

30 random starts for each M

Figure: sum-of-squares test error for the polynomial data set, plotted against number of hidden units M

Overall best validation set performance happened at M = 8
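The selection procedure can be sketched in a few lines. This is a minimal illustration, not the setup behind the figure: it assumes noisy sinusoidal data, plain gradient descent in place of conjugate gradients, only 3 random starts per M instead of 30, and hypothetical helper names (`train_mlp`, `select_hidden_units`).

```python
import numpy as np

def train_mlp(x, t, M, rng, steps=2000, lr=0.02):
    """Fit a 1-input, M-hidden-unit tanh network by gradient descent (assumed optimizer)."""
    W1, b1 = rng.normal(size=(M, 1)), np.zeros((M, 1))
    W2, b2 = rng.normal(size=(1, M)), np.zeros((1, 1))
    X, T = x[None, :], t[None, :]
    for _ in range(steps):
        Z = np.tanh(W1 @ X + b1)                  # hidden activations
        dY = (W2 @ Z + b2 - T) / X.shape[1]       # gradient of mean squared error w.r.t. outputs
        dZ = (W2.T @ dY) * (1.0 - Z ** 2)         # back-propagate through tanh
        W2 -= lr * dY @ Z.T
        b2 -= lr * dY.sum(axis=1, keepdims=True)
        W1 -= lr * dZ @ X.T
        b1 -= lr * dZ.sum(axis=1, keepdims=True)
    return W1, b1, W2, b2

def sum_of_squares(params, x, t):
    """Sum-of-squares error of a trained network on the points (x, t)."""
    W1, b1, W2, b2 = params
    y = (W2 @ np.tanh(W1 @ x[None, :] + b1) + b2).ravel()
    return 0.5 * np.sum((y - t) ** 2)

def select_hidden_units(x_tr, t_tr, x_val, t_val, candidates, restarts, seed=0):
    """Train `restarts` networks per candidate M; return the M with the lowest validation error."""
    rng = np.random.default_rng(seed)
    val_err = np.empty((len(candidates), restarts))
    for i, M in enumerate(candidates):
        for r in range(restarts):
            params = train_mlp(x_tr, t_tr, M, rng)
            val_err[i, r] = sum_of_squares(params, x_val, t_val)
    best = np.unravel_index(np.argmin(val_err), val_err.shape)
    return candidates[best[0]], val_err

# Illustrative data (assumption): 10 training and 50 validation points from a noisy sinusoid
rng = np.random.default_rng(0)
x_tr, x_val = rng.uniform(0, 1, 10), rng.uniform(0, 1, 50)
t_tr = np.sin(2 * np.pi * x_tr) + 0.1 * rng.normal(size=10)
t_val = np.sin(2 * np.pi * x_val) + 0.1 * rng.normal(size=50)

candidates = [1, 3, 10]
best_M, val_err = select_hidden_units(x_tr, t_tr, x_val, t_val, candidates, restarts=3)
```

Each row of `val_err` holds the validation errors of the restarts for one M; the spread within a row reflects the local minima mentioned above.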

2. Regularization using Simple Weight Decay

Generalization error is not a simple function of M

Due to presence of local minima

Need to control network complexity to avoid over-fitting

Choose a relatively large M and control complexity by addition of regularization term

Simplest regularizer is weight decay

$\tilde{E}(\mathbf{w}) = E(\mathbf{w}) + \frac{\lambda}{2}\mathbf{w}^{T}\mathbf{w}$

Effective model complexity determined by choice of regularization coefficient $\lambda$

Regularizer is equivalent to a zero mean Gaussian prior over weight vector w

Simple weight decay has certain shortcomings
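The effect of the weight decay term can be sketched as follows. To keep the example short it assumes a linear model rather than a neural network, so that the minimizer is available in closed form; the data and function names are illustrative.

```python
import numpy as np

def sum_of_squares(w, X, t):
    """Unregularized error E(w) = 1/2 * sum_n (x_n^T w - t_n)^2 (linear model, an assumption)."""
    r = X @ w - t
    return 0.5 * r @ r

def weight_decay_error(w, X, t, lam):
    """Regularized error E~(w) = E(w) + (lam / 2) * w^T w."""
    return sum_of_squares(w, X, t) + 0.5 * lam * (w @ w)

def weight_decay_grad(w, X, t, lam):
    """Gradient of E~: the data term plus lam * w, which pulls every weight toward zero."""
    return X.T @ (X @ w - t) + lam * w

def minimizer(X, t, lam):
    """Closed-form minimizer of E~ for the linear model."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ t)

# Synthetic data, chosen only for illustration
rng = np.random.default_rng(0)
X = rng.normal(size=(20, 3))
t = X @ np.array([1.0, -2.0, 0.5]) + 0.1 * rng.normal(size=20)

w_small = minimizer(X, t, lam=0.01)    # weak regularization
w_large = minimizer(X, t, lam=100.0)   # strong regularization shrinks the weights
```

Increasing `lam` shrinks the norm of the solution, which is the sense in which the coefficient controls effective complexity.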

Consistent Gaussian priors

Simple weight decay is inconsistent with certain scaling properties of network mappings

To show this, consider a multi-layer perceptron network with two layers of weights and linear output units

Set of input variables $\{x_i\}$ and output variables $\{y_k\}$

Activations of hidden units in first layer have the form

$z_j = h\left(\sum_i w_{ji} x_i + w_{j0}\right)$

Activations of output units are

$y_k = \sum_j w_{kj} z_j + w_{k0}$

Linear Transformations of input/output Variables

Suppose we perform a linear transformation of input data

$x_i \to \tilde{x}_i = a x_i + b$

We can arrange for mapping performed by network to be unchanged if we transform weights and biases from inputs to hidden units as

$w_{ji} \to \tilde{w}_{ji} = \frac{1}{a} w_{ji}$ and $w_{j0} \to \tilde{w}_{j0} = w_{j0} - \frac{b}{a}\sum_i w_{ji}$

Similar linear transformation of output variables of network is

$y_k \to \tilde{y}_k = c y_k + d$

Can be achieved by transformation of second layer weights and biases

$w_{kj} \to \tilde{w}_{kj} = c w_{kj}$ and $w_{k0} \to \tilde{w}_{k0} = c w_{k0} + d$
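These compensating transformations can be checked numerically. The sketch below assumes tanh hidden units and arbitrary random weights; the scalars a, b, c, d are illustrative values.

```python
import numpy as np

def forward(x, W1, b1, W2, b2):
    """Two-layer network: tanh hidden units, linear outputs."""
    z = np.tanh(W1 @ x + b1)
    return W2 @ z + b2

rng = np.random.default_rng(1)
D, M, K = 3, 4, 2                                   # inputs, hidden units, outputs (illustrative)
W1, b1 = rng.normal(size=(M, D)), rng.normal(size=M)
W2, b2 = rng.normal(size=(K, M)), rng.normal(size=K)
x = rng.normal(size=D)
a, b, c, d = 2.5, -0.7, 0.4, 3.0                    # arbitrary transformation constants

y = forward(x, W1, b1, W2, b2)

# Input transformation x -> a*x + b, compensated in the first layer
x_t = a * x + b
W1_t = W1 / a
b1_t = b1 - (b / a) * W1.sum(axis=1)
y_in = forward(x_t, W1_t, b1_t, W2, b2)             # should reproduce y

# Output transformation y -> c*y + d, realized in the second layer
W2_t = c * W2
b2_t = c * b2 + d
y_out = forward(x, W1, b1, W2_t, b2_t)              # should equal c*y + d
```

The check works because the shift b is absorbed by the first-layer biases and the scale a by the first-layer weights, so the hidden activations are identical in both networks.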

Desirable invariance property of regularizer

Train one network using the original data

Train another using data in which the input and/or target variables are transformed by one of the linear transformations

The two trained networks should then differ only by the weight transformations given

Regularizer should have this property

Otherwise it arbitrarily favors one solution over another

Simple weight decay does not have this property

Treats all weights and biases on an equal footing

Regularizer invariant under linear transformation

A regularizer invariant to re-scaling of weights and shifts of biases is

$\frac{\lambda_1}{2}\sum_{w \in W_1} w^2 + \frac{\lambda_2}{2}\sum_{w \in W_2} w^2$

where $W_1$ are weights of first layer and $W_2$ of second layer

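This regularizer remains unchanged under weight transformations provided the regularization parameters are re-scaled using

$\lambda_1 \to a^{2}\lambda_1$ and $\lambda_2 \to c^{-2}\lambda_2$

since the first-layer weights are divided by $a$ and the second-layer weights are multiplied by $c$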

However, it corresponds to prior of the form

$p(\mathbf{w} \mid \alpha_1, \alpha_2) \propto \exp\left(-\frac{\alpha_1}{2}\sum_{w \in W_1} w^2 - \frac{\alpha_2}{2}\sum_{w \in W_2} w^2\right)$

$\alpha_1$ and $\alpha_2$ are hyper-parameters

This is an improper prior which cannot be normalized

Leads to difficulties in selecting regularization coefficients and in model comparison within Bayesian framework

Instead include separate priors for biases with their own hyper-parameters
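A quick numerical check of the invariance, as a sketch with arbitrary weights and constants: when first-layer weights are divided by a and second-layer weights multiplied by c, the layer-wise penalty keeps its value only if its coefficients absorb the squared scale factors. The function name and values are illustrative.

```python
import numpy as np

def layer_regularizer(W1, W2, lam1, lam2):
    """(lam1/2) * sum of squared first-layer weights + (lam2/2) * the same for the second layer.
    Biases are deliberately excluded from both sums."""
    return 0.5 * lam1 * np.sum(W1 ** 2) + 0.5 * lam2 * np.sum(W2 ** 2)

rng = np.random.default_rng(2)
W1 = rng.normal(size=(4, 3))      # first-layer weights (illustrative shapes)
W2 = rng.normal(size=(2, 4))      # second-layer weights
lam1, lam2 = 0.3, 0.8
a, c = 2.5, 0.4                   # input and output scale factors

# Weights of the equivalent network after the linear transformations
W1_t, W2_t = W1 / a, c * W2

omega = layer_regularizer(W1, W2, lam1, lam2)
# Coefficients re-scaled by a^2 and c^-2: the penalty value is preserved
omega_rescaled = layer_regularizer(W1_t, W2_t, a ** 2 * lam1, lam2 / c ** 2)
# Coefficients left alone: the penalty changes, so one solution is favored over the other
omega_fixed = layer_regularizer(W1_t, W2_t, lam1, lam2)
```

A single shared coefficient, as in simple weight decay, cannot absorb two different scale factors, which is why the layers need separate coefficients.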

Example: Effect of hyperparameters

Network with single input (x value ranging from -1 to +1), single linear output (y value ranging from -60 to +40) and 12 hidden units with tanh activation functions

Priors are governed by four hyper-parameters

$\alpha_1^{b}$, precision of Gaussian of first layer bias

$\alpha_1^{w}$, precision of Gaussian of first layer weights

$\alpha_2^{b}$, precision of Gaussian of second layer bias

$\alpha_2^{w}$, precision of Gaussian of second layer weights

Draw samples from prior, plot network functions (output against input)

For each setting of the hyper-parameters, five sampled functions are plotted, one per color
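Drawing functions from such a prior can be sketched as below. The hyper-parameter values are illustrative assumptions, not the settings used in the figure, and the function name is hypothetical.

```python
import numpy as np

def sample_network_functions(x, alpha_w1, alpha_b1, alpha_w2, alpha_b2,
                             n_hidden=12, n_samples=5, seed=0):
    """Sample weights from zero-mean Gaussian priors and evaluate the resulting networks on x."""
    rng = np.random.default_rng(seed)
    curves = []
    for _ in range(n_samples):
        # A Gaussian with precision alpha has standard deviation alpha ** -0.5
        w1 = rng.normal(0.0, alpha_w1 ** -0.5, size=n_hidden)
        b1 = rng.normal(0.0, alpha_b1 ** -0.5, size=n_hidden)
        w2 = rng.normal(0.0, alpha_w2 ** -0.5, size=n_hidden)
        b2 = rng.normal(0.0, alpha_b2 ** -0.5)
        z = np.tanh(np.outer(x, w1) + b1)    # hidden activations, shape (len(x), n_hidden)
        curves.append(z @ w2 + b2)           # single linear output
    return np.array(curves)

x = np.linspace(-1.0, 1.0, 50)
# High second-layer precision (assumed values): small output weights, small vertical range
narrow = sample_network_functions(x, 1.0, 1.0, 100.0, 100.0)
# Low second-layer precision (assumed values): large output weights, large vertical range
wide = sample_network_functions(x, 1.0, 1.0, 0.01, 0.01)
```

Plotting each row of `narrow` or `wide` against `x` gives five curves per setting. In this sketch the second-layer precisions set the vertical scale, while the first-layer precisions govern how quickly the functions vary with the input.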

3. Early Stopping

Alternative to regularization in controlling complexity

Error measured with an independent validation set shows initial decrease in error and then an increase

Training stopped at point of smallest error with validation data

Effectively limits network complexity

Figure panels: training set error and validation set error, each plotted against iteration step
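The stopping rule can be sketched as a training loop that remembers the weights with the lowest validation error. The example assumes a linear model trained by gradient descent and a `patience` parameter (how many steps to wait without improvement), neither of which comes from the slides.

```python
import numpy as np

def train_with_early_stopping(X_tr, t_tr, X_val, t_val,
                              lr=0.01, max_steps=5000, patience=20):
    """Gradient descent on training error; return the weights with smallest validation error."""
    w = np.zeros(X_tr.shape[1])
    best_w, best_err, best_step = w.copy(), np.inf, 0
    history = []
    for step in range(max_steps):
        val_err = 0.5 * np.sum((X_val @ w - t_val) ** 2)
        history.append(val_err)
        if val_err < best_err:
            # New best point on the validation curve: remember these weights
            best_w, best_err, best_step = w.copy(), val_err, step
        elif step - best_step >= patience:
            # Validation error has not improved for `patience` steps: stop
            break
        w -= lr * X_tr.T @ (X_tr @ w - t_tr) / len(t_tr)
    return best_w, best_err, best_step, history

# Synthetic problem (assumption): few training points relative to the number of weights
rng = np.random.default_rng(4)
w_true = rng.normal(size=10)
X_tr, X_val = rng.normal(size=(15, 10)), rng.normal(size=(30, 10))
t_tr = X_tr @ w_true + rng.normal(size=15)
t_val = X_val @ w_true + rng.normal(size=30)

best_w, best_err, best_step, history = train_with_early_stopping(X_tr, t_tr, X_val, t_val)
```

Returning `best_w` rather than the final weights is what makes this "stopping at the point of smallest validation error" even though the loop runs a few steps past it.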

Interpreting the effect of Early Stopping

Consider quadratic error function

Axes in weight space are parallel to eigenvectors of Hessian

In absence of weight decay, weight vector starts at origin and proceeds to $\mathbf{w}_{ML}$

Stopping at $\tilde{\mathbf{w}}$ is similar to weight decay

$\tilde{E}(\mathbf{w}) = E(\mathbf{w}) + \frac{\lambda}{2}\mathbf{w}^{T}\mathbf{w}$

Figure: contour of constant error; $\mathbf{w}_{ML}$ represents minimum

Effective number of parameters in the network grows during course of training
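The similarity can be illustrated numerically for a quadratic error with a diagonal Hessian. In this sketch the eigenvalues, step size and number of steps are assumed values, and the weight decay coefficient is set to the reciprocal of (step size × number of steps) for comparison.

```python
import numpy as np

eigvals = np.array([10.0, 0.1])   # Hessian eigenvalues along the two weight axes (assumed)
w_ml = np.array([1.0, 1.0])       # minimum of the unregularized quadratic error (assumed)
eta, steps = 0.01, 50             # learning rate and number of gradient steps (assumed)

# Gradient descent from the origin on E(w) = 1/2 * sum_j eigvals_j * (w_j - w_ml_j)^2
w = np.zeros(2)
for _ in range(steps):
    w -= eta * eigvals * (w - w_ml)

# Closed form of the same iterate: each component relaxes geometrically toward w_ml
w_closed = (1.0 - (1.0 - eta * eigvals) ** steps) * w_ml

# Minimizer with weight decay, using a coefficient equal to 1 / (eta * steps)
lam = 1.0 / (eta * steps)
w_decay = eigvals / (eigvals + lam) * w_ml
```

In both cases the component along the large-eigenvalue direction is close to its maximum likelihood value, while the component along the small-eigenvalue direction stays near zero, so fewer directions are effectively in use early in training.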

Invariances

Quite often in classification problems there is a need for invariance:

Predictions should be invariant under one or more transformations of the input variables

Example: handwritten digit should be assigned same classification irrespective of position in the image (translation) and size (scale)

Such transformations produce significant changes in raw data, yet need to produce same output from classifier

Examples of raw data: pixel intensities in an image

Similarly in speech recognition: nonlinear time warping along time axis

Simple Approach for Invariance

Large sample set where all transformations are present

E.g., for translation invariance, examples of objects in many different positions

Impractical

Number of different samples grows exponentially with number of transformations

Seek alternative approaches for adaptive model to exhibit required invariances

Approaches to Invariance

1. Training set augmented by transforming training patterns according to desired invariances

E.g., shift each image into different positions

2. Add regularization term to error function that penalizes changes in model output when input is transformed.

Leads to tangent propagation.

3. Invariance built into pre-processing by extracting features invariant to required transformations

4. Build invariance property into structure of neural network (convolutional networks)

Local receptive fields and shared weights

Approach 1: Transform each input

Synthetically warp each handwritten digit image before presentation to model

Easy to implement but computationally costly

Figure: original image and warped images

Random displacements $\Delta x, \Delta y \in (0,1)$ at each pixel, then smoothed by convolutions of width 0.01, 30 and 60 respectively
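As a simpler instance of augmenting the training set than the smoothed random warps, the sketch below generates translated copies of an image. Pure translation, zero filling at the borders and the function name are all assumptions made for illustration.

```python
import numpy as np

def shifted_copies(image, max_shift=1):
    """Return the image translated by every (dy, dx) with |dy|, |dx| <= max_shift, zero-filled."""
    H, W = image.shape
    padded = np.pad(image, max_shift)          # zero border so content can move without wrapping
    out = []
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            # Cropping the padded image at an offset moves the content by (dy, dx)
            top, left = max_shift - dy, max_shift - dx
            out.append(padded[top:top + H, left:left + W])
    return np.stack(out)

# Toy 5x5 "image" with a single bright pixel in the centre
image = np.zeros((5, 5))
image[2, 2] = 1.0

copies = shifted_copies(image, max_shift=1)    # 9 translated versions, including the original
```

Each copy keeps the label of the original pattern, so the training set grows by a factor of nine here; that growth is the computational cost noted above.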

Approach 2: Tangent Propagation

Regularization can be used to encourage models to be invariant to transformations by the technique of tangent propagation

Continuous transformation of input vector $\mathbf{x}_n$ sweeps a manifold M in D-dimensional input space

Figure: two-dimensional input space showing effect of continuous transformation with single parameter $\xi$

Vector resulting from transformation is $\mathbf{s}(\mathbf{x}, \xi)$ so that $\mathbf{s}(\mathbf{x}, 0) = \mathbf{x}$

Tangent to curve M is given by

$\boldsymbol{\tau}_n = \left.\frac{\partial \mathbf{s}(\mathbf{x}_n, \xi)}{\partial \xi}\right|_{\xi = 0}$

Tangent Propagation as Regularization

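Under transformation of input vector, output vector will change

Derivative of output k with respect to $\xi$ is given by

$\left.\frac{\partial y_k}{\partial \xi}\right|_{\xi = 0} = \sum_{i=1}^{D} \left.\frac{\partial y_k}{\partial x_i}\frac{\partial x_i}{\partial \xi}\right|_{\xi = 0} = \sum_{i=1}^{D} J_{ki}\tau_i$

where $J_{ki}$ is the (k,i) element of the Jacobian matrix J

Result is used to modify the standard error function to encourage local invariance in neighborhood of data point

$\tilde{E} = E + \lambda\Omega$

where $\lambda$ is a regularization coefficient and

$\Omega = \frac{1}{2}\sum_n \sum_k \left(\left.\frac{\partial y_{nk}}{\partial \xi}\right|_{\xi = 0}\right)^2 = \frac{1}{2}\sum_n \sum_k \left(\sum_{i=1}^{D} J_{nki}\tau_{ni}\right)^2$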
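The regularizer can be computed directly for a small network. The sketch assumes a two-layer tanh network with random weights, two-dimensional inputs, and rotation about the origin as the transformation, for which the tangent vector of a point (x1, x2) is (-x2, x1). All names are illustrative.

```python
import numpy as np

def forward(x, W1, b1, W2, b2):
    """Two-layer network: tanh hidden units, linear outputs."""
    return W2 @ np.tanh(W1 @ x + b1) + b2

def jacobian(x, W1, b1, W2):
    """J[k, i] = dy_k / dx_i for the two-layer tanh network."""
    z = np.tanh(W1 @ x + b1)
    return W2 @ ((1.0 - z ** 2)[:, None] * W1)

def rotate(x, xi):
    """Transformation s(x, xi): rotate a 2-D point by angle xi, so s(x, 0) = x."""
    c, s = np.cos(xi), np.sin(xi)
    return np.array([c * x[0] - s * x[1], s * x[0] + c * x[1]])

def tangent_prop_penalty(X, tangents, W1, b1, W2):
    """Omega = 1/2 * sum_n sum_k (sum_i J_nki * tau_ni)^2."""
    total = 0.0
    for x, tau in zip(X, tangents):
        total += 0.5 * np.sum((jacobian(x, W1, b1, W2) @ tau) ** 2)
    return total

rng = np.random.default_rng(3)
W1, b1 = rng.normal(size=(5, 2)), rng.normal(size=5)
W2, b2 = rng.normal(size=(3, 5)), rng.normal(size=3)
X = rng.normal(size=(4, 2))                         # four illustrative data points

# Analytic tangent vectors of the rotation at xi = 0
tangents = np.stack([-X[:, 1], X[:, 0]], axis=1)
omega = tangent_prop_penalty(X, tangents, W1, b1, W2)
```

`omega` is zero only if every output is locally unchanged when each data point moves along its tangent direction, which is the local invariance the modified error function encourages.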

Tangent vector from finite differences

In practical implementation tangent vector $\boldsymbol{\tau}_n$ is approximated using finite differences, by subtracting original vector $\mathbf{x}_n$ from the corresponding vector after transformation using a small value of $\xi$ and then dividing by $\xi$

Tangent distance, used to build invariance properties with nearest-neighbor classifiers, is a related technique

Figure panels: original image $\mathbf{x}$; tangent vector $\boldsymbol{\tau}$ corresponding to small rotation; adding small contribution from tangent vector to image, $\mathbf{x} + \epsilon\boldsymbol{\tau}$; true image rotated for comparison
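The finite-difference recipe can be tried on a synthetic image. The sketch assumes an analytically defined Gaussian blob, so that rotation needs no interpolation; the blob, the image size and the angles are illustrative choices.

```python
import numpy as np

def blob_image(angle, size=16):
    """Off-centre Gaussian blob after rotating the image plane by `angle` radians about its centre."""
    c = (size - 1) / 2.0
    ys, xs = np.mgrid[0:size, 0:size] - c            # pixel coordinates relative to the centre
    # Rotate the sampling grid backwards so that the image content rotates forwards
    xr = np.cos(angle) * xs + np.sin(angle) * ys
    yr = -np.sin(angle) * xs + np.cos(angle) * ys
    return np.exp(-((xr - 3.0) ** 2 + yr ** 2) / 8.0)

x = blob_image(0.0)                                  # original image

# Tangent vector: transformed image minus original, divided by the small parameter value
xi = 1e-4
tau = (blob_image(xi) - x) / xi

# A small step along the tangent approximates a true small rotation
eps = 0.05
approx = x + eps * tau
exact = blob_image(eps)

change = np.abs(exact - x).max()                     # size of the actual change in the image
error = np.abs(approx - exact).max()                 # what the linear approximation misses
```

Comparing `error` with `change` shows how well the tangent approximation tracks the true rotation; the approximation degrades as `eps` grows.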

Equivalence of Approaches 1 and 2

Expanding the training set is closely related to tangent propagation

Small transformations of the original input vectors, together with a sum-of-squares error function, can be shown to be equivalent to the tangent propagation regularizer

Approach 4: Convolutional Networks

Creating a model invariant to transformation of inputs

Neural network structure:

Layer of convolutional units followed by a layer of sub-sampling

Instead of treating image as input to a fully connected network, local receptive fields are used
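A single feature map with shared weights, followed by sub-sampling, can be sketched as below. Averaging over 2x2 blocks is one assumed choice of sub-sampling, and the kernel is random rather than learned.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide one shared kernel over the image (cross-correlation, 'valid' region only)."""
    kh, kw = kernel.shape
    H, W = image.shape
    out = np.empty((H - kh + 1, W - kw + 1))
    for r in range(out.shape[0]):
        for c in range(out.shape[1]):
            # Each unit sees only a kh x kw local receptive field and reuses the same weights
            out[r, c] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out

def subsample(fmap, k=2):
    """Average non-overlapping k x k blocks of the feature map (assumed sub-sampling rule)."""
    H2, W2 = fmap.shape[0] // k, fmap.shape[1] // k
    return fmap[:H2 * k, :W2 * k].reshape(H2, k, W2, k).mean(axis=(1, 3))

rng = np.random.default_rng(5)
kernel = rng.normal(size=(3, 3))                 # the shared weights of one feature map

image = np.zeros((10, 10))
image[3:5, 3:5] = 1.0                            # small pattern away from the borders
shifted = np.roll(image, (2, 2), axis=(0, 1))    # same pattern moved by 2 pixels each way

feat, feat_shifted = conv2d_valid(image, kernel), conv2d_valid(shifted, kernel)
pooled, pooled_shifted = subsample(feat), subsample(feat_shifted)
```

Because every unit applies the same kernel, moving the pattern in the input moves the response in the feature map by the same amount, and the sub-sampled map moves by half as much. Later layers therefore see the same features wherever the pattern appears.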

Soft weight sharing

Reducing the complexity of a network

Hard weight sharing (constraining groups of weights to be equal) is only applicable when form of the network can be specified in advance

Soft weight sharing instead encourages groups of weights to have similar values

Division of weights into groups, mean weight value for each group and spread of values are determined during the learning process
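One common way to express this idea is a penalty equal to the negative log of a mixture of Gaussians over the weights. The sketch below evaluates that penalty with the mixture parameters held fixed, which is a simplification: in soft weight sharing they are adapted during learning. The names and values are illustrative.

```python
import numpy as np

def soft_sharing_penalty(w, mix, mu, sigma):
    """Omega(w) = -sum_i ln( sum_j mix_j * N(w_i | mu_j, sigma_j^2) )."""
    w = np.asarray(w, dtype=float)[:, None]                  # shape (n_weights, 1)
    # Gaussian density of every weight under every mixture component
    dens = np.exp(-0.5 * ((w - mu) / sigma) ** 2) / (np.sqrt(2.0 * np.pi) * sigma)
    return -np.sum(np.log(dens @ mix))

# Two groups centred at -1 and +1 (fixed here for illustration; learned in practice)
mix = np.array([0.5, 0.5])        # mixing coefficients
mu = np.array([-1.0, 1.0])        # group means
sigma = np.array([0.1, 0.1])      # group spreads

clustered = [-1.02, -0.98, 1.01, 0.99]    # weights close to the group means
scattered = [-2.0, -0.3, 0.4, 1.9]        # weights far from any group

penalty_clustered = soft_sharing_penalty(clustered, mix, mu, sigma)
penalty_scattered = soft_sharing_penalty(scattered, mix, mu, sigma)
```

Weights that sit near one of the group means incur a much smaller penalty than scattered weights, so minimizing the regularized error pulls weights toward a small number of shared values without forcing them to be exactly equal.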
