Subtitles 3

Uploaded by Edgar Yovera

So now we're getting into Bayesian land.

And we're finally going to start talking about the actual representations that are going to be the bread and butter of what we describe in this class. We're going to start by defining the basic semantics of a Bayesian network and how it's constructed from a set of factors.

So let's start by looking at a running example that will see us through a large part of at least the first part of this course. This is what we call the student example. In the student example we have a student who's taking a class for a grade, and we're going to use the first letter of the word to denote the name of the random variable, just like we did in previous examples. So here the random variable is going to be G. Now, the grade of the student obviously depends on how difficult the course is and on the intelligence of the student. So in addition to G, we also have D and I. And we're going to add a couple of extra random variables just to make things a little more interesting. We're going to assume that the student has taken the SAT, so he may or may not have scored well on the SAT. That's another random variable, S. And then finally (we have this case of the disappearing line) we also have the recommendation letter, L, that the student gets from the instructor of the class. Okay?

We're going to grossly oversimplify this problem by binarizing everything except for the grade. So everything has only two values, except for the grade, which has three. That's only so that I can write things compactly. It's not a limitation of the framework; it's just so that the probability distribution won't become unmanageable.

Okay, so now let's think about how we can construct the dependencies in this probability distribution. Let's start with the random variable grade.
I'm going to put it in the middle and ask what the grade of the student depends on. From a completely intuitive perspective, it seems clear that the grade depends on the difficulty of the course and on the intelligence of the student. So we already have a little baby Bayesian network with three random variables. Let's now take the other random variables and introduce them into the mix. For example, the SAT score of the student doesn't seem to depend on the difficulty of the course or on the grade the student gets in the course. The only thing it's likely to depend on, in the context of this model, is the intelligence of the student. And finally, caricaturing the way in which instructors write recommendation letters, we're going to assume that the quality of the letter depends only on the student's grade. The professor is teaching, you know, 600 students, or maybe 100,000 online students, and so the only thing one can say about the student comes from looking at their actual grade record. So, regardless of anything else, the quality of the letter depends only on the grade.

Now, this is a model of the dependencies. It's only one model that one can construct for them. I could easily imagine other models, for instance ones where brighter students take harder courses, in which case there might be an edge between I and D. But we're not going to use that model (so let's erase that), because we're going to stick with the simpler model for the time being. This is only to highlight the fact that a model is not set in stone. It's a representation of how we believe the world works.

So here is the model drawn out a little more nicely than the picture before. Now let's think about what we need to do in order to turn this into a representation of a probability distribution, because right now all it is is a bunch of nodes stuck together with edges. How do we actually get this to represent a probability distribution? The way we're going to do that is to annotate each of the nodes in the network with what's called a CPD. We previously defined CPDs. Just as a reminder, CPD stands for conditional probability distribution; I'm using the abbreviation here. Each of these is a CPD, so we have five nodes and five CPDs.
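The structure described so far can be written down before any numbers are attached. The following is only a minimal sketch: the dictionary name `PARENTS` and the helper `is_dag` are made up for illustration, and the acyclicity check relies on Python's standard `graphlib` module (Python 3.9 or later).

```python
from graphlib import TopologicalSorter, CycleError

# Parents of each node in the student network, as described in the lecture.
# The name PARENTS is a hypothetical choice for this sketch.
PARENTS = {
    "D": [],          # Difficulty: no parents
    "I": [],          # Intelligence: no parents
    "G": ["I", "D"],  # Grade depends on Intelligence and Difficulty
    "S": ["I"],       # SAT depends only on Intelligence
    "L": ["G"],       # Letter depends only on Grade
}


def is_dag(parents):
    """Return True if the parent map describes a graph with no directed cycle."""
    try:
        # static_order() raises CycleError if a cycle is present.
        list(TopologicalSorter(parents).static_order())
        return True
    except CycleError:
        return False


if __name__ == "__main__":
    print(is_dag(PARENTS))
    # One CPD per node: five nodes, hence five CPDs.
    print(len(PARENTS))
```

Adding the alternative edge mentioned above (brighter students taking harder courses) would amount to changing the entry for `"D"` to `["I"]`; the graph would still be acyclic.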
Now, if you look at some of these CPDs, they're kind of degenerate. For example, the difficulty CPD isn't actually conditioned on anything. It's just an unconditional probability distribution that tells us, for example, that courses are only 40% likely to be difficult and 60% likely to be easy. Here is a similar unconditional probability distribution for intelligence. This gets more interesting when you look at the actual conditional probability distributions. Here, for example, is a CPD that we've seen before: the CPD of the grades A, B, and C. This is the conditional probability distribution of grade given intelligence and difficulty. We've already discussed how each of these rows necessarily sums to one, because each row is a probability distribution over the variable grade. And we have two other CPDs here: the probability of SAT given intelligence, and the probability of letter given grade. So just to write this out completely, we have P(D), P(I), P(G | I, D), P(L | G), and P(S | I).

That is now a fully parameterized Bayesian network, and what we'll show next is how this Bayesian network produces a joint probability distribution over these five variables. Here are my CPDs, and what we're going to define now is the chain rule for Bayesian networks. The chain rule takes all these little CPDs and multiplies them together, like that: P(D, I, G, S, L) = P(D) P(I) P(G | I, D) P(S | I) P(L | G).

Before we think about what that means, let us first note that this is actually a factor product, in exactly the sense that we just defined. We have five factors with overlapping scopes, and what we end up with is a factor product that gives us one big factor whose scope is all five variables.

What does that translate into when we apply the chain rule for Bayesian networks in the context of a particular example? Let's look at this particular assignment. There's going to be a bunch of these different assignments, and I'm just going to compute the probability of this one: the probability of d0, i1, g3, s1, and l1. The first thing we need is the probability of d0, which is 0.6. The next thing, from the next factor, is the probability of i1, which is 0.3. What about the next one?
The next one is a conditional factor, and for that we need the probability of g3, because we have g3 here. So we want this column over here. And which row do we want? We want the row that corresponds to d0 and i1, which is this row, so 0.02. Continuing on: we now have g3, so we want this row, and we want the probability of l1, so we want this entry, 0.01. And finally, here we have the probability of s1 given i1, so that would be this one over here, 0.8. Multiplying these together, 0.6 × 0.3 × 0.02 × 0.01 × 0.8, gives the probability of this assignment, about 0.0000288. In exactly the same fashion, we're going to end up defining all of the entries in this joint probability distribution.

Great. So what does that give us as a definition? A Bayesian network is a directed acyclic graph. Acyclic means it has no cycles, that is, you can't traverse the edges and get back to where you started. This is typically abbreviated as a DAG, and we're going to use the letter G to denote directed acyclic graphs. The nodes of this directed acyclic graph represent the random variables that we're interested in reasoning about, X1 up to Xn. And for each node Xi in the graph, we have a CPD that denotes the dependence of Xi on its parents in the graph G. Okay? So this is a set of variables. For example, this would be like the probability of G given I and D: Xi would be G, and the parents of Xi would be I and D. And the BN, this Bayesian network, represents a joint probability distribution via the chain rule for Bayesian networks, which is the rule written over here. It's just a generalization of the rule we wrote on the previous slide, where we multiplied one CPD for each variable. Here again we're multiplying over all the variables Xi, taking the CPD of each Xi given its parents: P(X1, ..., Xn) is the product over i of P(Xi | parents of Xi in G). And this is once again a factor product.

That's great: I've just defined the joint probability distribution. Now, who's to say that this joint probability distribution is even a probability distribution? What do you have to show in order to demonstrate that something is a joint probability distribution? The first thing you have to show is that it is greater than or equal to zero, because probability distributions must be non-negative. For this distribution P, as it happens, that is quite trivial, because P is a product of factors.
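To make the chain rule and the worked assignment above concrete, here is a small sketch in Python. Only six numbers come from the lecture: P(d0) = 0.6, P(d1) = 0.4, P(i1) = 0.3, P(g3 | i1, d0) = 0.02, P(l1 | g3) = 0.01, and P(s1 | i1) = 0.8. Every other table entry below is an illustrative placeholder, chosen only so that each row sums to one; the table layout and the names `P_D`, `P_I`, `P_G`, `P_S`, `P_L`, and `joint` are likewise assumptions of this sketch.

```python
from itertools import product

# Unconditional CPDs. P(i0) = 0.7 follows from P(i1) = 0.3 for a binary variable.
P_D = {"d0": 0.6, "d1": 0.4}
P_I = {"i0": 0.7, "i1": 0.3}

# P(G | I, D), keyed by the parent assignment (i, d). Apart from
# P(g3 | i1, d0) = 0.02, these entries are placeholders, not lecture values.
P_G = {
    ("i0", "d0"): {"g1": 0.30, "g2": 0.40, "g3": 0.30},
    ("i0", "d1"): {"g1": 0.05, "g2": 0.25, "g3": 0.70},
    ("i1", "d0"): {"g1": 0.90, "g2": 0.08, "g3": 0.02},
    ("i1", "d1"): {"g1": 0.50, "g2": 0.30, "g3": 0.20},
}

# P(S | I): only P(s1 | i1) = 0.8 is quoted in the lecture.
P_S = {"i0": {"s0": 0.95, "s1": 0.05}, "i1": {"s0": 0.20, "s1": 0.80}}

# P(L | G): only P(l1 | g3) = 0.01 is quoted in the lecture.
P_L = {
    "g1": {"l0": 0.10, "l1": 0.90},
    "g2": {"l0": 0.40, "l1": 0.60},
    "g3": {"l0": 0.99, "l1": 0.01},
}


def joint(d, i, g, s, l):
    """Chain rule for the student network: multiply one CPD entry per variable."""
    return P_D[d] * P_I[i] * P_G[(i, d)][g] * P_S[i][s] * P_L[g][l]


if __name__ == "__main__":
    # The assignment worked through in the lecture: 0.6 * 0.3 * 0.02 * 0.8 * 0.01.
    print(joint("d0", "i1", "g3", "s1", "l1"))

    # Every entry is a product of non-negative numbers, hence non-negative.
    entries = [
        joint(d, i, g, s, l)
        for d, i, g, s, l in product(P_D, P_I, ["g1", "g2", "g3"], ["s0", "s1"], ["l0", "l1"])
    ]
    print(len(entries), min(entries) >= 0.0)
```

The worked entry depends only on the numbers quoted in the lecture, so it comes out at roughly 2.88e-05 whatever placeholders are used elsewhere. The full table has 2 × 2 × 3 × 2 × 2 = 48 entries.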
Specifically, it is a product of CPDs, and CPDs are non-negative. If you multiply a bunch of non-negative factors, you get a non-negative factor. That's fairly straightforward.

The next one is a little bit trickier. The other thing we have to show, in order to demonstrate that something is a legal distribution, is that it sums to one. So how do we prove that this probability distribution sums to one? Let's actually work through this. One of the reasons for working through it is not just to convince you that I'm not lying to you. More importantly, the techniques that we're going to use for this simple argument are going to accompany us throughout much of the first part of the course, so it's worth going through this in a little bit of detail. I'm going to show it in the context of the example distribution that we just saw, because otherwise we just end up cluttered with notation, but the idea is exactly the same.

So, we want to sum up, over all possible assignments, this hopefully-probability distribution that I just defined, P(D, I, G, S, L). Okay? We're going to break this up using the chain rule for Bayesian networks, because that's how we defined this distribution P.

Now here is the magic trick that we use whenever we deal with distributions like this. It's to notice that we have a summation over several random variables, and inside the summation we have a bunch of factors, each of which involves only a small subset of the variables. When you have a situation like that, you can push in the summations. Specifically, we're going to start by pushing in the summation over L. Which factors involve L? Only the last one. So we can move the summation over L to this position, ending up with the following expression. Notice that the summation is now over here. That's the first trick.

The second trick is the definition of CPDs. We observe that the summation over L sums over all possible mutually exclusive and exhaustive values of the variable L in its conditional probability distribution P(L | G). That means we're basically summing over a row of the CPD, and so the sum has to be one. This term effectively cancels out, which gives us this. And now we do exactly the same thing.
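The slide expressions are not part of the transcript, so the following is a reconstruction of the step just described, assuming the student-network factorization P(D) P(I) P(G | I, D) P(S | I) P(L | G). The sum over L is pushed inside, and the inner sum equals one because it runs over a row of the CPD of L.

```latex
\begin{align*}
\sum_{D,I,G,S,L} P(D,I,G,S,L)
  &= \sum_{D,I,G,S,L} P(D)\,P(I)\,P(G \mid I,D)\,P(S \mid I)\,P(L \mid G) \\
  &= \sum_{D,I,G,S} P(D)\,P(I)\,P(G \mid I,D)\,P(S \mid I)\,\sum_{L} P(L \mid G) \\
  &= \sum_{D,I,G,S} P(D)\,P(I)\,P(G \mid I,D)\,P(S \mid I)
\end{align*}
```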
We can take S and move it over here, and that gives us that. This too is a sum over a row of a CPD, so it too cancels and can be written as one. We can do the same with G, and again this has to be equal to one, so we can cancel it, and so on and so forth. By the end of this you're going to have canceled all of the variables in the summation, and what remains is one. Now, this is a very simple proof, but these tools of pushing in summations and removing variables that are not relevant are going to turn out to be very important.

Okay. So now there's just a final little bit of terminology that will, again, accompany us later on. We're going to say that a distribution P factorizes over G, meaning we can represent it over the graph G, if we can encode it using the chain rule for Bayesian networks. So a distribution factorizes over a graph G if I can represent it in this way, as a product of these conditional probabilities.

Let me conclude with a little example, which is arguably the very first example of Bayesian networks ever. It was an example of Bayesian networks before anybody even called them Bayesian networks. It was defined by statistical geneticists who were trying to model the notion of genetic inheritance in a population. So here is an example of genetic inheritance of blood type. Let me give just a little bit of background on the genetics. In this very oversimplified example, a person's genotype is defined by two values, because we have two copies of each chromosome. For each chromosome we have three possible values: the A value, the B value, or the O value. These are familiar to many of you from blood types, which is what we're modeling here. The total number of genotypes is listed over here: AA, AB, AO, BB, BO, and OO. So we have a total of six distinct genotypes. However, we have fewer phenotypes, because it turns out that AA and AO manifest in exactly the same way, as blood type A, and BO and BB manifest as blood type B. So we have only four phenotypes.

So how do we model genetic inheritance in a Bayesian network? Here is a little Bayesian network for genetic inheritance. We can see that for each individual, say Marge, we have a genotype variable, which takes on one of the six values we saw before, and the phenotype, in this case the blood type, depends only on the person's genotype. We also see that a person's genotype depends on the genotypes of her parents, because, after all, she inherits one chromosome from her mother and one chromosome from her father. So a person's genotype depends just on those two variables. This is a very nice and very compelling Bayesian network, because everything fits in beautifully: things really do depend only on the variables that we stipulate in the model that they depend on.
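The genotype and phenotype counts in the blood-type example can be checked with a few lines of Python. This is a sketch under stated assumptions: the names `ALLELES`, `GENOTYPES`, and `phenotype` are invented here, and the mappings of AB to blood type AB and of OO to blood type O are standard blood-type genetics that the lecture implies but does not spell out.

```python
from itertools import combinations_with_replacement

# Three possible values per chromosome copy, as in the lecture.
ALLELES = ("A", "B", "O")

# Unordered pairs of alleles: AA, AB, AO, BB, BO, OO.
GENOTYPES = ["".join(pair) for pair in combinations_with_replacement(ALLELES, 2)]


def phenotype(genotype):
    """Map a genotype to a blood type (a deterministic function of the genotype)."""
    alleles = set(genotype)
    if alleles == {"A", "B"}:
        return "AB"  # assumption: both A and B are expressed
    if "A" in alleles:
        return "A"   # AA and AO both show as type A
    if "B" in alleles:
        return "B"   # BB and BO both show as type B
    return "O"       # assumption: only OO shows as type O


if __name__ == "__main__":
    for g in GENOTYPES:
        print(g, "->", phenotype(g))
    print(len(GENOTYPES), len({phenotype(g) for g in GENOTYPES}))
```

Because the phenotype is a function of the genotype alone, its CPD in the network needs only the genotype as a parent, which matches the structure described above.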

Vous aimerez peut-être aussi

- Grit: The Power of Passion and PerseveranceD'EverandGrit: The Power of Passion and PerseveranceÉvaluation : 4 sur 5 étoiles4/5 (588)

- The Yellow House: A Memoir (2019 National Book Award Winner)D'EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Évaluation : 4 sur 5 étoiles4/5 (98)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeD'EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeÉvaluation : 4 sur 5 étoiles4/5 (5795)

- Never Split the Difference: Negotiating As If Your Life Depended On ItD'EverandNever Split the Difference: Negotiating As If Your Life Depended On ItÉvaluation : 4.5 sur 5 étoiles4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceD'EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceÉvaluation : 4 sur 5 étoiles4/5 (895)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersD'EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersÉvaluation : 4.5 sur 5 étoiles4.5/5 (345)

- Shoe Dog: A Memoir by the Creator of NikeD'EverandShoe Dog: A Memoir by the Creator of NikeÉvaluation : 4.5 sur 5 étoiles4.5/5 (537)

- The Little Book of Hygge: Danish Secrets to Happy LivingD'EverandThe Little Book of Hygge: Danish Secrets to Happy LivingÉvaluation : 3.5 sur 5 étoiles3.5/5 (400)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureD'EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureÉvaluation : 4.5 sur 5 étoiles4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryD'EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryÉvaluation : 3.5 sur 5 étoiles3.5/5 (231)

- On Fire: The (Burning) Case for a Green New DealD'EverandOn Fire: The (Burning) Case for a Green New DealÉvaluation : 4 sur 5 étoiles4/5 (74)

- The Emperor of All Maladies: A Biography of CancerD'EverandThe Emperor of All Maladies: A Biography of CancerÉvaluation : 4.5 sur 5 étoiles4.5/5 (271)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaD'EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaÉvaluation : 4.5 sur 5 étoiles4.5/5 (266)

- The Unwinding: An Inner History of the New AmericaD'EverandThe Unwinding: An Inner History of the New AmericaÉvaluation : 4 sur 5 étoiles4/5 (45)

- Team of Rivals: The Political Genius of Abraham LincolnD'EverandTeam of Rivals: The Political Genius of Abraham LincolnÉvaluation : 4.5 sur 5 étoiles4.5/5 (234)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyD'EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyÉvaluation : 3.5 sur 5 étoiles3.5/5 (2259)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreD'EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreÉvaluation : 4 sur 5 étoiles4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)D'EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Évaluation : 4.5 sur 5 étoiles4.5/5 (121)

- Her Body and Other Parties: StoriesD'EverandHer Body and Other Parties: StoriesÉvaluation : 4 sur 5 étoiles4/5 (821)

- 37 73 1 PBDocument7 pages37 73 1 PBAndrésSiepaPas encore d'évaluation

- Sheet Metal Forming - 2Document3 pagesSheet Metal Forming - 2HasanBadjriePas encore d'évaluation

- Unit V:: Design and Analysis of AlgorithmsDocument7 pagesUnit V:: Design and Analysis of AlgorithmsSairam N100% (1)

- Maron CalculusDocument453 pagesMaron CalculusArkadebSengupta100% (4)

- Y6 Autumn Block 1 WO6 Compare and Order Any Integers 2022Document2 pagesY6 Autumn Block 1 WO6 Compare and Order Any Integers 2022M alfyPas encore d'évaluation

- Father of GeometryDocument11 pagesFather of GeometrymohammedismayilPas encore d'évaluation

- Towards an ω-groupoid model of Type Theory: Based on joint work with Ondrej RypacekDocument33 pagesTowards an ω-groupoid model of Type Theory: Based on joint work with Ondrej RypacekΓιάννης ΣτεργίουPas encore d'évaluation

- Worksheet 1.1 CirclesDocument2 pagesWorksheet 1.1 CirclesJeypi CeronPas encore d'évaluation

- Inverse Z-TransformsDocument15 pagesInverse Z-TransformsShubham100% (1)

- GAMS - The Solver ManualsDocument556 pagesGAMS - The Solver ManualsArianna IsabellePas encore d'évaluation

- LM Business Math - Q1 W1-2 MELCS1-2-3 Module 1Document15 pagesLM Business Math - Q1 W1-2 MELCS1-2-3 Module 1MIRAFLOR ABREGANAPas encore d'évaluation

- Action Plan in Mathematics PDF FreeDocument3 pagesAction Plan in Mathematics PDF FreeMary June DemontevwrdePas encore d'évaluation

- Factor Analysis: (Dataset1)Document5 pagesFactor Analysis: (Dataset1)trisnokompPas encore d'évaluation

- Worksheet # 79: Using CPCTC With Triangle CongruenceDocument2 pagesWorksheet # 79: Using CPCTC With Triangle CongruenceMaria CortesPas encore d'évaluation

- Formal Languages, Automata and Computation: Slides For 15-453 Lecture 1 Fall 2015 1 / 25Document56 pagesFormal Languages, Automata and Computation: Slides For 15-453 Lecture 1 Fall 2015 1 / 25Wasim HezamPas encore d'évaluation

- DGDGDocument66 pagesDGDGmeenuPas encore d'évaluation

- Does Hegel Have Anything To Say To - Paterson PDFDocument15 pagesDoes Hegel Have Anything To Say To - Paterson PDFg_r_rossiPas encore d'évaluation

- Pathway Areas: School of Computer Science and EngineeringDocument31 pagesPathway Areas: School of Computer Science and EngineeringnighatPas encore d'évaluation

- ORHandout 2 The Simplex MethodDocument16 pagesORHandout 2 The Simplex MethodDave SorianoPas encore d'évaluation

- .3 Integration - Method of SubstitutionDocument16 pages.3 Integration - Method of Substitutionhariz syazwanPas encore d'évaluation

- 'S Melodien (Article)Document9 pages'S Melodien (Article)julianesque100% (1)

- Lifework Week1Document7 pagesLifework Week1Jordyn BlackshearPas encore d'évaluation

- Audio Processing in Matlab PDFDocument5 pagesAudio Processing in Matlab PDFAditish Dede EtnizPas encore d'évaluation

- Rubik's Cube Move Notations ExplanationDocument1 pageRubik's Cube Move Notations Explanationgert.vandeikstraatePas encore d'évaluation

- Assignment EKC 245 PDFDocument8 pagesAssignment EKC 245 PDFLyana SabrinaPas encore d'évaluation

- Mcq-Central Tendency-1113Document24 pagesMcq-Central Tendency-1113Jaganmohanrao MedaPas encore d'évaluation

- MCQ in Differential Calculus (Limits and Derivatives) Part 1 - Math Board ExamDocument13 pagesMCQ in Differential Calculus (Limits and Derivatives) Part 1 - Math Board ExamAF Xal RoyPas encore d'évaluation

- Module 5 Parent LetterDocument1 pageModule 5 Parent Letterapi-237110114Pas encore d'évaluation

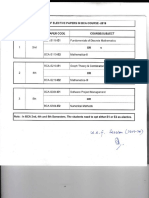

- N, 4athematics-Ll: Students To Opt Eilher orDocument4 pagesN, 4athematics-Ll: Students To Opt Eilher orDjsbs SinghPas encore d'évaluation

- M.Sc. (Maths) Part II Sem-III Assignments PDFDocument10 pagesM.Sc. (Maths) Part II Sem-III Assignments PDFShubham PhadtarePas encore d'évaluation