
Iterative Solution of Algebraic Riccati Equations for Damped Systems

Kirsten Morris and Carmeliza Navasca

Abstract: Algebraic Riccati equations (ARE) of large dimension arise when using approximations to design controllers for systems modelled by partial differential equations. We use a modified Newton method to solve the ARE. Since the modified Newton method leads to a right-hand side of rank equal to the number of inputs, regardless of the weights, the resulting Lyapunov equation can be solved more efficiently. A low-rank Cholesky-ADI algorithm is used to solve the Lyapunov equation resulting at each step. The algorithm is straightforward to code. Performance is illustrated with an example of a beam, with different levels of damping. Results indicate that for weakly damped problems a low rank solution to the ARE may not exist. Further analysis supports this point.

INTRODUCTION

A classical controller design objective is to find a control $u(t)$ so that the objective function

$$\int_0^\infty \langle x(t), Qx(t)\rangle + u^*(t) R u(t)\, dt \tag{1}$$

is minimized, where $R$ is a symmetric positive definite matrix and $Q$ is symmetric positive semi-definite. It is well known that the solution to this problem is found by solving an algebraic Riccati equation

$$A^* P + PA - PBR^{-1}B^* P = -Q \tag{2}$$

for a feedback operator

$$K = R^{-1}B^* P. \tag{3}$$

If the system is modelled by partial differential or delay differential equations then the state $x(t)$ lies in an infinite-dimensional space. The theoretical solution to this problem for many infinite-dimensional systems parallels the theory for finite-dimensional systems [11], [12], [18], [19]. In practice, the control is calculated through approximation. The matrices $A$, $B$, and $C$ arise in a finite-dimensional approximation of the infinite-dimensional system. Let $n$ indicate the order of the approximation, $m$ the number of control inputs and $p$ the number of observations. Thus, $A \in \mathbb{R}^{n\times n}$, $B \in \mathbb{R}^{n\times m}$ and $C \in \mathbb{R}^{p\times n}$. There have been many papers written describing conditions under which approximations lead to approximating controls that converge to the control for the original infinite-dimensional system [4], [12], [15], [18], [19]. In this paper we will assume that an approximation has been chosen so that a solution to the Riccati equation (2) exists for sufficiently large $n$ and also that the approximating feedback operators converge. Unfortunately, particularly in partial differential equation models with more than one space dimension, many infinite-dimensional control problems lead to Riccati equations of large order. A survey of currently used methods to solve large ARE can be found in [8]. Two common iterative methods are Chandrasekhar [3], [9] and Newton-Kleinman iterations [14], [17], [28]. Here we use a modification to the Newton-Kleinman method first proposed by Banks and Ito [3] as a refinement for a partial solution to the Chandrasekhar equation.

Solution of the Lyapunov equation is a key step in implementing either modified or standard Newton-Kleinman. The Lyapunov equations arising in the Newton-Kleinman method have several special features: (1) the model order $n$ is generally much larger than the number of controls $m$ or number of observations $p$ and (2) the matrices are often sparse. We use a recently developed method [20], [26] that uses these features, leading to an efficient algorithm. Use of this Lyapunov solver with a Newton-Kleinman method is described in [27], [6], [7], [8].

In the next section we describe the algorithm to solve the Lyapunov equations. We use this Lyapunov solver with both standard and modified Newton-Kleinman to solve a number of standard control examples, including one with several space variables. Our results indicate that modified Newton-Kleinman achieves considerable savings in computation time over standard Newton-Kleinman. We also found that using the solution from a lower-order approximation as an ansatz for a higher-order approximation significantly reduced the computation time.

I. DESCRIPTION OF ALGORITHM

One approach to solving large Riccati equations is the Newton-Kleinman method [17]. The Riccati equation (2) can be rewritten as

$$(A - BK)^* P + P(A - BK) = -Q - K^* R K. \tag{4}$$

We say a matrix $A_o$ is Hurwitz if $\sigma(A_o) \subset \mathbb{C}_-$.

Theorem 1.1: [17] Consider a stabilizable pair $(A, B)$ with a feedback $K_0$ so that $A - BK_0$ is Hurwitz. Define $S_i = A - BK_i$, and solve the Lyapunov equation

$$S_i^* P_i + P_i S_i = -Q - K_i^* R K_i \tag{5}$$

for $P_i$ and then update the feedback as $K_{i+1} = R^{-1}B^* P_i$. Then

$$\lim_{i\to\infty} P_i = P$$

with quadratic convergence.
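The iteration of Theorem 1.1 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: real matrices, a dense SciPy Lyapunov solver standing in for the low-rank solver used in the paper, and a small hypothetical test system (the function name `newton_kleinman` and the 2-state example are not from the paper).

```python
import numpy as np
from scipy.linalg import solve_continuous_lyapunov


def newton_kleinman(A, B, Q, R, K0, tol=1e-10, max_iter=50):
    """Standard Newton-Kleinman iteration (5) for real matrices.

    K0 must make A - B K0 Hurwitz. A dense Lyapunov solver is used
    here purely for illustration.
    """
    K = K0
    P_prev = None
    for i in range(1, max_iter + 1):
        S = A - B @ K
        rhs = -(Q + K.T @ R @ K)
        # solve_continuous_lyapunov(a, q) solves a X + X a^H = q,
        # so passing S^T gives S^T P + P S = rhs.
        P = solve_continuous_lyapunov(S.T, rhs)
        K = np.linalg.solve(R, B.T @ P)  # K_{i+1} = R^{-1} B^T P_i
        if P_prev is not None and (
            np.linalg.norm(P - P_prev) <= tol * np.linalg.norm(P)
        ):
            return P, K, i
        P_prev = P
    return P, K, max_iter


# Hypothetical lightly damped oscillator; stable, so K0 = 0 is admissible.
A = np.array([[0.0, 1.0], [-2.0, -0.5]])
B = np.array([[0.0], [1.0]])
Q = np.eye(2)
R = np.array([[1.0]])
K0 = np.zeros((1, 2))

P, K, iters = newton_kleinman(A, B, Q, R, K0)
residual = A.T @ P + P @ A - P @ B @ np.linalg.solve(R, B.T @ P) + Q
print("iterations:", iters, "ARE residual norm:", np.linalg.norm(residual))
```

The residual of (2) is printed rather than compared against a reference solver, so the check does not depend on any other Riccati routine.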

The key assumption in the above theorem is that an initial estimate, or ansatz, $K_0$, such that $A - BK_0$ is Hurwitz is available. For an arbitrary large Riccati equation, this condition may be difficult to satisfy. However, this condition is not restrictive for Riccati equations arising in control of infinite-dimensional systems. First, many of these systems are stable even when uncontrolled and so the initial iterate $K_0$ may be chosen as zero. Second, if the approximation procedure is valid then convergence of the feedback gains is obtained with increasing model order. Thus, a gain obtained from a lower order approximation, perhaps using a direct method, may be used as an ansatz for a higher order approximation. This technique was used successfully in [14], [24], [28].

A modification to this method was proposed by Banks and Ito [3]. In that paper, they partially solve the Chandrasekhar equations and then use the resulting feedback $K$ as a stabilizing initial guess. Instead of the standard Newton-Kleinman iteration (5) above, Banks and Ito rewrote the Riccati equation in the form

$$(A - BK_i)^* X_i + X_i (A - BK_i) = -D_i^* R D_i,$$

$$D_i = K_i - K_{i-1}, \qquad K_{i+1} = K_i - R^{-1}B^* X_i.$$

Here $X_i = P_{i-1} - P_i$ is the difference between successive iterates of (5), so the right-hand side has rank at most $m$.
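A minimal Python sketch of the modified iteration follows. It assumes real matrices, starts with one standard Newton-Kleinman step to obtain $K_1$ from $K_0$, and again uses a dense SciPy Lyapunov solver as a stand-in for the low-rank solver; the function name and the small test system are hypothetical.

```python
import numpy as np
from scipy.linalg import solve_continuous_lyapunov


def modified_newton_kleinman(A, B, Q, R, K0, tol=1e-10, max_iter=50):
    """Modified Newton-Kleinman: iterate on the gain K only.

    After one standard step, each Lyapunov right-hand side is
    -D^T R D with D = K_i - K_{i-1}, which has rank at most m.
    """
    # One standard step to obtain K_1 from the stabilizing K_0.
    S = A - B @ K0
    P0 = solve_continuous_lyapunov(S.T, -(Q + K0.T @ R @ K0))
    K_prev, K = K0, np.linalg.solve(R, B.T @ P0)
    for i in range(1, max_iter + 1):
        D = K - K_prev
        if np.linalg.norm(D) <= tol * max(1.0, np.linalg.norm(K)):
            return K, i
        S = A - B @ K
        # Solves S^T X + X S = -D^T R D.
        X = solve_continuous_lyapunov(S.T, -(D.T @ R @ D))
        K_prev, K = K, K - np.linalg.solve(R, B.T @ X)
    return K, max_iter


# Hypothetical stable test system, so K0 = 0 is admissible.
A = np.array([[0.0, 1.0], [-2.0, -0.5]])
B = np.array([[0.0], [1.0]])
Q = np.eye(2)
R = np.array([[1.0]])

K, steps = modified_newton_kleinman(A, B, Q, R, np.zeros((1, 2)))
print("gain:", K, "steps:", steps)
```

Only the gain is carried between steps; the Riccati solution itself is never assembled, which is the property the low-rank Lyapunov solver exploits.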

Solution of a Lyapunov equation is a key step in each iteration of the Newton-Kleinman method, both standard and modified. Consider the Lyapunov equation

$$X A_o + A_o^* X = -DD^* \tag{6}$$

where $A_o \in \mathbb{R}^{n\times n}$ is Hurwitz and $D \in \mathbb{R}^{n\times r}$. Factor $Q$ into $C^*C$ and let $n_Q$ indicate the rank of $C$. In the case of standard Newton-Kleinman, $r = m + n_Q$ while for modified Newton-Kleinman, $r = m$. If $A_o$ is Hurwitz, then the Lyapunov equation has a symmetric positive semidefinite solution $X$. As for the Riccati equation, direct methods such as Bartels-Stewart [5] are only appropriate for low model order and do not take advantage of sparsity in the matrices.

In [3] Smith's method was used to solve the Lyapunov equations. For $p < 0$, define $U = (A_o - pI)(A_o + pI)^{-1}$ and $V = -2p(A_o^* + pI)^{-1}DD^*(A_o + pI)^{-1}$. The Lyapunov equation (6) can be rewritten as

$$X = U^* X U + V.$$

In Smith's method [30], the solution $X$ is found by using successive substitutions: $X = \lim_{i\to\infty} X_i$ where

$$X_i = U^* X_{i-1} U + V \tag{7}$$

with $X_0 = 0$. Convergence of the iterations can be improved by careful choice of the parameter $p$, e.g. [29, pg. 297]. This method of successive substitution is unconditionally convergent, but has only linear convergence.
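The successive substitution (7) is short enough to write out directly. The sketch below assumes real matrices and dense NumPy arithmetic; the function name `smith`, the shift `p = -2` and the 2-by-2 test matrix are illustrative choices, not values from the paper.

```python
import numpy as np


def smith(A_o, D, p, tol=1e-12, max_iter=10_000):
    """Smith iteration X_i = U^T X_{i-1} U + V for X A_o + A_o^T X = -D D^T.

    A_o must be Hurwitz and p < 0 (real case only).
    """
    n = A_o.shape[0]
    I = np.eye(n)
    inv = np.linalg.inv(A_o + p * I)      # (A_o + pI)^{-1}
    U = (A_o - p * I) @ inv
    V = -2.0 * p * inv.T @ D @ D.T @ inv  # inv.T = (A_o^T + pI)^{-1}
    X = np.zeros((n, n))
    for i in range(1, max_iter + 1):
        X_new = U.T @ X @ U + V
        if np.linalg.norm(X_new - X) <= tol * np.linalg.norm(X_new):
            return X_new, i
        X = X_new
    return X, max_iter


# Hypothetical Hurwitz matrix with eigenvalues -1 and -3.
A_o = np.array([[-1.0, 2.0], [0.0, -3.0]])
D = np.array([[1.0], [1.0]])

X, its = smith(A_o, D, p=-2.0)
lyap_residual = X @ A_o + A_o.T @ X + D @ D.T
print("iterations:", its, "residual norm:", np.linalg.norm(lyap_residual))
```

With a single fixed shift the error contracts by the same factor at every step, which is the linear convergence noted above.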

The ADI method [21], [32] improves Smith's method by using a different parameter $p_i$ at each step. Two alternating linear systems,

$$(A_o^* + p_i I) X_{i-\frac12} = -DD^* - X_{i-1}(A_o - p_i I)$$

$$(A_o^* + p_i I) X_i^* = -DD^* - X_{i-\frac12}^*(A_o - p_i I)$$

are solved recursively starting with $X_0 = 0 \in \mathbb{R}^{n\times n}$ and parameters $p_i < 0$. If all parameters $p_i = p$ then the above equations reduce to Smith's method. If the ADI parameters $p_i$ are chosen appropriately, then convergence is obtained in $J$ iterations where $J \ll n$. The parameter selection problem has been studied extensively [10], [21], [31]. For symmetric $A$ the optimal parameters can be easily calculated. A heuristic procedure to calculate the parameters in the general case is in [26]. If the spectrum of $A$ is complex, we estimated the complex ADI parameters as in [10]. As the spectrum of $A$ flattens to the real axis the ADI parameters are closer to optimal.

TABLE I

COMPLEXITY OF CF-ADI AND ADI [20]

| | CF-ADI | ADI |
|---|---|---|
| Sparse $A$ | $O(Jrn)$ | $O(Jrn^2)$ |
| Full $A$ | $O(Jrn^2)$ | $O(Jrn^3)$ |

TABLE II

CHOLESKY-ADI METHOD

Given $A_o$ and $D$

Choose ADI parameters $\{p_1, \ldots, p_J\}$ with $\Re(p_i) < 0$

Define $z_1 = \sqrt{-2p_1}\,(A_o^* + p_1 I)^{-1} D$ and $Z_1 = [z_1]$

For $i = 2, \ldots, J$

Define $W_i = \dfrac{\sqrt{-2p_i}}{\sqrt{-2p_{i-1}}}\,[I - (p_i + p_{i-1})(A_o^* + p_i I)^{-1}]$

(1) $z_i = W_i z_{i-1}$

(2) If $\|z_i\| > tol$, $Z_i = [Z_{i-1}\ z_i]$; else, stop.

If $A_o$ is sparse, then sparse arithmetic can be used in calculation of $X_i$. However, full calculation of the dense iterates $X_i$ is required at each step. By setting $X_0 = 0$, it can be easily shown that $X_i$ is symmetric and positive semidefinite for all $i$, and so we can write $X = ZZ^*$ where $Z$ is a Cholesky factor of $X$ [20], [26]. (A Cholesky factor does not need to be square or be lower triangular.) Substituting $Z_i Z_i^*$ for $X_i$, setting $X_0 = 0$, and defining $M_i = (A_o^* + p_i I)^{-1}$ we obtain the following iterates

$$Z_1 = \sqrt{-2p_1}\, M_1 D$$

$$Z_i = [\sqrt{-2p_i}\, M_i D,\ \ M_i (A_o^* - p_i I) Z_{i-1}].$$

Note that $Z_1 \in \mathbb{R}^{n\times r}$, $Z_2 \in \mathbb{R}^{n\times 2r}$, and $Z_i \in \mathbb{R}^{n\times ir}$.

The algorithm is stopped when the Cholesky iterations converge within some tolerance. In [20] these iterates are reformulated in a more efficient form, using the observation that the order in which the ADI parameters are used is irrelevant. This leads to the algorithm shown in Table II.

This solution method results in considerable savings in computation time and memory. In calculation of the feedback $K$, the full solution $X$ never needs to be constructed. Also, the complexity of this method (CF-ADI) is considerably less than that of standard ADI, as shown in Table I. Recall that for standard Newton-Kleinman, $r$ is the sum of the rank of the state weight $n_Q$ and the number of controls $m$. For modified Newton-Kleinman $r$ is equal to $m$. The reduction in complexity of the CF-ADI method over ADI is marked for the modified Newton-Kleinman method.
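The Cholesky-factor recursion can be sketched as follows, assuming real negative shifts and dense NumPy solves (a sparse factorization would replace `np.linalg.solve` in practice). The function name `cf_adi` and the test problem are hypothetical. For the demonstration the shifts are simply taken to be the eigenvalues of a small symmetric $A_o$, an illustrative choice that makes ADI exact after $n$ steps; it is not the parameter heuristic of [26] or [10].

```python
import numpy as np


def cf_adi(A_o, D, shifts, tol=1e-12):
    """Low-rank Cholesky-factor ADI for X A_o + A_o^T X = -D D^T.

    Returns Z with X ~= Z Z^T. Real negative shifts only.
    """
    n = A_o.shape[0]
    I = np.eye(n)
    p = shifts[0]
    z = np.sqrt(-2.0 * p) * np.linalg.solve(A_o.T + p * I, D)
    blocks = [z]
    for p_prev, p in zip(shifts[:-1], shifts[1:]):
        # z_i = sqrt(p_i / p_{i-1}) [I - (p_i + p_{i-1}) (A_o^T + p_i I)^{-1}] z_{i-1}
        z = np.sqrt(p / p_prev) * (
            z - (p + p_prev) * np.linalg.solve(A_o.T + p * I, z)
        )
        if np.linalg.norm(z) <= tol:
            break
        blocks.append(z)
    return np.hstack(blocks)


# Hypothetical symmetric negative definite A_o (1-D Laplacian stencil).
n = 6
A_o = -2.0 * np.eye(n) + np.eye(n, k=1) + np.eye(n, k=-1)
D = np.zeros((n, 1))
D[0, 0] = 1.0
shifts = np.linalg.eigvalsh(A_o)  # illustrative shifts: the eigenvalues of A_o

Z = cf_adi(A_o, D, shifts)
X = Z @ Z.T  # formed here only to check the residual
adi_residual = X @ A_o + A_o.T @ X + D @ D.T
print("rank of factor:", Z.shape[1], "residual norm:", np.linalg.norm(adi_residual))

# The feedback gain can be formed from the factor without building X.
B = np.ones((n, 1))
R = np.array([[1.0]])
K = np.linalg.solve(R, (B.T @ Z) @ Z.T)
```

Grouping the product as `(B.T @ Z) @ Z.T` keeps every intermediate of size at most $n \times ir$, which is how the gain is obtained without ever storing the dense $X$.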

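TABLE III

TABLE OF PHYSICAL PARAMETERS

| Parameter | Value |
|---|---|
| $E$ | $2.68 \times 10^{10}$ N/m$^2$ |
| $I_b$ | $1.64 \times 10^{-9}$ m$^4$ |
| $\rho$ | $1.02087$ kg/m |
| $C_v$ | $2$ Ns/m$^2$ |
| $C_d$ | $2.5 \times 10^{8}$ Ns/m$^2$ |
| $L$ | $1$ m |
| $I_h$ | $121.9748$ kg m$^2$ |
| $d$ | $.041$ kg$^{-1}$ |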

II. CONTROL OF EULER-BERNOULLI BEAM

In this section, we consider an Euler-Bernoulli beam clamped at one end ($r = 0$) and free to vibrate at the other end ($r = 1$). Let $w(r, t)$ denote the deflection of the beam from its rigid body motion at time $t$ and position $r$. The deflection is controlled by applying a torque $u(t)$ at the clamped end ($r = 0$). We assume that the hub inertia $I_h$ is much larger than the beam inertia $I_b$ so that $I_h \ddot{\theta} \approx u(t)$, with $\theta$ the hub rotation angle. The partial differential equation model with Kelvin-Voigt and viscous damping is

$$\rho w_{tt}(r, t) + C_v w_t(r, t) + \frac{\partial^2}{\partial r^2}\left[C_d I_b w_{rrt}(r, t) + EI_b w_{rr}(r, t)\right] = \frac{\rho r}{I_h} u(t),$$

with boundary conditions

$$w(0, t) = 0,$$

$$w_r(0, t) = 0,$$

$$EI_b w_{rr}(1, t) + C_d I_b w_{rrt}(1, t) = 0,$$

$$\frac{\partial}{\partial r}\left[EI_b w_{rr}(r, t) + C_d I_b w_{rrt}(r, t)\right]_{r=1} = 0.$$

The values of the physical parameters in Table III are as in [2].

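Let

$$H = \left\{ w \in H^2(0, 1) : w(0) = \frac{dw}{dr}(0) = 0 \right\}$$

be the closed linear subspace of the Sobolev space $H^2(0, 1)$ and define the state-space to be $X = H \times L^2(0, 1)$ with state $x(t) = (w(\cdot, t), \frac{\partial}{\partial t} w(\cdot, t))$. A state-space formulation of the above partial differential equation problem is

$$\frac{d}{dt} x(t) = Ax(t) + Bu(t),$$

where

$$A = \begin{bmatrix} 0 & I \\ -\dfrac{EI_b}{\rho}\dfrac{d^4}{dr^4} & -\dfrac{C_d I_b}{\rho}\dfrac{d^4}{dr^4} - \dfrac{C_v}{\rho} \end{bmatrix}$$

and

$$B = \begin{bmatrix} 0 \\ \dfrac{r}{I_h} \end{bmatrix}$$

with domain

$$\mathrm{dom}(A) = \left\{ (\phi, \psi) \in X : \psi \in H,\ M(L) = \frac{d}{dr} M(L) = 0 \right\}$$

where $M = EI_b \frac{d^2}{dr^2}\phi + C_d I_b \frac{d^2}{dr^2}\psi \in H^2(0, 1)$.

We use $R = 1$ and define $C$ by the tip position:

$$w(1, t) = C\,[w(r, t)\ \ \dot{w}(r, t)]^T.$$

Then $Q = C^* C$. We also solved the control problem with $Q = I$.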

Let $H^{2N} \subset H$ be a sequence of finite-dimensional subspaces spanned by the standard cubic B-splines with a uniform partition of $[0, 1]$ into $N$ subintervals. This yields an approximation in $H^{2N} \times H^{2N}$ (see, e.g., [16]) of dimension $n = 4N$. This approximation method yields a sequence of solutions to the algebraic Riccati equation that converge strongly to the solution to the infinite-dimensional Riccati equation corresponding to the original partial differential equation description [4], [22].

III. LOW RANK APPROXIMATIONS TO LYAPUNOV FUNCTION

Tables IV and V show the effect of varying $C_d$ on the number of iterations required for convergence. Larger values of $C_d$ (i.e. smaller values of $\alpha$) lead to a decreasing number of iterations. Small values of $C_d$ lead to a large number of required iterations in each solution of a Lyapunov equation.

Recall that the CF-ADI algorithm used here starts with a rank 1 initial estimate of the Cholesky factor and the rank of the solution is increased at each step. The efficiency of the Cholesky-ADI method relies on the existence of a low-rank approximation of the solution $X$ to the Lyapunov equation. This is true of many other iterative algorithms to solve large Lyapunov equations.

Theorem 3.1: For any symmetric, positive semi-definite matrix $X$ of rank $n$ let $\mu_1 \ge \mu_2 \ge \ldots \ge \mu_n$ be its eigenvalues. For any rank $k < n$ matrix $X_k$,

$$\frac{\|X - X_k\|_2}{\|X\|_2} \ge \frac{\mu_{k+1}}{\mu_1}.$$

Proof: See, for example, [13, Thm. 2.5.3].
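The bound in Theorem 3.1 is attained by truncating the eigendecomposition of $X$, which can be checked numerically. The sketch below is an illustration only: the helper name `best_rank_k` and the random test matrix are assumptions, not part of the paper.

```python
import numpy as np


def best_rank_k(X, k):
    """Truncated eigendecomposition of a symmetric PSD matrix X.

    Returns the rank-k approximation and the eigenvalues of X
    sorted in decreasing order.
    """
    mu, V = np.linalg.eigh(X)  # eigh returns ascending eigenvalues
    order = np.argsort(mu)[::-1]
    mu, V = mu[order], V[:, order]
    X_k = (V[:, :k] * mu[:k]) @ V[:, :k].T
    return X_k, mu


# Hypothetical symmetric positive definite test matrix.
rng = np.random.default_rng(0)
G = rng.standard_normal((6, 6))
X = G @ G.T

k = 2
X_k, mu = best_rank_k(X, k)
rel_err = np.linalg.norm(X - X_k, 2) / np.linalg.norm(X, 2)
bound = mu[k] / mu[0]  # mu_{k+1} / mu_1 with zero-based indexing
print("relative error:", rel_err, "lower bound:", bound)
```

Any other rank-$k$ matrix gives a relative error at least as large, so a slowly decaying ratio rules out an accurate low-rank factor no matter which solver produces it.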

Thus, the relative size of the largest and smallest eigen-

values determines the existence of a low rank approximation

X

k

that is close to X, regardless of how this approximation

is obtained.

The ratio

k+1

/

1

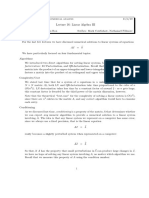

is plotted for several values of C

d

in

Figure 1. For larger values of C

d

the solution X is closer to

a low rank matrix than it is for smaller values of C

d

.

A bound on the rank of the solution to a Lyapunov equation where $A$ is symmetric is given in [25]. A tighter bound on the error in a low-rank approximation has been obtained [1] in terms of the eigenvalues and eigenvectors of $A$. This bound is applicable to all diagonalizable matrices $A$. The bound for the case where the right-hand-side $D$ has rank one is as follows.

Theorem 3.2: [1, Thm 3.1] Let $V$ be the matrix of right eigenvectors of $A$, and denote its condition number by $\kappa(V)$. Denote the eigenvalues of $A$ by $\lambda_1, \ldots, \lambda_n$. There is a rank $k < n$ approximation to $X$ satisfying

$$\|X - X_k\|_2 \le (n - k)^2\, \delta_{k+1}\, (\kappa(V)\|D\|_2)^2 \tag{8}$$

where

$$\delta_{k+1} = \frac{-1}{2\,\mathrm{Real}(\lambda_{k+1})} \prod_{j=1}^{k} \left| \frac{\lambda_{k+1} - \lambda_j}{\lambda_{k+1}^* + \lambda_j} \right|^2.$$

The eigenvalues $\lambda_i$ are ordered so that $\delta_i$ are decreasing.

In order to calculate this bound, all the eigenvalues and eigenvectors of $A$ are needed.

If the condition number $\kappa(V)$ is not too large, for instance if $A$ is normal, then $\delta_{k+1}/\delta_1$ gives an estimate of the relative error $\mu_{k+1}/\mu_1$ in the approximation $X_k$. This estimate is observed in [1] to estimate the actual decay rate of the eigenvalues of $X$ quite well, even in cases where the decay is very slow.

Consider a parabolic partial differential equation, where the A-matrix of the approximation is symmetric and negative definite. Then all the eigenvalues are real. A routine calculation yields that

k+1

k

and so the rate of growth of the eigenvalues determines the accuracy of the low rank approximation. The accuracy of the low-rank approximant can be quite different if $A$ is non-symmetric. The solution $X$ to the Lyapunov equation could be, for instance, the identity matrix [1], [25] in which case the eigenvalues of $X$ do not decay.

It is observed through several numerical examples in [1] that it is not just complex eigenvalues that cause the decay rate to slow down, but the dominance of the imaginary parts of the eigenvalues as compared to the real parts, together with absence of clustering in the spectrum of $A$. This effect was observed in the beam equation. Figure 2 shows the change in the spectrum of $A$ as $C_d$ is varied. Essentially, increasing $C_d$ increases the angle that the spectrum makes with the imaginary axis. Note that the eigenvalues do not cluster. As the damping is decreased, the dominance of the imaginary parts of the eigenvalues of $A$ increases and the decay rate of the eigenvalues of $X$ slows. The Cholesky-ADI calculations for the beam with $C_d = 0$ did not terminate on a solution $X_k$ with $k < n$.

TABLE IV

BEAM: EFFECT OF CHANGING $C_d$ (STANDARD NEWTON-KLEINMAN)

| $C_v$ | $C_d$ | $\alpha$ | N.-K. Its | Lyapunov Itns | CPU time |
|---|---|---|---|---|---|
| 2 | 1E04 | 1.5699 | | | |
| | 3E05 | 1.5661 | | | |
| | 4E05 | 1.5654 | 3 | 1620;1620;1620 | 63.14 |
| | 1E07 | 1.5370 | 3 | 1316;1316;1316 | 42.91 |
| | 1E08 | 1.4852 | 3 | 744;744;744 | 18.01 |
| | 5E08 | 1.3102 | 3 | 301;301;301 | 5.32 |

TABLE V

BEAM: EFFECT OF CHANGING $C_d$ (MODIFIED NEWTON-KLEINMAN)

| $C_v$ | $C_d$ | $\alpha$ | N.-K. Its | Lyapunov Its | CPU time |
|---|---|---|---|---|---|
| 2 | 1E04 | 1.5699 | 2 | | |
| | 3E05 | 1.5661 | 2 | | |
| | 4E05 | 1.5654 | 2 | 1620;1 | 24.83 |
| | 1E07 | 1.5370 | 2 | 1316;1 | 16.79 |
| | 1E08 | 1.4852 | 2 | 744;1 | 7.49 |
| | 5E08 | 1.3102 | 2 | 301;1 | 2.32 |

These observations are supported by results in the theory of control of partial differential equations. If $C_d > 0$ the semigroup for the original partial differential equation is parabolic and the solution to the Riccati equation converges uniformly in operator norm [18, chap. 4]. However, if $C_d = 0$, the partial differential equation is hyperbolic and only strong convergence of the solution is obtained [19]. Thus, one might expect a greater number of iterations in the Lyapunov loop to be required as $C_d$ is decreased. Consider the bound (8) for the beam with $C_d = 0$, and an eigenfunction basis for the approximation so that the eigenvalues of $A$ are the exact eigenvalues. Defining $c_v = C_v/\rho$, $c^2 = EI_b/\rho$ and indicating the roots of $1 + \cos(a)\cosh(a)$ by $a_k$, the eigenvalues are

$$\lambda_k = -c_v/2 \pm i\omega_k$$

where

$$\omega_k = \sqrt{c^2 a_k^4 - c_v^2/4}$$

[23]. A simple calculation yields that for all $k$,

$$\frac{\delta_k}{\delta_1} = \prod_{j=1}^{k-1} \frac{1}{1 + \dfrac{4c_v^2}{(\omega_k - \omega_j)^2}} \approx 1.$$

This indicates that the eigenvalues of the Lyapunov solution do not decay.
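The size of this product can be checked numerically for the undamped beam ($C_d = 0$). The sketch below is a rough illustration under stated assumptions: the values of $E$, $I_b$, $\rho$ and $C_v$ are those read from the table of physical parameters, the frequencies are taken as $\omega_k = \sqrt{c^2 a_k^4 - c_v^2/4}$, the product is evaluated exactly as written above, and the helper `clamped_free_roots` is a hypothetical bisection routine.

```python
import numpy as np


def clamped_free_roots(count, iters=60):
    """Roots a_k of 1 + cos(a) cosh(a) = 0, one per interval ((k-1)pi, k pi)."""

    def g(a):
        # Same roots as 1 + cos(a) cosh(a), but bounded for large a.
        return 1.0 / np.cosh(a) + np.cos(a)

    roots = []
    for k in range(1, count + 1):
        lo, hi = (k - 1) * np.pi, k * np.pi
        for _ in range(iters):  # plain bisection
            mid = 0.5 * (lo + hi)
            if g(lo) * g(mid) <= 0.0:
                hi = mid
            else:
                lo = mid
        roots.append(0.5 * (lo + hi))
    return np.array(roots)


# Assumed parameter values (as read from the physical parameter table).
E, I_b, rho, C_v = 2.68e10, 1.64e-9, 1.02087, 2.0
c2 = E * I_b / rho  # c^2 = E I_b / rho
c_v = C_v / rho

a = clamped_free_roots(10)
omega = np.sqrt(c2 * a**4 - c_v**2 / 4.0)

# delta_k / delta_1 for k = 1..10; the empty product for k = 1 equals 1.
ratios = np.array([
    np.prod(1.0 / (1.0 + 4.0 * c_v**2 / (omega[k] - omega[:k]) ** 2))
    for k in range(len(omega))
])
print(ratios)
```

Because the gaps between successive frequencies are large compared with $c_v$, every factor in the product is close to one, so the computed ratios stay near one instead of decaying.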

IV. CONCLUSIONS

The results indicate a problem with solving the algebraic

Riccati equation for systems with light damping where the

eigenvalues are not decaying rapidly. Although better choice

of the ADI parameters might help convergence, the fact

that the low rank approximations to the solution converge

very slowly when the damping is small limits the achievable

improvement. This may have consequences for control of

coupled acoustic-structure problems where the spectra are

closer to those of hyperbolic systems than those of parabolic

systems.

[Figure 1: eigenvalue ratio for N=96 with varying $C_d$ ($5\times10^8$, $1\times10^8$, $1\times10^7$), plotted on a logarithmic scale against $k$.]

Fig. 1. Eigenvalue ratio for solution to beam ARE for varying $C_d$

[Figure 2: spectrum of $A$ for N=96 with varying $C_d$ ($5\times10^8$, $1\times10^8$, $1\times10^7$); axes: Real, Imaginary.]

Fig. 2. Beam: Spectrum of $A$ for varying $C_d$

[Figure 3: beam spectrum for N=96 with $C_d = 1\times10^8$, showing $A$, $A - BK_1$ and $A - BK$; axes: Real, Imaginary.]

Fig. 3. Beam: Spectrum of uncontrolled and controlled systems

REFERENCES

[1] A.C. Antoulas, D.C. Sorensen, Y. Zhou, "On the decay rate of Hankel singular values and related issues", Systems and Control Letters, vol. 46, pg. 323-342, 2002.

[2] H.T. Banks and D.J. Inman, "On Damping Mechanisms in Beams", ICASE Report No. 89-64, NASA, Langley, 1989.

[3] H.T. Banks and K. Ito, "A Numerical Algorithm for Optimal Feedback Gains in High Dimensional Linear Quadratic Regulator Problems", SIAM J. Control Optim., vol. 29, no. 3, pg. 499-515, 1991.

[4] H.T. Banks and K. Kunisch, "The linear regulator problem for parabolic systems", SIAM J. Control Optim., vol. 22, no. 5, pg. 684-698, 1984.

[5] R.H. Bartels and G.W. Stewart, "Solution of the matrix equation AX+XB=C", Comm. of ACM, vol. 15, pg. 820-826, 1972.

[6] P. Benner, "Efficient Algorithms for Large-Scale Quadratic Matrix Equations", Proc. in Applied Mathematics and Mechanics, vol. 1, no. 1, pg. 492-495, 2002.

[7] P. Benner and H. Mena, "BDF Methods for Large-Scale Differential Riccati Equations", Proc. of the 16th International Symposium on Mathematical Theory of Networks and Systems, Leuven, Belgium, 2004.

[8] P. Benner, "Solving Large-Scale Control Problems", IEEE Control Systems Magazine, vol. 24, no. 1, pg. 44-59, 2004.

[9] J.A. Burns and K.P. Hulsing, "Numerical Methods for Approximating Functional Gains in LQR Boundary Control Problems", Mathematical and Computer Modelling, vol. 33, no. 1, pg. 89-100, 2001.

[10] N. Ellner and E.L. Wachspress, "Alternating Direction Implicit Iteration for Systems with Complex Spectra", SIAM J. Numer. Anal., vol. 28, no. 3, pg. 859-870, 1991.

[11] J.S. Gibson, "The Riccati Integral Equations for Optimal Control Problems on Hilbert Spaces", SIAM J. Control and Optim., vol. 17, pg. 537-565, 1979.

[12] J.S. Gibson, "Linear-quadratic optimal control of hereditary differential systems: infinite dimensional Riccati equations and numerical approximations", SIAM J. Control and Optim., vol. 21, pg. 95-139, 1983.

[13] G.H. Golub and C.F. van Loan, Matrix Computations, Johns Hopkins, 1989.

[14] J.R. Grad and K.A. Morris, "Solving the Linear Quadratic Control Problem for Infinite-Dimensional Systems", Computers and Mathematics with Applications, vol. 32, no. 9, pg. 99-119, 1996.

[15] K. Ito, "Strong convergence and convergence rates of approximating solutions for algebraic Riccati equations in Hilbert spaces", Distributed Parameter Systems, eds. F. Kappel, K. Kunisch, W. Schappacher, Springer-Verlag, pg. 151-166, 1987.

[16] K. Ito and K.A. Morris, "An approximation theory of solutions to operator Riccati equations for $H^\infty$ control", SIAM J. Control Optim., vol. 36, pg. 82-99, 1998.

[17] D. Kleinman, "On an Iterative Technique for Riccati Equation Computations", IEEE Transactions on Automat. Control, vol. 13, pg. 114-115, 1968.

[18] I. Lasiecka and R. Triggiani, Control Theory for Partial Differential Equations: Continuous and Approximation Theories, Part 1, Cambridge University Press, 2000.

[19] I. Lasiecka and R. Triggiani, Control Theory for Partial Differential Equations: Continuous and Approximation Theories, Part 2, Cambridge University Press, 2000.

[20] J.R. Li and J. White, "Low rank solution of Lyapunov equations", SIAM J. Matrix Anal. Appl., vol. 24, pg. 260-280, 2002.

[21] A. Lu and E.L. Wachspress, "Solution of Lyapunov Equations by Alternating Direction Implicit Iteration", Computers Math. Applic., vol. 21, no. 9, pg. 43-58, 1991.

[22] K.A. Morris, "Design of Finite-Dimensional Controllers for Infinite-Dimensional Systems by Approximation", J. Mathematical Systems, Estimation and Control, vol. 4, pg. 1-30, 1994.

[23] K.A. Morris and M. Vidyasagar, "A Comparison of Different Models for Beam Vibrations from the Standpoint of Controller Design", ASME Journal of Dynamic Systems, Measurement and Control, vol. 112, pg. 349-356, September 1990.

[24] K. Morris and C. Navasca, "Solution of Algebraic Riccati Equations Arising in Control of Partial Differential Equations", in Control and Boundary Analysis, ed. J. Cagnol and J-P Zolesio, Marcel Dekker, 2004.

[25] T. Penzl, "Eigenvalue decay bounds for solutions of Lyapunov equations: the symmetric case", Systems and Control Letters, vol. 40, pg. 139-144, 2000.

[26] T. Penzl, "A Cyclic Low-Rank Smith Method for Large Sparse Lyapunov Equations", SIAM J. Sci. Comput., vol. 21, no. 4, pg. 1401-1418, 2000.

[27] T. Penzl, LYAPACK Users' Guide, http://www.win.tue.nl/niconet.

[28] I.G. Rosen and C. Wang, "A multilevel technique for the approximate solution of operator Lyapunov and algebraic Riccati equations", SIAM J. Numer. Anal., vol. 32, pg. 514-541, 1994.

[29] D.L. Russell, Mathematics of Finite Dimensional Control Systems: Theory and Design, Marcel Dekker, New York, 1979.

[30] R.A. Smith, "Matrix Equation XA + BX = C", SIAM Jour. of Applied Math., vol. 16, pg. 198-201, 1968.

[31] E.L. Wachspress, "The ADI Model Problem", preprint, 1995.

[32] E. Wachspress, "Iterative Solution of the Lyapunov Matrix Equation", Appl. Math. Lett., vol. 1, pg. 87-90, 1988.


- Lecture 8. Linear Systems of Differential EquationsDocument4 pagesLecture 8. Linear Systems of Differential EquationsChernet TugePas encore d'évaluation

- Hydraulic Conductivity and Permeability As Tensors) : 1. Intuitive ThinkingDocument3 pagesHydraulic Conductivity and Permeability As Tensors) : 1. Intuitive ThinkingwiedziePas encore d'évaluation

- Analytic GeometryDocument312 pagesAnalytic Geometrysticker59290% (10)

- Chap 1 Preliminary Concepts: Nkim@ufl - EduDocument20 pagesChap 1 Preliminary Concepts: Nkim@ufl - Edudozio100% (1)

- ChaosDocument51 pagesChaoswladuzzPas encore d'évaluation

- I J I J I J I: Class - Xii Mathematics Assignment No. 1 MatricesDocument3 pagesI J I J I J I: Class - Xii Mathematics Assignment No. 1 MatricesAmmu KuttiPas encore d'évaluation

- On The Electrodynamics of Spinning Magnets PDFDocument5 pagesOn The Electrodynamics of Spinning Magnets PDFMenelao ZubiriPas encore d'évaluation

- The Derivative As The Slope of The Tangent Line: (At A Point)Document20 pagesThe Derivative As The Slope of The Tangent Line: (At A Point)Levi LeuckPas encore d'évaluation

- The Caratheodory Formulation of The Second LawDocument13 pagesThe Caratheodory Formulation of The Second Lawmon kwanPas encore d'évaluation

- 2d TransformationsDocument38 pages2d TransformationshfarrukhnPas encore d'évaluation

- Line Spectra: Institute of Chemistry, University of The Philippines-Los BanosDocument5 pagesLine Spectra: Institute of Chemistry, University of The Philippines-Los BanosKimberly DelicaPas encore d'évaluation

- PT Symmetry: Carl Bender Physics Department Washington UniversityDocument53 pagesPT Symmetry: Carl Bender Physics Department Washington UniversityMargaret KittyPas encore d'évaluation

- MIND MAP For PhysicsDocument4 pagesMIND MAP For Physicsalliey71% (7)

- AVRO Academy: Knowledge Thinking Communication ApplicationDocument7 pagesAVRO Academy: Knowledge Thinking Communication ApplicationFarhad AhmedPas encore d'évaluation

- 2103-351 - 2009 - Lecture Slide 5.0 - Convection Flux and Reynolds Transport Theorem (RTT)Document36 pages2103-351 - 2009 - Lecture Slide 5.0 - Convection Flux and Reynolds Transport Theorem (RTT)Muaz MushtaqPas encore d'évaluation

- Spherically Averaged Endpoint Strichartz Estimates For The Two-Dimensional SCHR Odinger EquationDocument15 pagesSpherically Averaged Endpoint Strichartz Estimates For The Two-Dimensional SCHR Odinger EquationUrsula GuinPas encore d'évaluation

- Noncommutative Geometry From D0-Branes in A Background B-FieldDocument14 pagesNoncommutative Geometry From D0-Branes in A Background B-FieldmlmilleratmitPas encore d'évaluation

- Part 1Document90 pagesPart 1Saeid SamiezadehPas encore d'évaluation

- DLP Q2 Week 1 D2Document6 pagesDLP Q2 Week 1 D2Menchie Yaba100% (1)

- Ap Calculus Ab IntegrationDocument1 pageAp Calculus Ab IntegrationteachopensourcePas encore d'évaluation

- Composite TpeDocument11 pagesComposite TpeAnyah Sampson ObassiPas encore d'évaluation

- Eigenvalue Problems (ODE) Sturm-Liouville ProblemsDocument21 pagesEigenvalue Problems (ODE) Sturm-Liouville Problemshoungjunhong03Pas encore d'évaluation

- Unit 11Document16 pagesUnit 11IISER MOHALIPas encore d'évaluation

- Lecture Notes On General RelativityDocument962 pagesLecture Notes On General RelativityClara MenendezPas encore d'évaluation

- (Krenk & Damkilde.,1993) - Semi-Loof Element For Plate InstabilityDocument9 pages(Krenk & Damkilde.,1993) - Semi-Loof Element For Plate Instabilitychristos032Pas encore d'évaluation

- Chapter 18: Graphing Straight Lines Chapter Pre-TestDocument3 pagesChapter 18: Graphing Straight Lines Chapter Pre-TestIvanakaPas encore d'évaluation

- Particle Astrophysics With High Energy Neutrinos: University of Wisconsin - MadisonDocument102 pagesParticle Astrophysics With High Energy Neutrinos: University of Wisconsin - MadisonAniruddha RayPas encore d'évaluation

- Quantum Gravity LecturesDocument222 pagesQuantum Gravity Lecturessidhartha samtaniPas encore d'évaluation

- 전자기학 1장 솔루션Document13 pages전자기학 1장 솔루션이재하Pas encore d'évaluation

- Classical Mechanics PDFDocument131 pagesClassical Mechanics PDFsgw67Pas encore d'évaluation