Académique Documents

Professionnel Documents

Culture Documents

01 Introductory Econometrics 4E Woolridge

Transféré par

Krak RanechantDescription originale:

Copyright

Formats disponibles

Partager ce document

Partager ou intégrer le document

Avez-vous trouvé ce document utile ?

Ce contenu est-il inapproprié ?

Signaler ce documentDroits d'auteur :

Formats disponibles

01 Introductory Econometrics 4E Woolridge

Transféré par

Krak RanechantDroits d'auteur :

Formats disponibles

Wooldridge, Introductory Econometrics, 4th ed. Chapter 1: Nature of Econometrics and Economic Data What do we mean by econometrics?

Econometrics is the eld of economics in which statistical methods are developed and applied to estimate economic relationships, test economic theories, and evaluate plans and policies implemented by private industry, government, and supranational organizations. Econometrics encompasses forecastingnot only the high-prole forecasts of macroeconomic and nancial variables, but also forecasts of demand for a product, likely eects of a tax package, or the interaction between the demand for health services and welfare reform. Why is econometrics separate from mathematical statistics? Because most applications of

statistics in economics and nance are related to the use of non-experimental data, or observational data. The fundamental techniques of statistics have been developed for use on experimental data: that gathered from controlled experiments, where the design of the experiment and the reliability of measurements from its outcomes are primary foci. In relying on observational data, economists are more like astronomers, able to collect and analyse increasingly complete measures on the world (or universe) around them, but unable to inuence the outcomes. This distinction is not absolute; some economic policies are in the nature of experiments, and economists have been heavily involved in both their design and implementation. A good example within the last ve years is the implementation of welfare reform, limiting individuals tenure on the welfare rolls to ve years

of lifetime experience. Many doubted that this would be successful in addressing the needs of those welfare recipients who have low job skills; but the reforms have been surprisingly successful, as a recent article in The Economist states, at raising the employment rate among this cohort. Economists are also able to carefully examine the economic consequences of massive regime changes in an economy, such as the transition from a planned economy to a capitalist system in the former Soviet bloc. But fundamentally applied econometricians observe the data, and use sophisticated techniques to evaluate their meaning. We speak of this work as empirical analysis, or empirical research. The rst step is the careful formulation of the question of interest. This will often involve the application or development of an economic model, which may be as simple as noting that normal goods have negative price elasticities, or exceedingly complex,

involving a full-edged description of many aspects of a set of interrelated markets and the supply/demand relationships for the products traded (as would, for instance, an econometric analysis of an antitrust issue, such as U.S. v Microsoft). Economists are often attacked for their imperialistic tendenciesapplying economic logic to consider such diverse topics as criminal behavior, fertility, or environmental issues but where there is an economic dimension, the application of economic logic and empirical research based on econometric practice may yield valuable insights. Gary Becker, who has made a career of applying economics to non-economic realms, won a Nobel Prize for his eorts.Crime, after all, is yet another career choice, and for high school dropouts who dont see much future in ipping burgers at minimum wage, it is hardly surprising that there are ample applicants for positions in a drug dealers distribution network. In risk-adjusted terms (gauging the risk of getting shot, or arrested and

successfully prosecuted...) the risk-adjusted hourly wage is many times the minimum wage. Should we be surprised by the outcome? Irregardless of whether empirical research is based on a formal economic model or economic intuition, the hypotheses about economic behavior must be transformed into an econometric model that can be applied to the data. In an economic model, we can speak of functions such as Q = Q (P, Y ) ; but if we are to estimate the parameters of that relationship, we must have an explicit functional form for the Q function, and determine that it is an appropriate form for the model we have in mind. For instance, if we were trying to predict the eciency of an automobile in terms of its engine size (displacement, in in3 or liters), Americans would likely rely on a measure like mpg miles per gallon. But the engineering relationship is not linear between mpg and displacement; it

is much closer to being a linear function if we relate gallons per mile (gpm = 1/mpg ) to engine size. The relationship will be curvilinear in mpg terms, requiring a more complex model, but nearly linear in gpm vs displacement. An econometric model will spell out the role of each of its variables: for instance, gpmi = 0 + 1displi + i would express the relationship between the fuel consumption of the ith automobile to its engine size, or displacement, as a linear function, with an additive error term i which encompasses all factors not included in the model. The parameters of the model are the terms, which must be estimated via statistical methods. Once that estimation has been done, we may test specic hypotheses on their values: for instance, that 1 is positive (larger engines use more fuel), or that 1 takes on a certain value. Estimating this relationship for Statas

auto.dta dataset of 74 automobiles, the predicted relationship is gpmi = 0.029 + 0.011displi where displacement is measured in hundreds of in3. This estimated relationship has an R2 value of 0.59, indicating that 59% of the variation of gpm around its mean is explained by displacement, and a root-mean-squared error of 0.008 (which can be compared to gpms mean of 0.050, corresponding to about 21 mpg). The structure of economic data We must acquaint ourselves with some terminology to describe the several forms in which economic and nancial data may appear. A great deal of the work we will do in this course will relate to cross-sectional data: a sample of units (individuals, families, rms, industries, countries...) taken at a given point

in time, or in a particular time frame. The sample is often considered to be a random sample of some sort when applied to microdata such as that gathered from individuals or households. For instance, the ocial estimates of the U.S. unemployment rate are gathered from a monthly survey of individuals, in which each is asked about their employment status. It is not a count, or census, of those out of work. Of course, some cross sections are not samples, but may represent the population: e.g. data from the 50 states do not represent a random sample of states. A crosssectional dataset can be conceptualized as a spreadsheet, with variables in the columns and observations in the rows. Each row is uniquely identied by an observation number, but in a cross-sectional datasets the ordering of the observations is immaterial. Dierent variables may correspond to dierent time periods; we might have a dataset containing municipalities,

their employment rates, and their population in the 1990 and 2000 censuses. The other major form of data considered in econometrics is the time series: a series of evenly spaced measurements on a variable. A time-series dataset may contain a number of measures, each measured at the same frequency, including measures derived from the originals such as lagged values, dierences, and the like. Time series are innately more dicult to handle in an econometric context because their observations almost surely are interdependent across time. Most economic and nancial time series exhibit some degree of persistence. Although we may be able to derive some measures which should not, in theory, be explainable from earlier observations (such as tomorrows stock return in an ecient market), most economic time series are both interrelated and autocorrelatedthat is, related to themselves

across time periods. In a spreadsheet context, the variables would be placed in the columns, and the rows labelled with dates or times. The order of the observations in a time-series dataset matters, since it denotes the passage of equal increments of time. We will discuss time-series data and some of the special techniques that have been developed for its analysis in the latter part of the course. Two combinations of these data schemes are also widely used: pooled cross-section/time series (CS/TS) datasets and panel, or longitudinal, data sets. The former (CS/TS) arise in the context of a repeated surveysuch as a presidential popularity pollwhere the respondents are randomly chosen. It is advantageous to analyse multiple cross-sections, but not possible to link observations across the cross-sections. Much more useful are panel data sets, in which we have timeseries of observations on the same unit: for instance, Ci,t

might be the consumption level of the ith household at time t. Many of the datasets we commonly utilize in economic and nancial research are of this nature: for instance, a great deal of research in corporate nance is carried out with Standard and Poors COMPUSTAT, a panel data set containing 20 years of annual nancial statements for thousands of major U.S. corporations. There is a wide array of specialized econometric techniques that have been developed to analyse panel data; we will not touch upon them in this course. Causality and ceteris paribus The hypotheses tested in applied econometric analysis are often posed to make inferences about the possible causal eects of one or more factors on a response variable: that is, do changes in consumers incomes cause changes in their consumption of beer? At some level,

of course, we can never establish causation unlike the physical sciences, where the interrelations of molecules may follow well-established physical laws, our observed phenomena represent innately unpredictable human behavior. In economic theory, we generally hold that individuals exhibit rational behavior; but since the econometrician does not observe all of the factors that might inuence behavior, we cannot always make sensible inferences about potentially causal factors. Whenever we operationalize an econometric model, we implicitly acknowledge that it can only capture a few key details of the behavioral relationship, and is leaving many additional factors (which may or may not be observable) in the pound of ceteris paribus. ceteris paribusliterally, other things equalalways underlies our inferences from empirical research. Our best hope is that we might control for many of the factors, and be able to use our empirical ndings

to ascertain whether systematic factors have been omitted. Any econometric model should be subjected to diagnostic testing to determine whether it contains obvious aws. For instance, the relationship between mpg and displ in the automobile data is strictly dominated by a model containing both displ and displ2, given the curvilinear relation between mpg and displ. Thus the original linear model can be viewed as unacceptable in comparison to the polynomial model; this conclusion could be drawn from analysis of the models residuals, coupled with an understanding of the engineering relationship that posits a nonlinear function between mpg and displ.

Vous aimerez peut-être aussi

- Econometrics Notes PDFDocument8 pagesEconometrics Notes PDFumamaheswariPas encore d'évaluation

- IntroEconmerics AcFn 5031 (q1)Document302 pagesIntroEconmerics AcFn 5031 (q1)yesuneh98Pas encore d'évaluation

- Multicollinearity Among The Regressors Included in The Regression ModelDocument13 pagesMulticollinearity Among The Regressors Included in The Regression ModelNavyashree B MPas encore d'évaluation

- DP1HL1 IntroDocument85 pagesDP1HL1 IntroSenam DzakpasuPas encore d'évaluation

- Homoscedastic That Is, They All Have The Same Variance: HeteroscedasticityDocument11 pagesHomoscedastic That Is, They All Have The Same Variance: HeteroscedasticityNavyashree B M100% (1)

- DummyDocument20 pagesDummymushtaque61Pas encore d'évaluation

- Public Finance SolutionDocument24 pagesPublic Finance SolutionChan Zachary100% (1)

- Chapter 6 Dummy Variable AssignmentDocument4 pagesChapter 6 Dummy Variable Assignmentharis100% (1)

- Studenmund Ch01 v2Document31 pagesStudenmund Ch01 v2tsy0703Pas encore d'évaluation

- AutocorrelationDocument172 pagesAutocorrelationJenab Peteburoh100% (1)

- Stationarity and Unit Root TestingDocument21 pagesStationarity and Unit Root TestingAwAtef LHPas encore d'évaluation

- Regression TechniquesDocument5 pagesRegression Techniquesvisu009Pas encore d'évaluation

- Topic 6 Two Variable Regression Analysis Interval Estimation and Hypothesis TestingDocument36 pagesTopic 6 Two Variable Regression Analysis Interval Estimation and Hypothesis TestingOliver Tate-DuncanPas encore d'évaluation

- HeteroscedasticityDocument7 pagesHeteroscedasticityBristi RodhPas encore d'évaluation

- Financial Econometrics 2010-2011Document483 pagesFinancial Econometrics 2010-2011axelkokocur100% (1)

- Complete Business Statistics: Simple Linear Regression and CorrelationDocument50 pagesComplete Business Statistics: Simple Linear Regression and Correlationmallick5051rajatPas encore d'évaluation

- Econometrics Ch6 ApplicationsDocument49 pagesEconometrics Ch6 ApplicationsMihaela SirițanuPas encore d'évaluation

- Module 2 - Research MethodologyDocument18 pagesModule 2 - Research MethodologyPRATHAM MOTWANI100% (1)

- Unbalanced Panel Data PDFDocument51 pagesUnbalanced Panel Data PDFrbmalasaPas encore d'évaluation

- Final Project - Climate Change PlanDocument32 pagesFinal Project - Climate Change PlanHannah LeePas encore d'évaluation

- Micro Perfect and MonopolyDocument57 pagesMicro Perfect and Monopolytegegn mogessiePas encore d'évaluation

- 178.200 06-4Document34 pages178.200 06-4api-3860979Pas encore d'évaluation

- Introduction To Introduction To Econometrics Econometrics Econometrics Econometrics (ECON 352) (ECON 352)Document12 pagesIntroduction To Introduction To Econometrics Econometrics Econometrics Econometrics (ECON 352) (ECON 352)Nhatty Wero100% (2)

- Term Paper Eco No MetricDocument34 pagesTerm Paper Eco No MetricmandeepkumardagarPas encore d'évaluation

- Panel Econometrics HistoryDocument65 pagesPanel Econometrics HistoryNaresh SehdevPas encore d'évaluation

- Business Economics MainDocument8 pagesBusiness Economics MainJaskaran MannPas encore d'évaluation

- Ex08 PDFDocument29 pagesEx08 PDFSalman FarooqPas encore d'évaluation

- Solution Manual For Using Econometrics A Practical Guide 6 e 6th Edition A H StudenmundDocument6 pagesSolution Manual For Using Econometrics A Practical Guide 6 e 6th Edition A H Studenmundlyalexanderzh52Pas encore d'évaluation

- Chapter # 6: Multiple Regression Analysis: The Problem of EstimationDocument43 pagesChapter # 6: Multiple Regression Analysis: The Problem of EstimationRas DawitPas encore d'évaluation

- 3 Multiple Linear Regression: Estimation and Properties: Ezequiel Uriel Universidad de Valencia Version: 09-2013Document37 pages3 Multiple Linear Regression: Estimation and Properties: Ezequiel Uriel Universidad de Valencia Version: 09-2013penyia100% (1)

- Introduction To Econometrics - Stock & Watson - CH 12 SlidesDocument46 pagesIntroduction To Econometrics - Stock & Watson - CH 12 SlidesAntonio AlvinoPas encore d'évaluation

- Chap 8 AnswersDocument7 pagesChap 8 Answerssharoz0saleemPas encore d'évaluation

- Structure Project Topics For Projects 2016Document4 pagesStructure Project Topics For Projects 2016Mariu VornicuPas encore d'évaluation

- Dev't PPA I (Chap-4)Document48 pagesDev't PPA I (Chap-4)ferewe tesfaye100% (1)

- Chapter 1: The Nature of Econometrics and Economic Data: Ruslan AliyevDocument27 pagesChapter 1: The Nature of Econometrics and Economic Data: Ruslan AliyevMurselPas encore d'évaluation

- Multiple Choice Test Bank Questions No Feedback - Chapter 3: y + X + X + X + UDocument3 pagesMultiple Choice Test Bank Questions No Feedback - Chapter 3: y + X + X + X + Uimam awaluddinPas encore d'évaluation

- Statistics For EconomicsDocument58 pagesStatistics For EconomicsKintu GeraldPas encore d'évaluation

- The Three Box Solution Cheat SheetDocument1 pageThe Three Box Solution Cheat SheetAndrea CattaneoPas encore d'évaluation

- Hoff (2000), 'The Modern Theory of Underdevelopment Traps'Document58 pagesHoff (2000), 'The Modern Theory of Underdevelopment Traps'Joe OglePas encore d'évaluation

- Liquidity Preference As Behavior Towards Risk Review of Economic StudiesDocument23 pagesLiquidity Preference As Behavior Towards Risk Review of Economic StudiesCuenta EliminadaPas encore d'évaluation

- Autocorrelation: What Happens If The Error Terms Are Correlated?Document18 pagesAutocorrelation: What Happens If The Error Terms Are Correlated?Nusrat Jahan MoonPas encore d'évaluation

- Financial Econometrics IntroductionDocument13 pagesFinancial Econometrics IntroductionPrabath Suranaga MorawakagePas encore d'évaluation

- Nature of Regression AnalysisDocument22 pagesNature of Regression Analysiswhoosh2008Pas encore d'évaluation

- Economics Unit OutlineDocument8 pagesEconomics Unit OutlineHaris APas encore d'évaluation

- Sample Exam Questions (And Answers)Document22 pagesSample Exam Questions (And Answers)Diem Hang VuPas encore d'évaluation

- Cases RCCDocument41 pagesCases RCCrderijkPas encore d'évaluation

- CAPMDocument34 pagesCAPMadityav_13100% (1)

- 1-Value at Risk (Var) Models, Methods & Metrics - Excel Spreadsheet Walk Through Calculating Value at Risk (Var) - Comparing Var Models, Methods & MetricsDocument65 pages1-Value at Risk (Var) Models, Methods & Metrics - Excel Spreadsheet Walk Through Calculating Value at Risk (Var) - Comparing Var Models, Methods & MetricsVaibhav KharadePas encore d'évaluation

- Unit 9 Linear Regression: StructureDocument18 pagesUnit 9 Linear Regression: StructurePranav ViswanathanPas encore d'évaluation

- Economics Open Closed SystemsDocument37 pagesEconomics Open Closed Systemsmahathir rafsanjaniPas encore d'évaluation

- Solution For HW 1Document15 pagesSolution For HW 1Ashtar Ali Bangash0% (1)

- AutocorrelationDocument46 pagesAutocorrelationBabar AdeebPas encore d'évaluation

- Multiple Linear Regression: y BX BX BXDocument14 pagesMultiple Linear Regression: y BX BX BXPalaniappan SellappanPas encore d'évaluation

- Chap 013Document67 pagesChap 013Bruno MarinsPas encore d'évaluation

- Harvard Economics 2020a Problem Set 3Document2 pagesHarvard Economics 2020a Problem Set 3JPas encore d'évaluation

- Micro Chapter 04Document15 pagesMicro Chapter 04deeptitripathi09Pas encore d'évaluation

- Weil GrowthDocument72 pagesWeil GrowthJavier Burbano ValenciaPas encore d'évaluation

- TAPPI T 810 Om-06 Bursting Strength of Corrugated and Solid FiberboardDocument5 pagesTAPPI T 810 Om-06 Bursting Strength of Corrugated and Solid FiberboardNguyenSongHaoPas encore d'évaluation

- ASM1 ProgramingDocument14 pagesASM1 ProgramingTran Cong Hoang (BTEC HN)Pas encore d'évaluation

- Plantas Con Madre Plants That Teach and PDFDocument15 pagesPlantas Con Madre Plants That Teach and PDFJetPas encore d'évaluation

- Trainer Manual Internal Quality AuditDocument32 pagesTrainer Manual Internal Quality AuditMuhammad Erwin Yamashita100% (5)

- OMN-TRA-SSR-OETC-Course Workbook 2daysDocument55 pagesOMN-TRA-SSR-OETC-Course Workbook 2daysMANIKANDAN NARAYANASAMYPas encore d'évaluation

- Successful School LeadershipDocument132 pagesSuccessful School LeadershipDabney90100% (2)

- Schematic Circuits: Section C - ElectricsDocument1 pageSchematic Circuits: Section C - ElectricsIonut GrozaPas encore d'évaluation

- Ems Speed Sensor Com MotorDocument24 pagesEms Speed Sensor Com MotorKarina RickenPas encore d'évaluation

- CH 2 PDFDocument85 pagesCH 2 PDFSajidPas encore d'évaluation

- File 1038732040Document70 pagesFile 1038732040Karen Joyce Costales MagtanongPas encore d'évaluation

- Civil Engineering Literature Review SampleDocument6 pagesCivil Engineering Literature Review Sampleea442225100% (1)

- Modicon PLC CPUS Technical Details.Document218 pagesModicon PLC CPUS Technical Details.TrbvmPas encore d'évaluation

- Top249 1 PDFDocument52 pagesTop249 1 PDFCarlos Henrique Dos SantosPas encore d'évaluation

- ILI9481 DatasheetDocument143 pagesILI9481 DatasheetdetonatPas encore d'évaluation

- BS en 50216-6 2002Document18 pagesBS en 50216-6 2002Jeff Anderson Collins100% (3)

- Parts List 38 254 13 95: Helical-Bevel Gear Unit KA47, KH47, KV47, KT47, KA47B, KH47B, KV47BDocument4 pagesParts List 38 254 13 95: Helical-Bevel Gear Unit KA47, KH47, KV47, KT47, KA47B, KH47B, KV47BEdmundo JavierPas encore d'évaluation

- LampiranDocument26 pagesLampiranSekar BeningPas encore d'évaluation

- Chapter 3 PayrollDocument5 pagesChapter 3 PayrollPheng Tiosen100% (2)

- Angelina JolieDocument14 pagesAngelina Joliemaria joannah guanteroPas encore d'évaluation

- Boq Cme: 1 Pole Foundation Soil WorkDocument1 pageBoq Cme: 1 Pole Foundation Soil WorkyuwonoPas encore d'évaluation

- Amberjet™ 1500 H: Industrial Grade Strong Acid Cation ExchangerDocument2 pagesAmberjet™ 1500 H: Industrial Grade Strong Acid Cation ExchangerJaime SalazarPas encore d'évaluation

- Change LogDocument145 pagesChange LogelhohitoPas encore d'évaluation

- Remediation of AlphabetsDocument34 pagesRemediation of AlphabetsAbdurahmanPas encore d'évaluation

- Harish Raval Rajkot.: Civil ConstructionDocument4 pagesHarish Raval Rajkot.: Civil ConstructionNilay GandhiPas encore d'évaluation

- JOB Performer: Q .1: What Is Permit?Document5 pagesJOB Performer: Q .1: What Is Permit?Shahid BhattiPas encore d'évaluation

- Cable Schedule - Instrument - Surfin - Malanpur-R0Document3 pagesCable Schedule - Instrument - Surfin - Malanpur-R0arunpandey1686Pas encore d'évaluation

- EHVACDocument16 pagesEHVACsidharthchandak16Pas encore d'évaluation

- Citing Correctly and Avoiding Plagiarism: MLA Format, 7th EditionDocument4 pagesCiting Correctly and Avoiding Plagiarism: MLA Format, 7th EditionDanish muinPas encore d'évaluation

- Cognitive-Behavioral Interventions For PTSDDocument20 pagesCognitive-Behavioral Interventions For PTSDBusyMindsPas encore d'évaluation

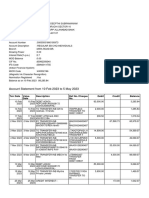

- Feb-May SBI StatementDocument2 pagesFeb-May SBI StatementAshutosh PandeyPas encore d'évaluation