Parallel Processing: Execution of Concurrent Events in The Computing Process To Achieve Faster Computational Speed

Uploaded by Georgian Chail

Memory Organization

Lecture 46

Parallel Processing

Execution of concurrent events in the computing process to achieve faster computational speed

Levels of Parallel Processing

- Job or Program level

- Task or Procedure level

- Inter-Instruction level

- Intra-Instruction level

Parallel Computers

Architectural Classification

Flynn's classification

Based on the multiplicity of instruction streams and data streams

- Instruction stream: the sequence of instructions read from memory
- Data stream: the operations performed on the data in the processor

| Number of instruction streams | Single data stream | Multiple data streams |
|---|---|---|
| Single | SISD | SIMD |
| Multiple | MISD | MIMD |
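
The classification above depends only on whether each stream count is one or more than one. A minimal sketch of that lookup follows; the function name `flynn_class` and its parameters are hypothetical and are not part of the lecture.

```python
def flynn_class(instruction_streams: int, data_streams: int) -> str:
    """Return the Flynn category name for the given stream counts.

    Hypothetical helper: a count of 1 maps to "Single",
    any larger count maps to "Multiple".
    """
    if instruction_streams < 1 or data_streams < 1:
        raise ValueError("stream counts must be at least 1")
    i = "S" if instruction_streams == 1 else "M"
    d = "S" if data_streams == 1 else "M"
    return f"{i}I{d}D"


if __name__ == "__main__":
    for counts in [(1, 1), (1, 8), (4, 1), (4, 4)]:
        print(counts, flynn_class(*counts))
```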

SISD

[Figure: SISD organization. Blocks: control unit, processor unit, memory. Connections: instruction stream, data stream.]

Characteristics

- Standard von Neumann machine

- Instructions and data are stored in memory

- One operation at a time

Limitations

Von Neumann bottleneck: the maximum speed of the system is limited by the memory bandwidth (bits/sec or bytes/sec)

- Limitation on Memory Bandwidth

- Memory is shared by CPU and I/O
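
To make the bottleneck concrete, the sketch below computes an upper bound on the operation rate from the memory bandwidth. It assumes every operation must move a fixed number of bytes across the memory interface and that nothing else uses the memory; the function name and the numbers are illustrative assumptions, not figures from the lecture.

```python
def max_operations_per_second(bandwidth_bytes_per_sec: float,
                              bytes_per_operation: float) -> float:
    """Upper bound on the operation rate imposed by memory bandwidth.

    Assumption: each operation transfers `bytes_per_operation` bytes
    (instruction plus operands) and the memory is otherwise idle.
    """
    if bytes_per_operation <= 0:
        raise ValueError("bytes_per_operation must be positive")
    return bandwidth_bytes_per_sec / bytes_per_operation


# Illustrative numbers: 1 GB/s of bandwidth, 8 bytes moved per operation
# (for example a 4-byte instruction and a 4-byte operand).
bound = max_operations_per_second(1e9, 8)
print(f"at most {bound:.0f} operations per second")
```

Because memory is also shared with I/O, the bandwidth actually available to the CPU is lower than the raw figure, so the real bound is tighter.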

MISD

[Figure: MISD organization. Labels: M, several control units (CU), memory. Connections: data stream, instruction stream.]

Characteristics

- There is no computer at present that can be classified as MISD

SIMD

[Figure: SIMD organization. Blocks: memory, data bus, control unit, processor units (P), alignment network, memory modules (M). Connections: instruction stream, data stream.]

Characteristics

- Only one copy of the program exists
- A single controller executes one instruction at a time
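
The two characteristics above can be sketched in software: one instruction is issued by a single controller and applied to every data element in the same step. This is only an analogy in plain Python, not a model of real SIMD hardware; `simd_step` and the sample data are hypothetical.

```python
import operator
from typing import Callable, List, Sequence


def simd_step(instruction: Callable[[float, float], float],
              lane_a: Sequence[float],
              lane_b: Sequence[float]) -> List[float]:
    """Apply ONE instruction to every pair of data elements.

    Each list position stands in for one processor unit working on
    its own data stream; the instruction is the same for all of them.
    """
    if len(lane_a) != len(lane_b):
        raise ValueError("both operands need one element per processor unit")
    return [instruction(x, y) for x, y in zip(lane_a, lane_b)]


a = [1, 2, 3, 4]
b = [10, 20, 30, 40]

# The single controller issues one instruction at a time.
products = simd_step(operator.mul, a, b)     # multiply in every lane
sums = simd_step(operator.add, products, b)  # then add in every lane
print(products, sums)
```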

MIMD

[Figure: MIMD organization. Processors (P) connected through an interconnection network to shared memory.]

Characteristics

- Multiple processing units

- Execution of multiple instructions on multiple data

Types of MIMD computer systems

- Shared memory multiprocessors

- Message-passing multicomputers
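
The difference between the two MIMD types can be sketched with Python threads standing in for processors. In the first function the workers update one shared variable under a lock (shared memory); in the second they only send results through a queue (message passing). Threads are an analogy chosen for brevity, and the function names are hypothetical.

```python
import queue
import threading
from typing import List, Sequence


def _run(workers: List[threading.Thread]) -> None:
    """Start all workers and wait for them to finish."""
    for w in workers:
        w.start()
    for w in workers:
        w.join()


def shared_memory_sum(chunks: Sequence[Sequence[int]]) -> int:
    """Workers communicate by writing to a common memory location."""
    total = [0]
    lock = threading.Lock()

    def worker(chunk: Sequence[int]) -> None:
        partial = sum(chunk)
        with lock:                # serialize access to the shared cell
            total[0] += partial

    _run([threading.Thread(target=worker, args=(c,)) for c in chunks])
    return total[0]


def message_passing_sum(chunks: Sequence[Sequence[int]]) -> int:
    """Workers share nothing; each sends its result as a message."""
    mailbox: "queue.Queue[int]" = queue.Queue()

    def worker(chunk: Sequence[int]) -> None:
        mailbox.put(sum(chunk))   # the only communication is this message

    _run([threading.Thread(target=worker, args=(c,)) for c in chunks])
    return sum(mailbox.get() for _ in chunks)


data = [[1, 2], [3, 4], [5, 6]]
print(shared_memory_sum(data), message_passing_sum(data))
```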

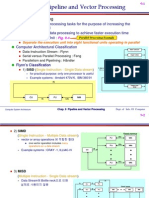

Pipelining

A technique of decomposing a sequential process into suboperations, with each suboperation being executed in its own dedicated segment that operates concurrently with all other segments.

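Example: Ai * Bi + Ci for i = 1, 2, 3, ..., 7

[Figure: three-segment pipeline. Segment 1: Ai and Bi are loaded from memory into R1 and R2. Segment 2: the multiplier output goes to R3 and Ci is loaded from memory into R4. Segment 3: the adder output goes to R5.]

Suboperations in each segment

- Segment 1: R1 <- Ai, R2 <- Bi (load Ai and Bi)
- Segment 2: R3 <- R1 * R2, R4 <- Ci (multiply and load Ci)
- Segment 3: R5 <- R3 + R4 (add)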

Operations in each Pipeline Stage

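| Clock pulse number | Segment 1: R1 | Segment 1: R2 | Segment 2: R3 | Segment 2: R4 | Segment 3: R5 |
|---|---|---|---|---|---|
| 1 | A1 | B1 | | | |
| 2 | A2 | B2 | A1 * B1 | C1 | |
| 3 | A3 | B3 | A2 * B2 | C2 | A1 * B1 + C1 |
| 4 | A4 | B4 | A3 * B3 | C3 | A2 * B2 + C2 |
| 5 | A5 | B5 | A4 * B4 | C4 | A3 * B3 + C3 |
| 6 | A6 | B6 | A5 * B5 | C5 | A4 * B4 + C4 |
| 7 | A7 | B7 | A6 * B6 | C6 | A5 * B5 + C5 |
| 8 | | | A7 * B7 | C7 | A6 * B6 + C6 |
| 9 | | | | | A7 * B7 + C7 |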
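
The register contents at each clock pulse can be reproduced with a short simulation of the three segments computing Ai * Bi + Ci. This is a sketch under the assumption that all registers are updated together on each pulse; `simulate_pipeline` and the sample operands are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Row = Tuple[int, Optional[int], Optional[int],
            Optional[int], Optional[int], Optional[int]]


def simulate_pipeline(a: Sequence[int], b: Sequence[int],
                      c: Sequence[int]) -> Tuple[List[int], List[Row]]:
    """Simulate the 3-segment pipeline for Ai * Bi + Ci.

    Returns the results in order and a trace of
    (pulse, R1, R2, R3, R4, R5); None marks an empty register.
    """
    n = len(a)
    r1 = r2 = r3 = r4 = r5 = None
    results: List[int] = []
    trace: List[Row] = []
    for pulse in range(1, n + 3):          # k + n - 1 pulses with k = 3
        # Update back to front so each segment reads the values
        # latched on the previous pulse.
        r5 = r3 + r4 if r3 is not None else None               # segment 3
        if r1 is not None:                                     # segment 2
            r3, r4 = r1 * r2, c[pulse - 2]
        else:
            r3 = r4 = None
        if pulse <= n:                                         # segment 1
            r1, r2 = a[pulse - 1], b[pulse - 1]
        else:
            r1 = r2 = None
        trace.append((pulse, r1, r2, r3, r4, r5))
        if r5 is not None:
            results.append(r5)
    return results, trace


results, trace = simulate_pipeline([1, 2, 3, 4, 5, 6, 7], [2] * 7, [10] * 7)
for row in trace:
    print(row)
```

With seven operand sets the trace has nine rows: the first result appears in R5 on pulse 3 and one more result follows on every later pulse.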

General Pipeline

General Structure of a 4-Segment Pipeline

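[Figure: the input passes through segments S1, S2, S3 and S4 in sequence. Each segment Si is followed by a register Ri, and a common clock is applied to all registers.]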

Space-Time Diagram

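| Segment | Cycle 1 | Cycle 2 | Cycle 3 | Cycle 4 | Cycle 5 | Cycle 6 | Cycle 7 | Cycle 8 | Cycle 9 |
|---|---|---|---|---|---|---|---|---|---|
| 1 | T1 | T2 | T3 | T4 | T5 | T6 | | | |
| 2 | | T1 | T2 | T3 | T4 | T5 | T6 | | |
| 3 | | | T1 | T2 | T3 | T4 | T5 | T6 | |
| 4 | | | | T1 | T2 | T3 | T4 | T5 | T6 |

Columns are clock cycles; each task Ti advances one segment per cycle.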
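
A space-time diagram for any number of segments and tasks follows one rule: task i occupies segment s during clock cycle i + s - 1. The sketch below builds the grid from that rule; `space_time_diagram` and `render` are hypothetical helpers.

```python
from typing import List


def space_time_diagram(k: int, n: int) -> List[List[str]]:
    """Return a k-row grid with k + n - 1 columns (clock cycles).

    grid[s][c] holds the task label in segment s + 1 during
    cycle c + 1, or "" when that segment is idle.
    """
    if k < 1 or n < 1:
        raise ValueError("k and n must be at least 1")
    cycles = k + n - 1
    grid = [["" for _ in range(cycles)] for _ in range(k)]
    for task in range(n):
        for segment in range(k):
            # Task `task + 1` reaches segment `segment + 1`
            # exactly `segment` cycles after it entered the pipeline.
            grid[segment][task + segment] = f"T{task + 1}"
    return grid


def render(grid: List[List[str]]) -> str:
    """Format the grid as fixed-width text, one line per segment."""
    lines = []
    for number, row in enumerate(grid, start=1):
        cells = " ".join(f"{cell:>3}" for cell in row)
        lines.append(f"Segment {number}: {cells}")
    return "\n".join(lines)


grid = space_time_diagram(k=4, n=6)
print(render(grid))
```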

Pipeline SpeedUp

n: number of tasks to be performed

Conventional Machine (Non-Pipelined)

- tn: clock cycle (time to complete each task)
- Time required to complete the n tasks = n * tn

Pipelined Machine (k stages)

- tp: clock cycle (time to complete each suboperation)
- Time required to complete the n tasks = (k + n - 1) * tp

Speedup

- Sk: speedup of the k-stage pipeline over the non-pipelined machine
- Sk = (n * tn) / ((k + n - 1) * tp)
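
A short worked example of the speedup ratio, using the symbols defined above. The numbers (k = 4, tp = 20 ns, n = 100, tn = 80 ns) are illustrative assumptions, and `pipeline_speedup` is a hypothetical helper.

```python
def pipeline_speedup(n: int, k: int, tn: float, tp: float) -> float:
    """Sk = (n * tn) / ((k + n - 1) * tp)."""
    if n < 1 or k < 1 or tn <= 0 or tp <= 0:
        raise ValueError("n, k must be >= 1 and tn, tp must be positive")
    non_pipelined_time = n * tn
    pipelined_time = (k + n - 1) * tp
    return non_pipelined_time / pipelined_time


# Illustrative values: a 4-stage pipeline with a 20 ns clock cycle,
# compared with a non-pipelined unit that needs 80 ns per task.
speedup = pipeline_speedup(n=100, k=4, tn=80.0, tp=20.0)
print(f"non-pipelined: {100 * 80.0:.0f} ns")
print(f"pipelined:     {(4 + 100 - 1) * 20.0:.0f} ns")
print(f"speedup:       {speedup:.2f}")
```

As n grows, k + n - 1 approaches n and the ratio approaches tn / tp. If the non-pipelined task time equals k suboperation times (tn = k * tp), the speedup approaches k, the number of stages.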
