STKI - Session 6

Uploaded by Hanifa Vidya Rizanti

Vector Space Model

- Imam Cholissodin -

Review : Boolean Retrieval

Advantages and Disadvantages :

Documents either match or don't.

Good for expert users with a precise understanding of their needs and the collection (e.g., library search).

Also good for applications: applications can easily consume 1000s of results.

Not good for the majority of users.

Most users are incapable of writing Boolean queries (or they are capable, but they think it's too much work).

Most users don't want to wade through 1000s of results (e.g., web search).

Problem with Boolean Model

Boolean queries often result in either too few (= 0) or too many (1000s) results.

Query 1: "standard user dlink 650" → 200,000 hits

Query 2: "standard user dlink 650 no card found" → 0 hits

Each space in the query defaults to the Boolean AND operator.
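The feast-or-famine behaviour of implicit AND can be sketched on a toy collection. The three documents and the helper name `boolean_and` below are hypothetical and only illustrate the idea; they are not taken from the slides.

```python
# Toy collection (hypothetical documents, for illustration only).
docs = {
    1: "standard user dlink 650 router setup",
    2: "dlink 650 standard user manual",
    3: "dlink 650 no card found error",
}

def boolean_and(query, docs):
    """Return ids of documents that contain every query term (implicit AND)."""
    terms = query.lower().split()
    return sorted(
        doc_id
        for doc_id, text in docs.items()
        if all(term in text.lower().split() for term in terms)
    )

# A short query matches several documents ...
few_terms = boolean_and("standard user dlink 650", docs)
# ... while adding more terms can quickly shrink the result set to nothing.
many_terms = boolean_and("standard user dlink 650 no card found", docs)

print(few_terms)
print(many_terms)
```

Every additional term can only shrink the result set, which is why long Boolean queries tend to return nothing at all.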

Scoring as the basis of ranked retrieval

We wish to return, in order, the documents most likely to be useful to the searcher

How can we rank-order the documents in the collection with respect to a query?

Assign a score, say in [0, 1], to each document

This score measures how well document and query match.

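Model Comparison : Boolean & Vector

Boolean : Each document is represented by a binary vector $\in \{0,1\}^{|V|}$

| term | Antony and Cleopatra | Julius Caesar | The Tempest | Hamlet | Othello | Macbeth |
|---|---|---|---|---|---|---|
| Antony | 1 | 1 | 0 | 0 | 0 | 1 |
| Brutus | 1 | 1 | 0 | 1 | 0 | 0 |
| Caesar | 1 | 1 | 0 | 1 | 1 | 1 |
| Calpurnia | 0 | 1 | 0 | 0 | 0 | 0 |
| Cleopatra | 1 | 0 | 0 | 0 | 0 | 0 |
| mercy | 1 | 0 | 1 | 1 | 1 | 1 |
| worser | 1 | 0 | 1 | 1 | 1 | 0 |

Vector : Each document is a count vector $\in \mathbb{N}^{|V|}$

| term | Antony and Cleopatra | Julius Caesar | The Tempest | Hamlet | Othello | Macbeth |
|---|---|---|---|---|---|---|
| Antony | 157 | 73 | 0 | 0 | 0 | 0 |
| Brutus | 4 | 157 | 0 | 1 | 0 | 0 |
| Caesar | 232 | 227 | 0 | 2 | 1 | 1 |
| Calpurnia | 0 | 10 | 0 | 0 | 0 | 0 |
| Cleopatra | 57 | 0 | 0 | 0 | 0 | 0 |
| mercy | 2 | 0 | 3 | 5 | 5 | 1 |
| worser | 2 | 0 | 1 | 1 | 1 | 0 |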
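As a rough sketch of the difference between the two representations, the snippet below builds both vectors for one document. The four-term vocabulary and the toy text are assumptions made for illustration, not data from the matrices.

```python
from collections import Counter

# Hypothetical vocabulary (fixed term order) and toy document.
vocabulary = ["antony", "brutus", "caesar", "mercy"]
text = "caesar brutus caesar mercy caesar"

counts = Counter(text.split())

# Vector model: one term count per vocabulary entry.
count_vector = [counts[term] for term in vocabulary]

# Boolean model: only records whether the term occurs at all.
binary_vector = [int(count > 0) for count in count_vector]

print(count_vector)
print(binary_vector)
```

The binary vector discards how often a term occurs, which is the information the later weighting schemes build on.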

Structure : Vector Space Model

Represent the query as a weighted tf-idf vector

Represent each document as a weighted tf-idf vector

Compute the cosine similarity score for the query vector and each document vector

Rank documents with respect to the query by score

Return the top K (e.g., K = 10) to the user

Bag of Words : Vector Model

Vector representation doesn't consider the ordering of words in a document

"John is quicker than Mary" and "Mary is quicker than John" have the same vectors

This is called the bag of words model.

In a sense, this is a step back: the positional index was able to distinguish these two documents.
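A small check of the claim about the two sentences, using a plain word count as the vector. The helper name `bag_of_words` is hypothetical, and whitespace tokenization is a simplifying assumption.

```python
from collections import Counter

def bag_of_words(text):
    """Map a text to its term counts, ignoring word order."""
    return Counter(text.lower().split())

first = "John is quicker than Mary"
second = "Mary is quicker than John"

same_bag = bag_of_words(first) == bag_of_words(second)
same_sequence = first.lower().split() == second.lower().split()

print(same_bag)       # the bags are identical
print(same_sequence)  # the word sequences are not
```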

Term Frequency (tf)

The term frequency $\mathrm{tf}_{t,d}$ of term t in document d is defined as the number of times that t occurs in d.

We want to use tf when computing query-document match scores. But how?

Raw term frequency is not what we want:

A document with 10 occurrences of the term may be more relevant than a document with one occurrence of the term.

But not 10 times more relevant.

Relevance does not increase proportionally with term frequency.

Log-Frequency Weighting

The log frequency weight of term t in d is

$$w_{t,d} = \begin{cases} 1 + \log_{10} \mathrm{tf}_{t,d}, & \text{if } \mathrm{tf}_{t,d} > 0 \\ 0, & \text{otherwise} \end{cases}$$

0 → 0, 1 → 1, 2 → 1.3, 10 → 2, 1000 → 4, etc.

Score for a document-query pair: sum over terms t in both q and d:

$$\mathrm{score}(q,d) = \sum_{t \in q \cap d} (1 + \log_{10} \mathrm{tf}_{t,d})$$

The score is 0 if none of the query terms is present in the document.
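The log-frequency weight and the resulting overlap score can be sketched as follows. The function names and the toy term counts are assumptions made for illustration.

```python
import math

def log_tf_weight(tf):
    """1 + log10(tf) for tf > 0, and 0 otherwise."""
    return 1 + math.log10(tf) if tf > 0 else 0.0

def overlap_score(query_terms, doc_tf):
    """Sum the log-frequency weights of the terms shared by query and document."""
    return sum(log_tf_weight(doc_tf.get(term, 0)) for term in set(query_terms))

# Reproduce the mapping tf -> weight listed on the slide.
for tf in (0, 1, 2, 10, 1000):
    print(tf, round(log_tf_weight(tf), 1))

# Hypothetical document term counts and query.
doc_tf = {"caesar": 10, "brutus": 1}
score = overlap_score(["caesar", "brutus", "calpurnia"], doc_tf)
print(score)
```

Query terms that are absent from the document contribute nothing, so a document sharing no terms with the query scores 0.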

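Inverse Document Frequency (idf)

$\mathrm{df}_t$ is the document frequency of t: the number of documents that contain t

$\mathrm{df}_t$ is an inverse measure of the informativeness of t: the fewer documents contain t, the more informative t is

We define the idf (inverse document frequency) of t by

$$\mathrm{idf}_t = \log_{10} (N / \mathrm{df}_t)$$

where N is the number of documents in the collection.

We use $\log (N/\mathrm{df}_t)$ instead of $N/\mathrm{df}_t$ to dampen the effect of idf.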
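To see the dampening effect, the sketch below compares the raw ratio N/df with its base-10 logarithm. The collection size of 1,000,000 and the sample df values are assumptions chosen for illustration.

```python
import math

def idf(df, n_docs):
    """Inverse document frequency: log10(N / df)."""
    if df <= 0:
        raise ValueError("df must be positive")
    return math.log10(n_docs / df)

N = 1_000_000  # hypothetical collection size

for df in (1, 100, 10_000, 1_000_000):
    # The raw ratio spans six orders of magnitude; the log spans only 0 to 6.
    print(df, N / df, idf(df, N))
```

A term that occurs in every document gets idf 0, so it has no influence on ranking.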

tf-idf Weighting

The tf-idf weight of a term is the product of its tf weight and its idf weight.

$$w_{t,d} = (1 + \log_{10} \mathrm{tf}_{t,d}) \times \log_{10} (N / \mathrm{df}_t)$$

Best known weighting scheme in information retrieval

Note: the "-" in tf-idf is a hyphen, not a minus sign!

Alternative names: tf.idf, tf x idf

Increases with the number of occurrences within a document

Increases with the rarity of the term in the collection

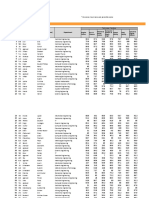
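A minimal sketch of the tf-idf weight that checks the two monotonicity properties listed above. The function name `tf_idf` and the sample numbers are hypothetical.

```python
import math

def tf_idf(tf, df, n_docs):
    """(1 + log10 tf) * log10(N / df), with weight 0 when the term is absent."""
    if tf == 0:
        return 0.0
    return (1 + math.log10(tf)) * math.log10(n_docs / df)

N = 10_000  # hypothetical collection size

# More occurrences within the document -> larger weight.
more_occurrences = tf_idf(10, 100, N) > tf_idf(1, 100, N)

# Rarer term in the collection (smaller df) -> larger weight.
rarer_term = tf_idf(5, 10, N) > tf_idf(5, 1000, N)

print(tf_idf(10, 100, N), more_occurrences, rarer_term)
```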

Example Weight Matrix

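Each document is now represented by a real-valued vector of tf-idf weights $\in \mathbb{R}^{|V|}$

| term | Antony and Cleopatra | Julius Caesar | The Tempest | Hamlet | Othello | Macbeth |
|---|---|---|---|---|---|---|
| Antony | 5.25 | 3.18 | 0 | 0 | 0 | 0.35 |
| Brutus | 1.21 | 6.1 | 0 | 1 | 0 | 0 |
| Caesar | 8.59 | 2.54 | 0 | 1.51 | 0.25 | 0 |
| Calpurnia | 0 | 1.54 | 0 | 0 | 0 | 0 |
| Cleopatra | 2.85 | 0 | 0 | 0 | 0 | 0 |
| mercy | 1.51 | 0 | 1.9 | 0.12 | 5.25 | 0.88 |
| worser | 1.37 | 0 | 0.11 | 4.15 | 0.25 | 1.95 |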

Example Weight Matrix (After Normalization)

Each document vector is now length-normalized :

| term | Antony and Cleopatra | Julius Caesar | The Tempest | Hamlet | Othello | Macbeth |
|---|---|---|---|---|---|---|
| Antony | 0.489364639 | 0.424394021 | 0 | 0 | 0 | 0.16145327 |
| Brutus | 0.112786898 | 0.814089159 | 0 | 0.2207715 | 0 | 0 |
| Caesar | 0.800693761 | 0.338981387 | 0 | 0.333365 | 0.04751 | 0 |
| Calpurnia | 0 | 0.205524148 | 0 | 0 | 0 | 0 |
| Cleopatra | 0.26565509 | 0 | 0 | 0 | 0 | 0 |
| mercy | 0.140750591 | 0 | 0.998328301 | 0.0264926 | 0.99774 | 0.40593964 |
| worser | 0.127700868 | 0 | 0.057797954 | 0.9162019 | 0.04751 | 0.89952535 |

$$w_{t,d}^{\text{norm}} = \frac{w_{t,d}}{\sqrt{\sum_{t'=1}^{n} w_{t',d}^{2}}}$$
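The normalization step can be checked against the first column of the matrix. The sketch below divides the "Antony and Cleopatra" tf-idf weights by their Euclidean length; the helper name `l2_normalize` is an assumption.

```python
import math

def l2_normalize(weights):
    """Divide each weight by the Euclidean length of the vector."""
    norm = math.sqrt(sum(w * w for w in weights))
    if norm == 0:
        return list(weights)  # an all-zero vector stays as it is
    return [w / norm for w in weights]

# tf-idf weights of "Antony and Cleopatra" from the unnormalized matrix
# (Antony, Brutus, Caesar, Calpurnia, Cleopatra, mercy, worser).
antony_and_cleopatra = [5.25, 1.21, 8.59, 0, 2.85, 1.51, 1.37]

normalized = l2_normalize(antony_and_cleopatra)
length_after = math.sqrt(sum(w * w for w in normalized))

print([round(w, 4) for w in normalized])
print(round(length_after, 6))
```

After normalization every document vector has length 1, so long and short documents become comparable.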

Exercise : tf-idf

Given a document containing terms with given frequencies:

A(3), B(2), C(1)

Assume the collection contains 10,000 documents and the document frequencies of these terms are:

A(50), B(1300), C(250)

Then:

A: tf = 3; idf = ; log-frequency weight = ; tf-idf weight = ;

B: tf = 2; idf = ; log-frequency weight = ; tf-idf weight = ;

C: tf = 1; idf = ; log-frequency weight = ; tf-idf weight = ;
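One way to check hand-computed answers to this exercise is a short script. It assumes the base-10 definitions of idf and log-frequency weight used earlier in these slides; the variable names are hypothetical.

```python
import math

N = 10_000  # collection size given in the exercise

# term: (tf in the document, df in the collection), as given in the exercise
terms = {"A": (3, 50), "B": (2, 1300), "C": (1, 250)}

results = {}
for term, (tf, df) in terms.items():
    idf = math.log10(N / df)        # inverse document frequency
    log_tf = 1 + math.log10(tf)     # log-frequency weight (tf > 0 here)
    results[term] = (idf, log_tf, log_tf * idf)
    print(f"{term}: tf={tf} idf={idf:.3f} log-tf={log_tf:.3f} tf-idf={log_tf * idf:.3f}")
```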

Documents & Query as Vectors

Document :

So we have a |V|-dimensional vector space

Terms are axes of the space

Documents are points or vectors in this space

Query :

Key idea 1: Do the same for queries: represent

them as vectors in the space

Key idea 2: Rank documents according to their

proximity to the query in this space

proximity = similarity of vectors

proximity ≈ inverse of distance

Graphic Representation : $D_i$ & Q

Example:

$D_1 = 2T_1 + 3T_2 + 5T_3$

$D_2 = 3T_1 + 7T_2 + T_3$

$Q = 0T_1 + 0T_2 + 2T_3$

[Figure: $D_1$, $D_2$, and Q drawn as vectors in the three-dimensional space with axes $T_1$, $T_2$, $T_3$]

Is $D_1$ or $D_2$ more similar to Q?

How to measure the degree of similarity?

Similarity Measure

A similarity measure is a function that computes the degree of similarity between two vectors.

Using a similarity measure between the query and each document:

It is possible to rank documents

It is possible to enforce a certain threshold so that the size of the retrieved set can be controlled.
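Both uses of a similarity score, ranking and thresholding, can be sketched in a few lines. The scores, the function name `rank`, and the default values are hypothetical; any similarity measure could supply the scores.

```python
def rank(scores, k=10, threshold=0.0):
    """Keep documents scoring at least `threshold`, best first, at most k of them."""
    kept = [(doc, score) for doc, score in scores.items() if score >= threshold]
    kept.sort(key=lambda pair: pair[1], reverse=True)
    return kept[:k]

# Hypothetical similarity scores between one query and four documents.
scores = {"d1": 0.81, "d2": 0.13, "d3": 0.40, "d4": 0.05}

top = rank(scores, k=2, threshold=0.1)
print(top)
```

Raising the threshold or lowering k shrinks the retrieved set without changing the order of the documents that remain.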

Cosine Similarity Measure

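Cosine similarity measures the cosine of the angle between two vectors.

Computing the cosine similarity :

Without normalization of $w_{t,d}$:

$$\mathrm{CosSim}(d_j, q) = \frac{\vec{d_j} \cdot \vec{q}}{|\vec{d_j}| \, |\vec{q}|} = \frac{\sum_{i=1}^{t} (w_{ij} \, w_{iq})}{\sqrt{\sum_{i=1}^{t} w_{ij}^{2} \cdot \sum_{i=1}^{t} w_{iq}^{2}}}$$

With normalization of $w_{t,d}$:

$$\mathrm{CosSim}(d_j, q) = \vec{d_j} \cdot \vec{q} = \sum_{i=1}^{t} (w_{ij} \, w_{iq})$$

where each weight has first been length-normalized:

$$w_{t,d}^{\text{norm}} = \frac{w_{t,d}}{\sqrt{\sum_{t'=1}^{n} w_{t',d}^{2}}}$$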
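Applied to the vectors from the graphic example ($D_1 = 2T_1 + 3T_2 + 5T_3$, $D_2 = 3T_1 + 7T_2 + T_3$, $Q = 2T_3$), a minimal sketch of the unnormalized formula looks like this. The function name `cos_sim` is an assumption.

```python
import math

def cos_sim(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # convention chosen here for all-zero vectors
    return dot / (norm_a * norm_b)

d1 = [2, 3, 5]
d2 = [3, 7, 1]
q = [0, 0, 2]

sim_d1 = cos_sim(d1, q)
sim_d2 = cos_sim(d2, q)
print(round(sim_d1, 2), round(sim_d2, 2))
```

$D_1$ comes out far closer to Q than $D_2$, because most of its weight lies on $T_3$, the only term in the query.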

Example : Cosine similarity amongst 3 documents

How similar are the novels

SaS: Sense and Sensibility

PaP: Pride and Prejudice, and

WH: Wuthering Heights?

Term frequencies (counts)

| term | SaS | PaP | WH |
|---|---|---|---|
| affection | 115 | 58 | 20 |
| jealous | 10 | 7 | 11 |
| gossip | 2 | 0 | 6 |
| wuthering | 0 | 0 | 38 |

Note: To simplify this example, we don't do idf weighting.

Answer : Cosine similarity amongst 3 documents

Log frequency weighting

| term | SaS | PaP | WH |
|---|---|---|---|
| affection | 3.0606978 | 2.763427994 | 2.301029996 |
| jealous | 2 | 1.84509804 | 2.041392685 |
| gossip | 1.30103 | 0 | 1.77815125 |
| wuthering | 0 | 0 | 2.579783597 |

CosSim

| | SaS | PaP | WH |
|---|---|---|---|
| SaS | 1 | 0.942083434 | 0.788681945 |
| PaP | | 1 | 0.694003346 |
| WH | | | 1 |
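The answer can be reproduced from the raw counts with a short script: apply the log-frequency weight to each count, then take the cosine between the resulting vectors. As in the example, no idf weighting is applied; the function names are assumptions.

```python
import math

# Term counts for affection, jealous, gossip, wuthering (from the example).
counts = {
    "SaS": [115, 10, 2, 0],
    "PaP": [58, 7, 0, 0],
    "WH": [20, 11, 6, 38],
}

def log_tf_weight(tf):
    return 1 + math.log10(tf) if tf > 0 else 0.0

def cos_sim(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

weights = {novel: [log_tf_weight(c) for c in tfs] for novel, tfs in counts.items()}

sim = {
    (a, b): cos_sim(weights[a], weights[b])
    for a in weights
    for b in weights
}

for pair in (("SaS", "PaP"), ("SaS", "WH"), ("PaP", "WH")):
    print(pair, round(sim[pair], 4))
```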

tf.idf weighting has many variants

Columns are acronyms for weight schemes.

Chart borrowed from Salton and Buckley (1988).

Thank You

Exercise: Compute CosSim amongst 4 documents

D1: Document 1

D2: Document 2

D3: Document 3

D4: Document 4

tf (counts)

| term | D1 | D2 | D3 | D4 |
|---|---|---|---|---|
| t1 | 50 | 32 | 4 | 15 |
| t2 | 0 | 16 | 0 | 10 |
| t3 | 42 | 2 | 0 | 23 |
| t4 | 18 | 0 | 17 | 19 |

Exercise: Compute CosSim amongst 4 documents (continued)

Assume the total number of documents in the collection is 1000

df (document counts)

| term | df |
|---|---|
| t1 | 24 |
| t2 | 50 |
| t3 | 11 |
| t4 | 9 |
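A possible way to check a hand-worked solution is sketched below. The exercise does not state which weighting to use; this sketch assumes the tf-idf weight $(1 + \log_{10} \mathrm{tf}) \times \log_{10}(N/\mathrm{df})$ from the earlier slides followed by cosine similarity, and all names are hypothetical.

```python
import math

N = 1000  # total number of documents stated in the exercise

# tf counts per document for t1..t4, and df per term (from the exercise tables).
tf = {
    "D1": [50, 0, 42, 18],
    "D2": [32, 16, 2, 0],
    "D3": [4, 0, 0, 17],
    "D4": [15, 10, 23, 19],
}
df = [24, 50, 11, 9]

def weight(tf_td, df_t):
    """Assumed weighting: (1 + log10 tf) * log10(N / df), 0 if the term is absent."""
    if tf_td == 0:
        return 0.0
    return (1 + math.log10(tf_td)) * math.log10(N / df_t)

def cos_sim(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

vectors = {doc: [weight(c, d) for c, d in zip(row, df)] for doc, row in tf.items()}
cos = {(a, b): cos_sim(vectors[a], vectors[b]) for a in vectors for b in vectors}

for a in vectors:
    print(a, [round(cos[(a, b)], 4) for b in vectors])
```

The result is a symmetric 4 x 4 matrix with 1 on the diagonal; a different weighting choice would give different off-diagonal values.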
